Data engineering has evolved from supporting basic reporting needs to powering mission-critical business intelligence that drives competitive advantage. Organizations now depend on data infrastructure that can process petabytes of information, deliver real-time insights, and adapt to changing business requirements without manual intervention.

The field is experiencing fundamental shifts that go beyond incremental improvements. New technologies, methodologies, and organizational approaches are reshaping how enterprises architect, build, and maintain their data systems. Companies that understand and adopt these trends will gain significant advantages in speed, efficiency, and decision-making capability.

These changes aren’t just technical upgrades but they represent strategic transformations in how organizations think about data as a business asset. The trends emerging in 2026 enable faster innovation cycles, more personalized customer experiences, and operational efficiencies that directly impact bottom-line performance.

What is Data Engineering?

Data engineering encompasses the systems, processes, and infrastructure that collect, transform, and deliver data to support business intelligence, analytics, and decision-making across organizations.

Modern data engineering goes beyond traditional ETL processes to include real-time streaming, machine learning pipeline management, data quality monitoring, and self-service analytics platforms. These systems must handle structured and unstructured data from hundreds of sources while maintaining performance, security, and governance standards.

The discipline has become increasingly strategic as organizations recognize data as a competitive differentiator. Data engineers now design systems that enable business users to access insights independently, support machine learning models in production, and provide the foundation for AI-driven business processes.

Today’s data engineering challenges include managing exponential data growth, supporting real-time decision making, ensuring data privacy compliance, and creating scalable architectures that can adapt to changing business needs without requiring complete rebuilds.

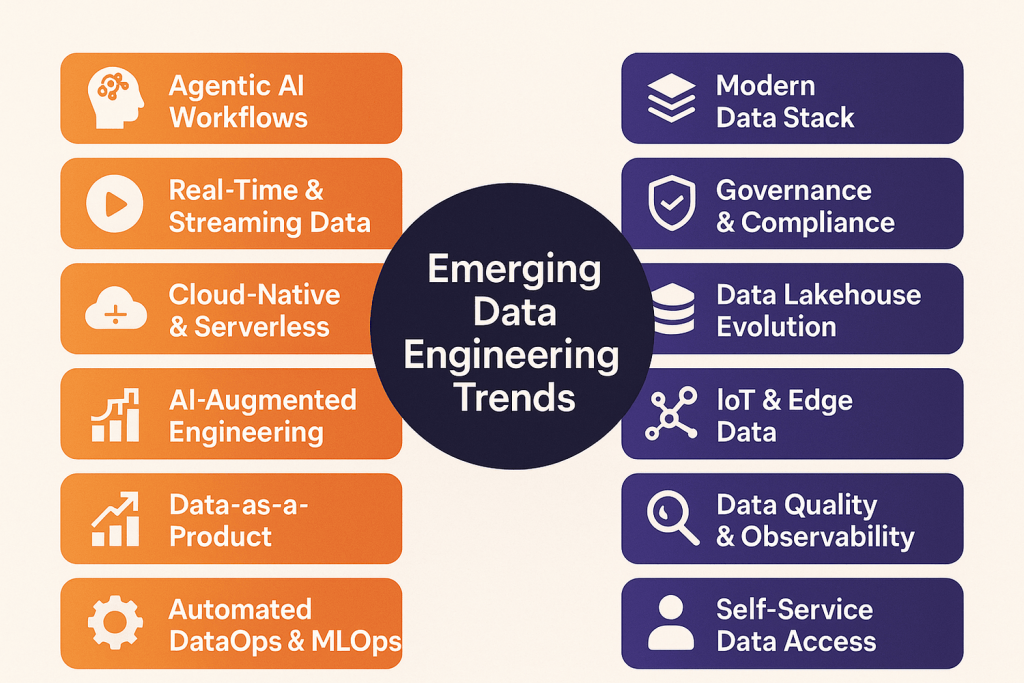

What Are the Emerging Data Engineering Trends Reshaping 2026?

The data engineering landscape is experiencing significant evolution across technology, processes, and organizational approaches that will define how enterprises handle data in the coming years.

Trend 1 – Rise of Agentic AI in Data Workflows

Agentic AI in data engineering is transforming data workflows by enabling autonomous pipeline management, intelligent data discovery, and self-optimizing infrastructure that reduces manual intervention requirements.

These AI systems can automatically detect schema changes in source systems, adjust transformation logic accordingly, and optimize processing workflows based on performance patterns.

Rather than waiting for human operators to identify and fix issues, agentic AI proactively manages data quality and pipeline performance. Many companies are already adopting AI in data engineering to automate schema evolution and pipeline optimization.

Major cloud providers are integrating agentic AI capabilities into their data platforms, with Amazon Web Services reporting 45% reduction in pipeline maintenance overhead for customers using AI-driven data management tools. Microsoft Azure’s autonomous data services can automatically scale resources, detect anomalies, and implement fixes without human intervention.

The impact extends beyond operational efficiency to strategic capability. Organizations using agentic AI in their data workflows can onboard new data sources 60% faster than traditional approaches, enabling more rapid response to business opportunities and market changes.

Trend 2 – Real-Time and Streaming Data Becomes the Norm

The benefits of real-time analytics have shifted real-time processing from specialized use cases to standard expectations across most business applications, driven by customer demands for immediate insights and personalized experiences.

Modern streaming architectures process millions of events per second while maintaining low latency and high availability. Apache Kafka, Apache Pulsar, and cloud-native streaming services enable organizations to build event-driven architectures that respond to business conditions as they occur.

Netflix processes over 500 billion events daily through their real-time data platform, enabling instant content recommendations and fraud detection. Financial services firms like JPMorgan Chase use streaming analytics to detect suspicious transactions within milliseconds of occurrence, preventing fraud before it impacts customers.

Trend 3 – Cloud-Native and Serverless Data Engineering

Serverless data engineering platforms support scalable infrastructure by eliminating management overhead while providing automatic scaling, pay-per-use pricing, and faster development cycles for data teams.

AWS Lambda, Google Cloud Functions, and Azure Functions enable data engineers to build processing workflows that scale automatically based on data volume and complexity.

These platforms handle infrastructure provisioning, monitoring, and optimization while teams focus on business logic and data transformation requirements. Serverless architectures reduce operational costs for organizations with variable data processing workloads. Companies no longer need to provision peak capacity for occasional high-volume periods, instead paying only for actual compute resources consumed.

The development speed advantages are equally significant. Data teams can deploy new processing workflows in minutes rather than weeks, enabling rapid experimentation and faster response to changing business requirements, especially when combined with cloud data integration that seamlessly connects diverse sources into serverless pipelines.

Trend 4 – AI-Augmented Data Engineering

AI augmentation helps data engineers write better code, identify optimization opportunities, and troubleshoot complex issues faster through intelligent assistance and automation tools.

GitHub Copilot and similar AI coding assistants now support data engineering languages and frameworks, helping engineers write SQL queries, Python data processing scripts, and configuration files more efficiently. These tools reduce development time while improving code quality through suggestions of best practices.

AI-powered data profiling tools automatically analyze data quality, identify patterns, and suggest appropriate data transformation techniques. In combination with AI data extraction, these tools can streamline the ingestion and preparation of large, complex datasets, ensuring cleaner inputs for downstream analytics.

DataRobot and similar platforms can recommend optimal data preprocessing approaches based on downstream analytics requirements and historical performance data.

Debugging and optimization benefit significantly from AI assistance. Tools like Datadog’s AI-powered anomaly detection can identify performance bottlenecks and suggest specific remediation steps, reducing mean time to resolution for data pipeline issues.

Trend 5 – Data-as-a-Product Mindset

Organizations are treating data like product offerings, complete with defined user experiences, service level agreements, and continuous improvement processes that prioritize business value delivery.

This approach means data teams create defined interfaces, documentation, and support processes for internal data consumers. Business users can access reliable, well-documented data products without needing to understand underlying technical complexity.

Spotify’s data platform team operates using product management principles, treating data scientists and business analysts as customers with specific needs and success metrics. This approach has reduced time-to-insight for new analytics projects while improving data adoption across business functions through systematic analytics optimization practices.

The product mindset also includes lifecycle management, with data products having roadmaps, version control, and retirement processes that ensure sustainable long-term value rather than one-time project deliveries.

Trend 6 – DataOps, MLOps, and Orchestration Automation

DataOps and MLOps practices bring software development methodologies to data engineering, enabling faster deployment cycles, better quality control, and more reliable production systems.

Automated testing, continuous integration, and deployment pipelines help data teams catch issues earlier and deploy changes more frequently without compromising system reliability. Tools like Apache Airflow, Prefect, and Dagster provide workflow orchestration that manages complex dependencies and error handling automatically.

Companies implementing DataOps report 50% fewer production data issues and 60% faster resolution times when problems do occur, according to statistics The combination of automated testing, monitoring, and deployment processes creates more stable and predictable data operations.

MLOps integration ensures that machine learning models receive fresh, high-quality data while model performance feedback influences data pipeline optimization. This closed-loop approach improves both data quality and model accuracy over time.

Many organizations now rely on specialized data engineering services to implement these practices at scale, ensuring robust and future-ready data operations.

Implement automation that accelerates delivery and improves quality.

Trend 7 – Modern Data Stack Maturity

The modern data stack has evolved from experimental tools to mature, integrated platforms that provide comprehensive data lifecycle management with proven scalability and reliability.

Tools like Snowflake, Databricks, and BigQuery now offer enterprise-grade features including fine-grained access controls, automatic optimization, and integrated machine learning capabilities. These platforms handle complex data workloads while providing familiar SQL interfaces for business users.

dbt (data build tool) has become standard for data transformation, enabling version control, testing, and documentation of data processing logic. The integration between different components has matured significantly. Modern data stacks provide seamless workflows from ingestion through visualization, reducing the complexity and maintenance overhead of managing multiple disparate tools.

Trend 8 – Data Governance, Security, and Compliance by Design

Data governance strategy and security are being built into data engineering architectures from the ground up rather than added as afterthoughts, driven by regulatory requirements and business risk management needs. Zero-trust security models require authentication and authorization for every data access, while encryption at rest and in transit becomes standard practice.

Tools like Apache Ranger and AWS Lake Formation provide fine-grained access controls that can restrict data access down to individual columns and rows. GDPR, CCPA, and other privacy regulations require organizations to track data lineage, implement right-to-be-forgotten capabilities, and demonstrate compliance through audit trails.

Modern data platforms include these capabilities as core features rather than add-on components. Automated compliance monitoring reduces manual audit preparation time while providing continuous assurance that data handling practices meet regulatory requirements. This proactive approach reduces compliance risk and associated business costs.

Trend 9 – Evolving Data Lakehouse Architecture

Data lake architecture and lakehouse designs combine the flexibility of data lakes with the performance and reliability of data warehouses, providing unified platforms for diverse analytical workloads.

Delta Lake, Apache Iceberg, and Apache Hudi enable ACID transactions, schema evolution, and time travel capabilities on data stored in object storage systems. This combination provides warehouse-like reliability with lake-like flexibility and cost efficiency.

Companies like Uber and Netflix use lakehouse architectures to support both batch analytics and real-time applications from the same data storage layer, reducing complexity and data duplication.

This unified approach eliminates the need to maintain separate systems for different analytical workloads. When choosing between data lakes vs data warehouses, lakehouses often provide the best of both worlds.

According to research, performance improvements from lakehouse architectures include 40% faster query execution and 60% reduced storage costs compared to traditional data warehouse approaches, while maintaining the flexibility to handle unstructured and semi-structured data.

Trend 10 – IoT and Edge Data Engineering

Internet of Things deployments generate massive data volumes at network edges, requiring new approaches to data collection, processing, and transmission that balance local intelligence with centralized analytics. Edge computing platforms process sensor data locally to reduce bandwidth requirements and enable real-time responses, while transmitting only relevant insights to central data platforms. Organizations often leverage Databricks consulting services to unify these edge and cloud data workflows, ensuring seamless integration and scalable analytics across environments.

Manufacturing companies like General Electric process equipment sensor data at factory locations to enable immediate maintenance decisions, while sending aggregated performance data to central systems for fleet-wide optimization and predictive analytics.

5G networks enable more sophisticated edge processing capabilities, supporting AI inference and complex analytics at edge locations that previously required cloud connectivity. This capability opens new possibilities for autonomous systems and real-time decision making.

Trend 11 – Data Quality & Observability as a First-Class Citizen

Data quality monitoring and observability have become integrated components of data infrastructure rather than separate tools, providing continuous visibility into data health and pipeline performance.

Modern data platforms include built-in data quality metrics, anomaly detection, and lineage tracking that provide immediate visibility when issues occur. Tools like Great Expectations, Monte Carlo, and Bigeye provide comprehensive data observability that prevents quality issues from impacting downstream analytics.

Proactive data quality monitoring prevents most data issues from reaching production analytics, according to industry research. Early detection enables rapid remediation before business users encounter problems or make decisions based on incorrect data.

Data lineage tracking becomes automatic rather than manual documentation, providing complete visibility into how data flows through complex processing pipelines.

Incorporating these approaches into data engineering best practices ensures that organizations maintain trustworthy, high-quality data pipelines that scale effectively.

Trend 12 – Data Democratization & Self-Service Analytics

Self-service business intelligence platforms enable business users to access and analyze data independently, reducing bottlenecks in data teams while improving business agility and decision-making speed.

Low-code and no-code tools like Tableau Prep, Power BI Dataflows, and Google Cloud’s AutoML enable business analysts to create data transformations and models without writing code. These tools maintain governance and quality controls while expanding access to data capabilities.

Business users can now create their own data marts, perform advanced analytics, and build predictive models using intuitive interfaces backed by enterprise-grade data infrastructure. This democratization reduces time-to-insight from weeks to days for many business questions.

Data literacy programs help organizations maximize the value of democratized analytics by training business users on best practices for data analysis, interpretation, and decision-making based on data insights.

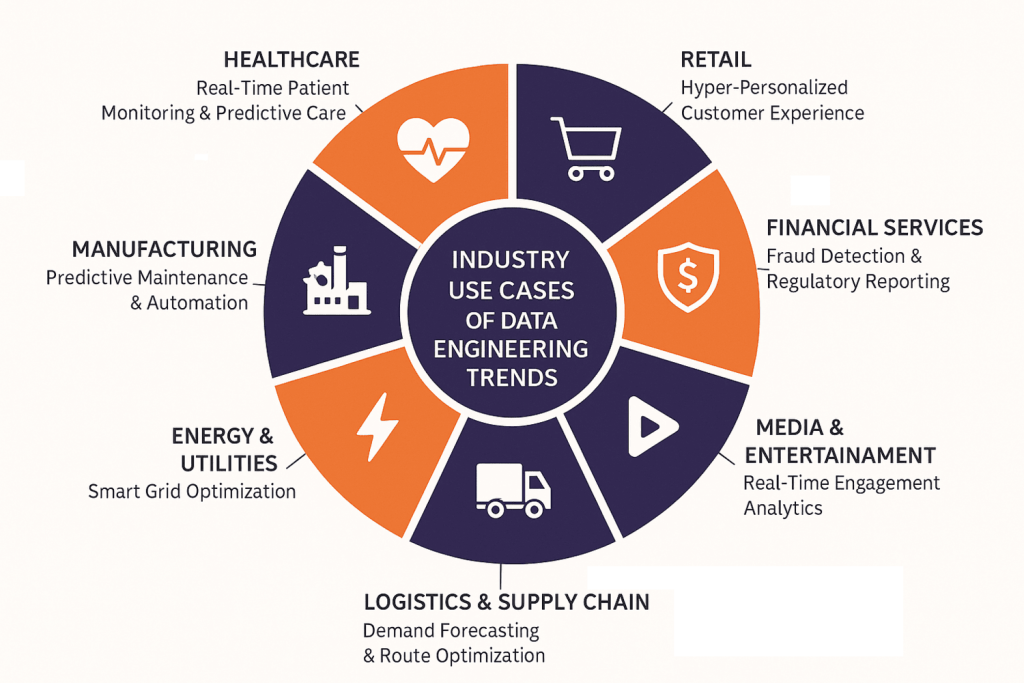

Industry Use Cases of Emerging Data Engineering Trends

Different sectors are adopting data engineering innovations in ways that address their specific operational challenges and competitive requirements.

Healthcare – Real-Time Patient Monitoring & Predictive Care

Healthcare organizations use streaming data architectures to monitor patient vital signs continuously and predict health complications before they become critical, improving patient outcomes while reducing costs.

Real-time analytics platforms process data from wearable devices, hospital monitors, and electronic health records to identify patients at risk of deterioration. Hospitals report reduction in emergency interventions through healthcare predictive analytics and monitoring systems.

Data lakehouse architectures enable healthcare systems to combine structured clinical data with unstructured notes, imaging data, and genomic information for comprehensive patient analytics. This unified approach supports precision medicine initiatives and clinical research.

Retail – Hyper-Personalized Customer Experience

Retail companies leverage real-time customer data to deliver personalized product recommendations, dynamic pricing, and targeted marketing that increases conversion rates and customer lifetime value.

Streaming analytics process customer behavior data to adjust website experiences in real-time, showing relevant products and offers based on current browsing patterns.

Modern data stacks integrate customer data from web interactions, mobile apps, in-store purchases, and social media to create comprehensive customer profiles that inform marketing automation, inventory planning decisions, and customer experience analytics initiatives.

Financial Services – Fraud Detection & Regulatory Reporting

Financial institutions use AI-augmented data engineering to detect fraudulent transactions within milliseconds while maintaining comprehensive audit trails for regulatory compliance requirements.

Machine learning models analyze transaction patterns in real-time to identify suspicious activity, reducing false positives by 60% compared to rule-based systems while catching more actual fraud attempts, according to research. Automated compliance reporting systems generate regulatory reports from real-time data streams, reducing manual preparation time from days to hours while ensuring accuracy and completeness of regulatory submissions.

Media & Entertainment – Real-Time Engagement Analytics

Media companies process viewer behavior data in real-time to optimize content recommendations, adjust streaming quality, and inform content production decisions based on audience engagement patterns.

Streaming platforms analyze viewer interactions continuously to predict churn risk and trigger retention campaigns, resulting in improvement in subscriber retention rates through proactive intervention.

Real-time data warehouses further enhance these efforts by allowing media teams to query fresh engagement data instantly, enabling faster decision-making and more personalized viewer experiences.

Data-as-a-product approaches also enable content creators to access audience insights through self-service analytics platforms, accelerating content development cycles and improving alignment with viewer preferences. Similar approaches are also being adopted in energy sectors, where digital oilfield solutions integrate real-time data streams to optimize operations and improve decision-making efficiency.

Logistics & Supply Chain – Demand Forecasting & Route Optimization

Logistics companies use IoT data engineering to track shipments in real-time, optimize delivery routes dynamically, and predict maintenance needs for transportation fleets.

Edge computing processes GPS, weather, and traffic data to adjust delivery routes automatically, reducing fuel costs and improving on-time delivery rates through responsive logistics optimization.

Predictive analytics combine historical demand patterns with external factors like weather and economic indicators to improve inventory planning, while predictive maintenance IoT solutions help transportation fleets minimize downtime and extend asset lifespan.

Energy & Utilities – Smart Grid Optimization

Utility companies process sensor data from smart meters, power generation equipment, and grid infrastructure to optimize energy distribution, predict equipment failures, and support renewable energy integration.

Real-time analytics enable automatic load balancing and outage response, reducing power disruption duration through faster detection and automated switching systems.

Data lakehouse architectures combine operational data with weather forecasts and energy market prices to optimize generation scheduling and energy trading decisions.

Many organizations also engage data integration consultants to design and implement these complex architectures, ensuring seamless integration of diverse data sources for more accurate forecasting and smarter energy management.

Solve real-world problems with modern data engineering.

Data Engineering Trends 2026 – Summary

In 2026, data engineering is evolving toward autonomous AI integration, real-time processing, and self-service analytics that enable faster, smarter decision-making. Key trends include agentic AI, cloud-native and serverless architectures, AI-augmented pipelines, data-as-a-product approaches, and mature modern data stacks. These innovations empower organizations to process vast data volumes efficiently, improve operational agility, enhance customer experiences, and maintain enterprise-grade governance. By adopting these trends, businesses can transform raw data into strategic intelligence, reduce operational overhead, and gain a competitive advantage across industries such as healthcare, retail, finance, media, logistics, and energy. Folio3 Data Services helps organizations implement these trends to build scalable, future-ready data solutions.

FAQs

What is the future of data engineering?

The future centers on autonomous, AI-driven systems that require minimal human intervention for routine operations while enabling faster innovation and business responsiveness. Organizations will move from managing infrastructure to designing intelligent data products that serve business needs automatically.

What are the top data engineering trends in 2026?

Key trends include agentic AI integration, universal real-time processing, serverless architectures, and data-as-a-product approaches. These trends focus on reducing operational overhead while increasing business value and enabling self-service analytics capabilities.

How is AI impacting data engineering?

AI transforms data engineering through autonomous pipeline management, intelligent code generation, and predictive optimization that reduces manual work by 40-60%. AI also enables better data quality monitoring, automatic schema evolution handling, and intelligent resource scaling.

What is the role of DataOps and MLOps in modern data engineering?

DataOps and MLOps bring software development practices to data systems, enabling faster deployment cycles, better quality control, and more reliable production operations. These practices reduce data issues by 50% while accelerating time-to-value for new data initiatives.

Conclusion

Data engineering trends in 2026 reflect a fundamental shift toward autonomous, intelligent systems that reduce operational overhead while increasing business agility. Organizations that adopt these trends will gain major advantages in speed, cost efficiency, and decision-making capability.

The convergence of AI augmentation, real-time processing, and self-service analytics creates opportunities for businesses to become truly data-driven without requiring massive technical teams or infrastructure investments. These trends democratize advanced data capabilities while maintaining enterprise-grade reliability and governance.

To fully capitalize on these trends, organizations need the right strategy and expertise. Folio3 Data Services helps businesses design and implement scalable, future-ready data solutions ensuring innovation, reliability, and long-term growth.