Data volumes have exploded across industries. IDC estimates the global datasphere will reach 175 zettabytes by 2025, with enterprises managing unprecedented amounts of structured and unstructured information. Traditional storage systems weren’t designed for this scale, and organizations now face real challenges: systems that can’t keep pace with growth, costs that spiral out of control, and performance bottlenecks that slow down analytics.

Big data storage refers to infrastructure designed specifically to handle massive datasets that traditional databases can’t manage efficiently. It’s built around three core principles: horizontal scalability (adding more machines rather than upgrading single servers), distributed processing across multiple nodes, and flexible schemas that accommodate various data types without rigid structures.

The shift from traditional storage isn’t just about capacity. Companies require systems that can ingest streaming data in real-time, support parallel processing for faster analytics, and scale cost-effectively as data volumes increase. When your storage can’t keep up, every downstream process suffers from analytics to machine learning to customer-facing applications.

What is Big Data Storage and Why Do Traditional Systems Fail?

Big data storage architecture handles datasets too large or complex for conventional database systems. We’re talking about petabytes and exabytes of information that needs to be stored, processed, and analyzed efficiently.

Traditional relational databases were built for structured data with predefined schemas. They scale vertically, meaning you upgrade to more powerful hardware as needed for additional capacity. This approach quickly reaches physical and economic limits. A single server can only get so powerful before costs become prohibitive.

There are specific reasons why traditional systems fail with big data:

- Scale limitations mean conventional databases struggle once you cross into terabyte and petabyte territory. They weren’t architected to distribute data across hundreds or thousands of machines. Performance degrades as tables grow, queries slow down, and maintenance becomes complex.

- Cost inefficiency surfaces when you’re forced into expensive hardware upgrades. Vertical scaling requires increasingly powerful servers, specialized storage arrays, and often proprietary solutions that lock you into vendor ecosystems. The math becomes increasingly difficult to comprehend at enormous data volumes.

- Flexibility constraints become apparent with diverse data types. Traditional systems expect structured, tabular data. When you’re dealing with JSON documents, images, video files, log data, and sensor readings all at once, rigid schemas become obstacles rather than organizational tools.

- Performance bottlenecks emerge under analytical workloads. OLTP databases were optimized for transactional operations, quick reads, and writes of individual records. Analytical queries that scan millions of rows bring traditional systems to their knees, especially when compared to modern, real-time data warehouses designed for instant insights and parallel query processing.

Big data storage technologies solve these problems through distributed architectures. Instead of one powerful machine, you have clusters of commodity hardware working in parallel. Data gets partitioned across nodes, processed where it lives, and replicated for fault tolerance. This approach scales horizontally that just adds more machines when you need more capacity.

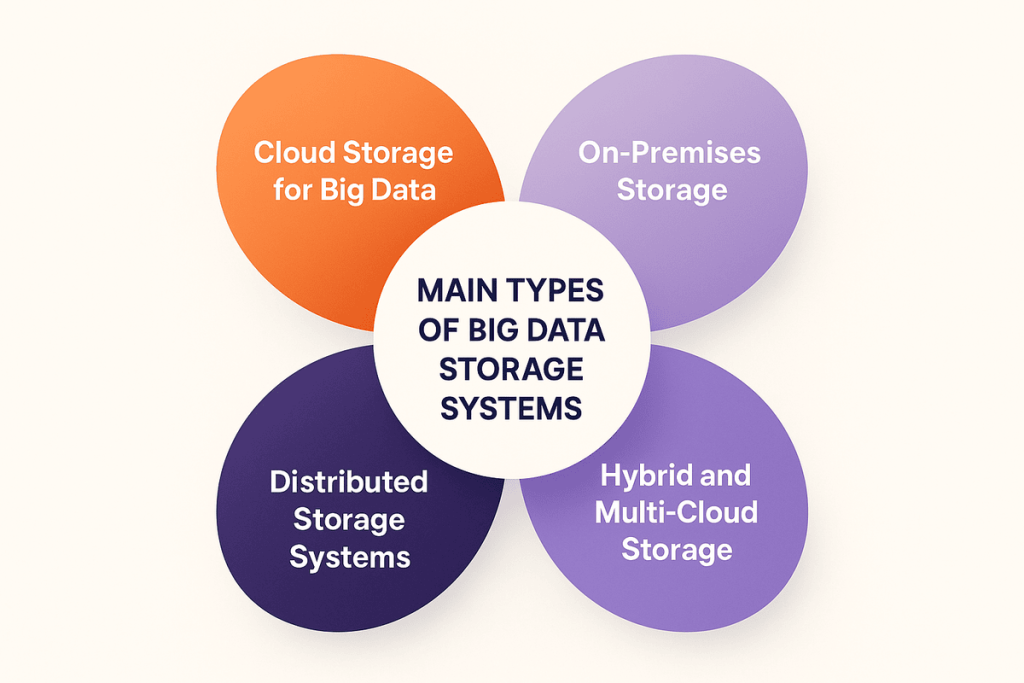

What Are the Major Types of Big Data Storage Systems?

Modern enterprises rely on big data storage to manage the massive influx of structured and unstructured data, driving analytics and innovation. The right combination of big data storage technologies enables organizations to balance cost, control, and performance in powering data-driven insights at scale.

So, choosing the exemplary big data storage architecture depends on factors such as scalability, compliance, and cost considerations.

Cloud Storage for Big Data

Big data cloud storage has become the standard for most organizations, offering elastic scalability, pay-as-you-go pricing, and minimal infrastructure management. Platforms like AWS, Azure, and Google Cloud deliver integrated analytics, object storage (e.g., Amazon S3), and data lake capabilities that support machine learning and business intelligence workloads. However, data egress costs, latency, and data residency regulations can pose challenges.

On-Premises Storage

Enterprises with strict compliance needs or predictable workloads may prefer on-premises big data storage solutions. Systems like Hadoop, HDFS, and Ceph provide full control, consistent performance, and low-latency access. The trade-off is higher capital costs and greater maintenance responsibility.

Hybrid and Multi-Cloud Storage

A hybrid model blends local and cloud resources, keeping sensitive data on-site while leveraging cloud for analytics. Multi-cloud setups prevent vendor lock-in and improve redundancy but require a strong data governance strategy and synchronization tools to ensure consistency, compliance, and unified visibility across environments.

Distributed Storage Systems

Distributed data storage for big data underpins most modern architectures. Technologies like HDFS, Ceph, and MinIO ensure scalability, reliability, and fault tolerance by spreading data across multiple nodes.

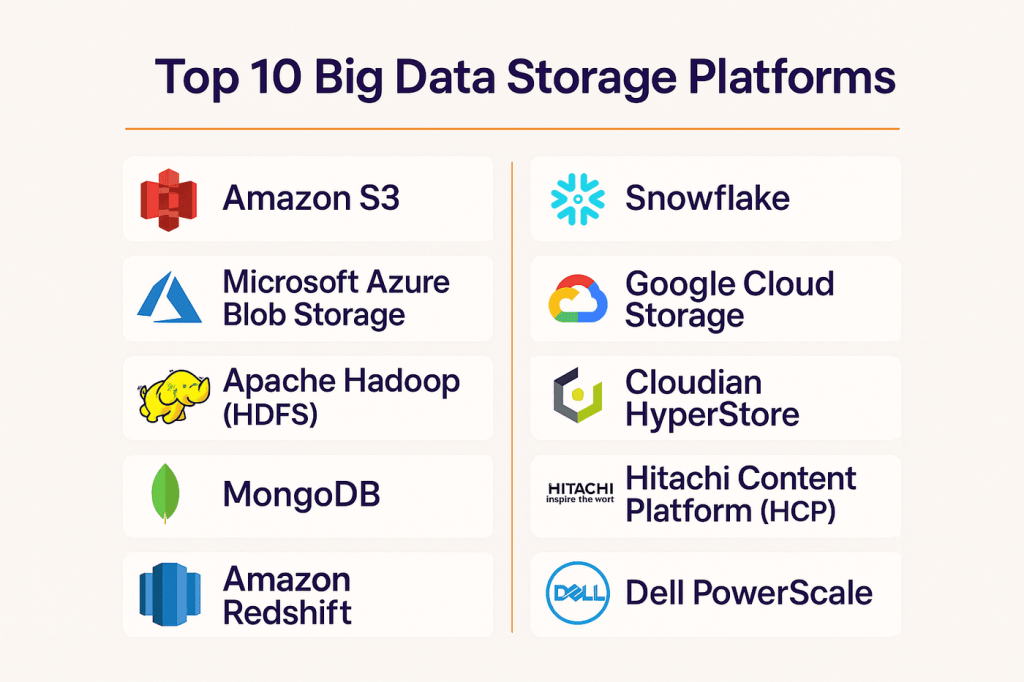

What Are the Top 10 Big Data Storage Solutions and Systems?

The market offers numerous options for big data cloud storage and on-premises deployment. Here’s what enterprises are actually using.

1. Amazon S3

Amazon’s Simple Storage Service has become synonymous with cloud object storage. It handles any data type, scales automatically, and integrates with the broader AWS ecosystem.

- Best for: Organizations already in AWS, data lakes, backup and archival, content distribution.

- Pros: Eleven nines of durability, extensive API support, intelligent tiering that automatically moves data between storage classes, and deep integration with analytics services like Athena and Redshift.

- Cons: Costs can escalate with data egress, performance varies by storage class, and vendor lock-in through proprietary features.

- Pricing: Starts at $0.023 per GB per month for standard storage, with discounts for infrequent access and archival tiers.

2. Microsoft Azure Blob Storage

Azure’s object storage service competes directly with S3 while offering tighter integration with Microsoft’s ecosystem. It supports hot, cool, and archive access tiers with automatic lifecycle management, making it a strong foundation for a modern data integration architecture that connects diverse analytics and application workloads.

- Best for: Enterprises using Microsoft products, hybrid cloud scenarios with Azure Stack, and media streaming applications.

- Pros: Strong Windows integration, competitive pricing, global replication options, support for Azure Data Lake Storage Gen2 for analytics workloads.

- Cons: Less mature ecosystem than AWS, some features lag behind S3, and documentation can be inconsistent.

- Pricing: Similar to S3, pricing starts around $0.018 per GB for hot storage, with lower tiers available for infrequent access.

3. Apache Hadoop (HDFS)

The Hadoop Distributed File System pioneered distributed storage for big data. While not as dominant as it once was, HDFS remains widely deployed in enterprises with on-premises infrastructure and is a key component of big data platforms.

- Best for: Batch processing workloads, organizations with existing Hadoop investments, situations requiring data locality for compute.

- Pros: Open source with no licensing costs, battle-tested at massive scale, strong ecosystem of tools, runs on commodity hardware.

- Cons: Requires significant operational expertise, not ideal for small files, limited real-time capabilities, and maintenance overhead.

- Pricing: Hardware and operational costs only, typically $1,000 to $3,000 per terabyte for fully loaded costs, including servers, networking, and personnel.

4. MongoDB

While primarily known as a NoSQL database, MongoDB stores massive amounts of unstructured and semi-structured data. Its document model handles complex, nested data structures that relational databases struggle with.

- Best for: Applications requiring flexible schemas, real-time analytics, content management systems,and IoT data storage.

- Pros: Intuitive document model, strong query capabilities, horizontal sharding for scalability, and a managed Atlas service that eliminates operational burden.

- Cons: Can be memory-intensive, joins across collections are less efficient than relational databases, and schema flexibility can lead to inconsistency without discipline.

- Pricing: Atlas starts with a free tier, followed by paid plans ranging from $0.08 per hour for small instances to thousands of dollars per month for production clusters.

5. Amazon Redshift

Redshift is part of a comprehensive data warehouse strategy rather than pure storage, but it’s how many organizations store and analyze structured big data. It’s optimized for analytical queries across petabyte-scale datasets.

- Best for: Business intelligence, SQL-based analytics, data warehousing, and organizations needing fast query performance on structured data.

- Pros: Columnar storage reduces I/O, massively parallel processing delivers fast query performance, integrates seamlessly with AWS services, and automatic compression.

- Cons: Not suitable for transactional workloads, requires data modeling expertise, costs add up quickly for large clusters, and less flexible than data lakes.

- Pricing: Starts at $0.25 per hour for small nodes for production workloads typically run $2,000 to $10,000+ monthly.

6. Snowflake

Snowflake has disrupted traditional data warehousing with a cloud-native architecture that separates compute from storage. You can scale each independently, paying only for what you use.

- Best for: Organizations wanting warehouse capabilities without infrastructure management, workloads with variable compute needs, and multi-cloud strategies.

- Pros: True separation of storage and compute, instant scaling, support for semi-structured data, and works across AWS, Azure, and GCP. The Snowflake architecture enables unique capabilities for modern data workloads.

- Cons: Can become expensive with inefficient queries, less control than self-managed solutions, and vendor-specific SQL extensions create some lock-in.

- Pricing: Storage around $40 per terabyte per month, compute charged by the second, starting at $2 per credit (roughly one hour of the smallest warehouse).

7. Google Cloud Storage

Google’s object storage emphasizes global availability and integration with its analytics platform. It offers unified storage classes and automatic tiering based on access patterns.

The platform also supports big data and knowledge management initiatives by allowing seamless connection between storage, analytics, and AI-driven insights.

- Best for: Organizations using Google Cloud, machine learning workloads with AI Platform, global content delivery, and integration with BigQuery.

- Pros: Strong consistency, excellent integration with Google’s analytics tools, competitive pricing for multi-regional storage, and simple lifecycle management.

- Cons: Smaller ecosystem than AWS, fewer third-party integrations, and data transfer costs between regions.

- Pricing: Standard storage starts at $0.020 per GB per month, with nearline and coldline options for less frequently accessed data.

8. Cloudian HyperStore

Cloudian provides S3-compatible object storage you can deploy on-premises or in private clouds. It’s designed for organizations wanting cloud-like storage without sending data off-site, making it a reliable foundation for building a secure data lake architecture that supports scalable analytics.

- Best for: Regulated industries, hybrid cloud architectures, organizations with compliance requirements preventing public cloud use.

- Pros: Full S3 API compatibility, deploy anywhere, no data egress fees, flexible capacity expansion.

- Cons: Requires infrastructure investment, operational responsibility, smaller community than public cloud alternatives.

- Pricing: Typically sold as appliances or software licenses, costs vary by deployment size but are generally competitive with public cloud at large scale.

9. Hitachi Content Platform (HCP)

HCP is an enterprise object storage platform designed for long-term data retention and compliance. It emphasizes immutability, security, and archival capabilities, often serving as a backbone for organizations leveraging data architecture services to build compliant and scalable storage ecosystems.

- Best suited for: Healthcare organizations, financial services, and regulated industries, as well as applications requiring WORM (write-once, read-many) storage.

- Pros: Strong compliance features, immutable storage options, multi-tenancy support, global namespace across distributed deployments.

- Cons: Enterprise-focused pricing, requires dedicated infrastructure, less suitable for frequently changing data.

- Pricing: Enterprise software licensing model, typically quoted based on capacity and feature requirements.

10. Dell PowerScale

Previously known as Isilon, PowerScale is a scale-out NAS platform that handles unstructured data at scale. It’s particularly strong for file-based workloads requiring high throughput.

- Best for: Media and entertainment, genomics, HPC environments, any workload requiring high-performance file storage.

- Pros: Linear scalability to tens of petabytes, the OneFS operating system provides a single namespace, and strong performance for large sequential I/O.

- Cons: Requires significant capital investment, is specialized for use cases, and is not ideal for object storage workloads.

- Pricing: Hardware purchase model, typically $1,500 to $3,000 per usable terabyte, depending on performance tier and configuration.

From data lakes to hybrid cloud models, Folio3 helps you build flexible, secure, and future-proof storage ecosystems.

How Should You Choose the Right Big Data Storage Solution?

Selecting the right big data storage solution means balancing performance, scalability, cost, and compliance. There’s no universal “best” option, only the one that aligns with your data strategy and business goals.

Data Volume and Growth

Estimate your current data footprint and expected growth. Cloud-based big data storage systems handle unpredictable scaling far better than on-premises setups, which require hardware planning and capital investment.

At the same time, storage solutions designed with big data predictive analytics in mind can help organizations forecast data growth patterns and optimize capacity planning. However, at petabyte scale, on-premises solutions may become more cost-efficient for long-term storage.

Data Types and Formats

Your big data storage options should match the type of data you manage. Structured data suits columnar databases like Redshift, while semi-structured formats (JSON, XML) fit document stores such as MongoDB. Unstructured data—images, videos, or IoT streams works best in object storage or data lakes that handle diverse formats with flexibility. Understanding data lakes and data warehouses helps in making the right choice.

Performance and Latency

For real-time analytics or applications requiring millisecond responses, local or hybrid storage is ideal. Batch analytics workloads, by contrast, can rely on cloud object storage without performance trade-offs.

Cost and Budget

Cloud storage offers elasticity but costs can add up due to data transfer and API charges. On-premises systems demand upfront investment but deliver predictable expenses and better economics for stable workloads.

Security and Compliance

Industries like healthcare or finance must ensure encryption, auditability, and regulatory compliance. Cloud providers offer certifications, but configuration errors can expose data making governance critical.

Integration with Analytics Tools

Ensure your big data storage architecture integrates seamlessly with analytics and enterprise business intelligence stack. If you’re leveraging AWS, pairing S3 with Redshift or Athena simplifies workflows. Similarly, Spark-based environments benefit from HDFS or S3-compatible storage for distributed processing. Favor open standards and APIs to avoid vendor lock-in and ensure flexibility as your infrastructure evolves.

How Does Big Data Differ from Traditional Data?

Understanding the differences helps clarify why specialized big data storage technologies are necessary. The shift from traditional to big data storage isn’t just about handling more information. It represents different assumptions about data structure, processing patterns, and acceptable trade-offs between consistency and availability.

| Aspect | Traditional Data | Big Data |

| Volume | Gigabytes to terabytes | Terabytes to exabytes |

| Velocity | Batch updates, scheduled processing | Real-time streams, continuous ingestion |

| Variety | Structured, predefined schemas | Structured, semi-structured, unstructured |

| Storage Architecture | Vertical scaling, single servers | Horizontal scaling, distributed clusters |

| Processing Model | Centralized, move data to compute | Distributed, move compute to data |

| Schema | Schema-on-write, rigid structure | Schema-on-read, flexible structure |

| Cost Model | Expensive specialized hardware | Commodity hardware or pay-per-use |

| Query Patterns | OLTP, transactional consistency | OLAP, eventual consistency acceptable |

| Data Sources | Internal business applications | IoT sensors, social media, logs, external feeds |

| Typical Tools | Oracle, SQL Server, MySQL | Hadoop, Spark, NoSQL databases, object storage |

What Are the 4 Major Challenges in Big Data Storage?

Implementing big data storage solutions comes with complex challenges that demand thoughtful architecture and governance.

1. Data Growth and Scalability

Data growth outpaces expectations, what begins as terabytes quickly scales to petabytes. The real challenge lies in maintaining performance as data expands. Traditional systems often slow down or hit scaling limits.

Modern big data storage architectures must scale horizontally and support lifecycle management policies to archive or delete unused data. Cloud platforms simplify scalability but require cost monitoring, while on-premises systems demand careful capacity planning to avoid both over-provisioning and shortages.

Partnering with experienced data engineering services providers can further ensure that scalability and architecture choices align with long-term data growth needs.

2. High Storage Costs and Optimization

At scale, even small per-gigabyte costs translate to millions annually. Cloud pricing tiers, transfer fees, and API charges can inflate bills unexpectedly. To optimize, organizations must align storage tiers with access frequency, automate lifecycle policies, and use compression or deduplication to minimize data footprint.

A well-planned data ingestion architecture also helps reduce redundant storage by managing how and when data enters the system. Proper optimization can cut costs by 30–50% without sacrificing accessibility.

3. Security, Privacy, and Compliance Risks

Centralized big data storage technologies attract security and compliance scrutiny. Data breaches lead to massive fines and trust erosion. Encryption, role-based access, and frequent audits mitigate risks, while adherence to GDPR, HIPAA, and PCI-DSS ensures regulatory compliance. Automated data retention and deletion rules help balance compliance with cost efficiency.

4. Performance and Latency Management

Big data analytics demands high throughput and low latency. Network bottlenecks, not disks, often limit performance. Optimizing data locality by processing data near where it resides improves speed, while caching and tiered storage maintain efficiency for mixed workloads. Integrating these practices within a big data pipeline helps ensure smoother data flow and consistent performance across analytics environments.

What Are 5 Best Practices for Big Data Storage Management?

Effective big data storage management isn’t a one-time setup but it’s an ongoing process that ensures scalability, performance, and cost efficiency across evolving data landscapes.

1. Data Compression and Deduplication

Enable compression by default using formats like Parquet and algorithms such as Zstandard to reduce storage costs by up to 70%. Deduplication whether at file or block level eliminates redundant data, especially in backups and shared repositories. Apply these optimizations during deployment to avoid expensive retrofitting later.

2. Automated Storage Tiering

Not all data requires high-performance storage. Tiering moves data automatically based on usage, where hot data stays on fast drives, while cold data shifts to cheaper archival tiers. Cloud platforms like AWS S3 Intelligent-Tiering automate this process, reducing costs while maintaining accessibility.

Many organizations rely on data integration consulting to align storage tiering with their data workflows and ensure analytics systems access the right data at the right performance level. On-premises systems can use policy-driven automation to achieve similar results.

3. Backup and Disaster Recovery

Replication alone isn’t enough. Follow the 3-2-1 rule: three data copies, on two media types, with one offsite. Regularly test restoration procedures to ensure reliability. This approach aligns with data migration strategy best practices.

For cloud environments, replicate across regions or providers to safeguard against outages or ransomware attacks.

4. Security and Access Control

Implement least-privilege policies using IAM roles instead of static credentials. Encrypt data at rest and in transit, and maintain audit trails with tools like AWS CloudTrail or Google Cloud Audit Logs. Consistent monitoring prevents unauthorized access and ensures compliance with regulations.

5. Cost Optimization

Monitor spending continuously using cloud cost analysis tools. Move infrequently accessed data to lower-cost tiers, delete unnecessary data, and commit to reserved capacity for predictable workloads.

Where is Big Data Storage Applied Across Industries?

Different sectors face unique storage challenges based on data types, volumes, and regulatory requirements.

E-commerce and Retail

In retail, big data storage enables large-scale personalization and real-time insights. Big data in the retail industry has transformed how companies understand customer behavior.

For instance, Walmart uses its Content Decision Platform to design personalized homepages and tailor shopping experiences across stores, mobile apps, and digital environments. By integrating vast amounts of customer, inventory, and behavioral data, retailers optimize pricing, product recommendations, and supply chain efficiency in real time. Retail data engineering plays a critical role in this transformation.

Healthcare

Big data storage in healthcare supports research, patient care, and drug development. Pfizer uses its Scientific Data Cloud to centralize massive research datasets and foster AI-driven innovation. With tools like VOX, researchers gain faster access to insights, reducing R&D time and costs.

This infrastructure, combined with the power of healthcare big data analytics, played a key role in Pfizer’s ability to launch 19 medicines and vaccines within 18 months, demonstrating how big data accelerates life sciences innovation.

Banking and Financial Services

In the financial sector, big data storage enables secure analytics for fraud detection, risk management, and personalized banking. JPMorgan Chase, for example, utilizes massive data repositories and AI simulators to analyze transaction patterns, detect anomalies, and prevent fraudulent activities in real time. These data-driven systems highlight the growing importance of data analytics in finance, improving security while enhancing customer trust and operational efficiency.

Entertainment and Media

The entertainment industry uses big data storage to enhance content personalization and viewer engagement. Netflix stores and analyzes petabytes of viewing data to recommend shows, optimize thumbnails, and predict audience preferences. Similarly, Spotify relies on large-scale data storage and analytics to generate customized playlists and music recommendations for its 246 million premium users boosting satisfaction and retention across platforms.

How Can Folio3 Help with Big Data Storage and Implementations?

Implementing an effective big data storage strategy demands expertise in infrastructure design, data engineering, and compliance. Folio3 Data Services provides complete, end-to-end support from architecture design to deployment, migration, and optimization helping businesses unlock the full potential of their data.

By combining technical expertise with platform-agnostic flexibility across Snowflake consulting, Databricks, AWS, and Azure, Folio3 helps organizations modernize data infrastructure, enhance analytics performance, and scale efficiently.

Custom Architectures

Folio3 tailors storage architectures to your business goals, data types, and regulatory requirements. Whether it’s a hybrid setup keeping sensitive data on-premises or a multi-cloud architecture to prevent vendor lock-in, our experts ensure the right balance between cost, scalability, and performance.

End-to-End Implementation

We manage the full implementation lifecycle, deploying cloud, on-premises, or hybrid infrastructure as part of a comprehensive big data implementation strategy.

Folio3 configures storage tiers, encryption, access control, and backup systems, ensuring smooth integration with your analytics and data platforms.

Data Migration and Modernization

Migrating from legacy systems to modern data platforms can be risky. Folio3 minimizes downtime through phased migration, integrity validation, and rollback plans. We also handle data warehouse to data lake migration, consolidating siloed systems into unified, high-performance cloud environments.

Scalability and Cost Optimization

To keep storage costs under control, Folio3 designs scalable systems with automated lifecycle policies, compression, deduplication, and right-sized storage tiers. Our cost optimization strategies, including reserved capacity and cloud discounts, help reduce expenses while maintaining reliability.

Security and Compliance

Folio3 implements enterprise-grade security measures encryption at rest and in transit, least-privilege access, audit logging, and regulatory compliance with standards like GDPR, HIPAA, and PCI-DSS.

Folio3 helps you optimize cloud and hybrid storage systems through automation, lifecycle policies, and cost-efficient scaling.

FAQs

What is big data storage and how does it work?

Big data storage is designed to manage massive, complex datasets using distributed architectures that scale horizontally for performance, redundancy, and fault tolerance.

What are the main challenges of big data storage management?

Key challenges in big data storage include managing data growth, optimizing costs, ensuring security and compliance, and maintaining performance across diverse workloads.

What types of big data storage solutions are available?

Common big data storage solutions include cloud object storage, data warehouses, distributed file systems, and NoSQL databases, each tailored to specific data and analytics needs.

How do companies choose the best big data storage strategy?

Companies choose big data storage strategies by assessing data volume, performance, security, cost, and integration needs, often adopting hybrid cloud architectures.

What industries benefit most from big data storage solutions?

Industries like healthcare, finance, e-commerce, IoT, and media use big data storage to manage large datasets, enhance analytics, and drive innovation.

What are the latest trends in big data storage technology?

Trends in big data storage include lakehouse architectures, intelligent tiering, edge computing, and cloud-native scalability for flexible, cost-efficient data management.

How secure is cloud-based big data storage?

Cloud-based big data storage offers strong encryption and compliance features but requires proper configuration and access control to prevent data breaches.

How much does big data storage cost for enterprises?

Big data storage costs depend on scale and typeNcloud storage averages $20–25 per terabyte monthly, while on-premises systems incur higher setup costs.

What is the difference between traditional storage and big data storage?

Traditional storage scales vertically and suits transactional workloads, while big data storage scales horizontally to handle vast, diverse datasets efficiently.

Can big data be stored in a relational database?

Relational databases struggle with big data scalability and flexibility, while modern big data storage solutions like Snowflake or Redshift handle large, diverse datasets efficiently.

Conclusion

Big data storage has evolved into a core enabler of analytics, AI, and business intelligence. Modern architectures cloud, on-premises, or hybrid allow organizations to balance scalability, cost, and compliance effectively. Success lies in choosing solutions tailored to specific workloads and growth needs. As data volumes surge, efficient storage becomes a strategic asset driving innovation and agility.

So, partner with Folio3 Data Services to design and implement secure, scalable, and cost-optimized big data storage solutions that turn your data into actionable insights and long-term business value.