Data engineering teams are under growing pressure to deliver faster, more accurate insights in the face of increasingly complex data environments. Yet most still rely on traditional, rule-based automation and manual monitoring approaches that are reactive, labor-intensive, and hard to scale with modern business demands.

Typical workflows require human intervention at nearly every stage: writing scripts, managing failures, tracking data quality issues, and reconfiguring pipelines as requirements change. This leads to bottlenecks, overnight alerts, and hours spent troubleshooting or rewriting logic just to keep pipelines operational.

Agentic AI introduces a transformational shift. Unlike conventional systems that follow static instructions, agentic AI operates with autonomous, goal-driven behavior. These systems assess context, make decisions, and take action to meet predefined objectives without needing constant human oversight.

In the context of data engineering, this intelligence redefines how teams manage pipelines, monitor quality, and optimize performance. Instead of scripting every scenario, engineers set high-level goals and constraints, letting the AI figure out the most efficient path forward.

The result is a move from reactive workflows to self-improving data systems. Some industry reports cite up to 60% reductions in pipeline maintenance and 35% faster insights from new data sources among organizations adopting agentic AI, marking a clear evolution in modern data infrastructure.

The Intersection of Agentic AI and Data Engineering

The convergence of agentic AI and data engineering creates opportunities for autonomous data infrastructure that adapts to changing conditions with far less human intervention.

Traditional data engineering relies on predetermined workflows where every decision point requires explicit programming. If a data source changes format, someone must update the transformation code. If query performance degrades, someone must identify bottlenecks and optimize accordingly. This reactive approach creates operational bottlenecks and limits scalability.

Agentic AI in data engineering operates differently. These systems understand data pipeline objectives and can adjust their behavior to meet those goals despite changing conditions. When a data source introduces new fields, agentic AI systems can evaluate whether those fields are relevant to downstream processes and automatically incorporate them into transformation logic.
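
The sketch below makes the relevance check concrete under one simplifying assumption: a new field counts as relevant if some downstream consumer already references it by name. The function `classify_new_fields` and the `downstream_refs` set are hypothetical. An agentic system would weigh richer context, such as documentation, query logs, and data profiles, before changing transformation logic.

```python
def classify_new_fields(record, known_fields, downstream_refs):
    """Split fields in `record` that are not in `known_fields`.

    Assumption (hypothetical heuristic): a new field is "relevant" if a
    downstream consumer already references it by name.
    """
    new_fields = sorted(set(record) - set(known_fields))
    return {
        # Referenced downstream: candidates for the transformation logic
        "incorporate": [f for f in new_fields if f in downstream_refs],
        # Not referenced anywhere yet: keep the raw value, flag for review
        "hold_for_review": [f for f in new_fields if f not in downstream_refs],
    }


# Usage: a source starts sending two extra fields
incoming = {"order_id": 1, "amount": 9.5, "currency": "EUR", "ui_theme": "dark"}
result = classify_new_fields(
    incoming,
    known_fields={"order_id", "amount"},
    downstream_refs={"amount", "currency"},
)
print(result)  # {'incorporate': ['currency'], 'hold_for_review': ['ui_theme']}
```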

The intelligence extends beyond simple rule execution to include reasoning about trade-offs, learning from past decisions, and optimizing for multiple objectives simultaneously. An agentic AI system might balance processing speed against cost efficiency while ensuring data quality standards, making real-time decisions that human operators would need hours to evaluate.
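
A multi-objective decision like this can be sketched as constrained scoring. Data quality acts as a hard floor, and runtime is traded against cost using weights. The `PlanOption` fields, the weights, and the numbers below are illustrative assumptions, not measurements from any platform.

```python
from dataclasses import dataclass


@dataclass
class PlanOption:
    name: str
    runtime_min: float    # expected wall-clock minutes
    cost_usd: float       # expected compute cost
    quality_score: float  # expected share of quality checks passing, 0..1


def choose_plan(options, w_speed=0.5, w_cost=0.5, min_quality=0.99):
    """Return the feasible option with the lowest weighted runtime/cost score,
    or None if no option meets the quality floor (a signal to escalate)."""
    feasible = [o for o in options if o.quality_score >= min_quality]
    if not feasible:
        return None
    # Normalize each objective by its maximum so the weights are comparable
    max_rt = max(o.runtime_min for o in feasible) or 1.0
    max_cost = max(o.cost_usd for o in feasible) or 1.0

    def score(o):
        return w_speed * o.runtime_min / max_rt + w_cost * o.cost_usd / max_cost

    return min(feasible, key=score)


options = [
    PlanOption("large-cluster", runtime_min=10, cost_usd=40, quality_score=0.995),
    PlanOption("small-cluster", runtime_min=30, cost_usd=12, quality_score=0.995),
    PlanOption("spot-instances", runtime_min=25, cost_usd=8, quality_score=0.97),
]
print(choose_plan(options).name)                           # small-cluster
print(choose_plan(options, w_speed=0.8, w_cost=0.2).name)  # large-cluster
```

The cheapest option is excluded because it misses the quality floor. Shifting the weights toward speed flips the choice to the larger cluster, which is the kind of trade-off an agent would re-evaluate as priorities change.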

This kind of autonomous decision-making highlights the growing importance of AI in data engineering, especially in complex environments where multiple systems interact and conditions evolve rapidly. Rather than relying on rigid automation that fails when assumptions shift, agentic AI systems adapt their behavior based on current conditions and accumulated experience.

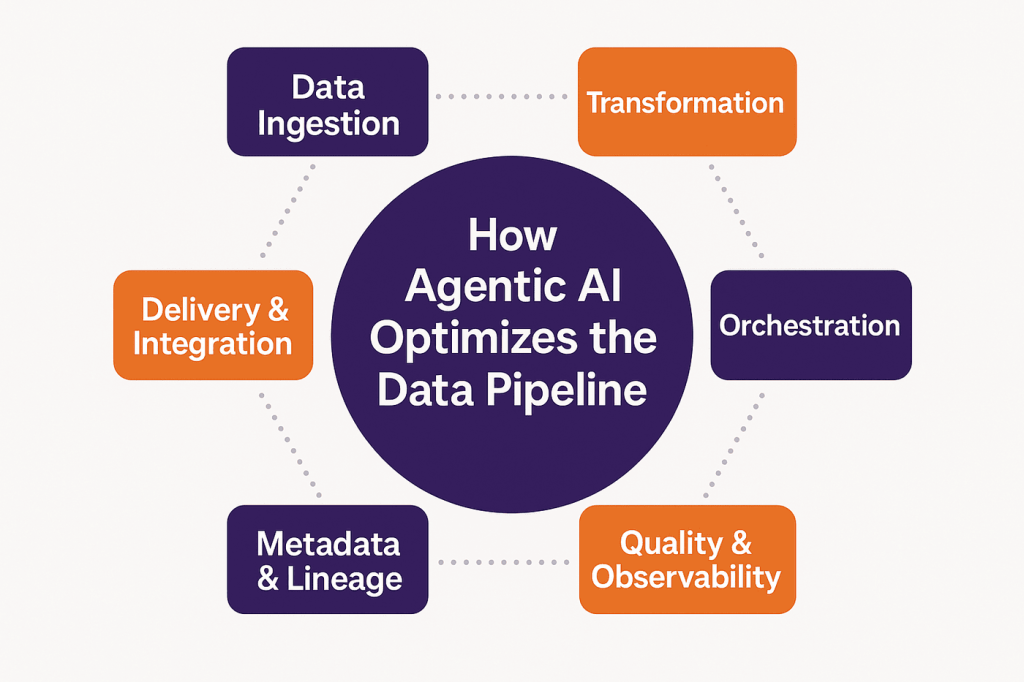

How Can Agentic AI Optimize Every Step of the Data Pipeline?

Agentic AI transforms each component of modern data pipelines through intelligent automation that goes beyond traditional rule-based approaches. Here’s how it optimizes every step of the data pipeline:

Data Ingestion

Agentic AI systems revolutionize data ingestion by automatically discovering, evaluating, and incorporating new data sources based on business objectives and data quality requirements.

Traditional ingestion processes require manual configuration for each new data source, defining schemas, setting up connection parameters, and creating error handling logic.

Agentic AI systems can analyze available data sources, assess their relevance to business goals, and automatically configure ingestion pipelines with appropriate quality checks and error handling, enabling a more adaptive and resilient data ingestion architecture.

These systems also adapt ingestion patterns based on source behavior. If an API starts returning data in a different format or at unexpected volumes, agentic AI can adjust ingestion logic automatically rather than failing and requiring human intervention.

Real-time optimization becomes possible as agentic AI systems monitor ingestion performance and adjust parallelization, batching, and retry strategies based on current system load and data source characteristics. These adaptive capabilities extend beyond simple ingestion to tasks like AI-based data extraction, ensuring structured, usable information even when formats or volumes shift unexpectedly.
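
One well-known way to adjust batching to source behavior is additive-increase/multiplicative-decrease (AIMD), the same idea TCP uses for congestion control. The batch grows gradually while calls succeed and is halved when the source throttles. The sketch assumes the ingestion loop can distinguish a throttled or timed-out call from a successful one. `AdaptiveBatcher` and its default limits are hypothetical.

```python
class AdaptiveBatcher:
    """AIMD batch sizing: add `step` after each success, halve on throttling,
    always staying within [min_size, max_size]."""

    def __init__(self, initial=100, step=50, min_size=10, max_size=5000):
        self.size = initial
        self.step = step
        self.min_size = min_size
        self.max_size = max_size

    def on_success(self):
        # Additive increase: probe for more throughput gradually
        self.size = min(self.max_size, self.size + self.step)

    def on_throttle(self):
        # Multiplicative decrease: back off quickly when the source pushes back
        self.size = max(self.min_size, self.size // 2)


# Usage: simulated outcomes of successive ingestion calls
batcher = AdaptiveBatcher()
sizes = []
for outcome in ["ok", "ok", "ok", "throttled", "ok"]:
    if outcome == "ok":
        batcher.on_success()
    else:
        batcher.on_throttle()
    sizes.append(batcher.size)
print(sizes)  # [150, 200, 250, 125, 175]
```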

Data Transformation

Intelligent transformation capabilities enable agentic AI systems to understand data semantics and automatically generate transformation logic that adapts to schema changes and business rule evolution.

Rather than hardcoding transformation rules, agentic AI systems can infer transformation requirements from examples, business logic documentation, and downstream usage patterns. These systems apply advanced data transformation techniques to ensure consistency and accuracy, even as source data structures evolve over time.

Complex transformation scenarios benefit significantly from agentic approaches. Multi-source data integration, where relationships between sources may change over time, becomes manageable as AI systems can reason about data relationships and maintain logical consistency across transformations.

Performance optimization happens continuously as agentic AI systems monitor transformation execution and automatically refactor logic to improve efficiency without changing output semantics.
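
Refactoring "without changing output semantics" implies a check before the new version replaces the old one. The minimal sketch below assumes transformations are Python functions over lists of dict rows. It runs both versions on a sample and compares the results, ignoring row and column order. Passing on a sample is evidence of equivalence, not proof, so a real agent would also rely on broader tests. All names here are hypothetical.

```python
def _normalize(rows):
    # Sort columns within each row, then sort rows, so that neither row order
    # nor column order affects the comparison (assumes comparable values).
    return sorted(tuple(sorted(row.items())) for row in rows)


def outputs_equivalent(original, candidate, sample_rows):
    """True if both transformations yield the same rows on the sample."""
    return _normalize(original(sample_rows)) == _normalize(candidate(sample_rows))


def original(rows):  # existing loop-based version
    out = []
    for r in rows:
        if r["amount"] > 0:
            out.append({"id": r["id"], "amount_cents": round(r["amount"] * 100)})
    return out


def candidate(rows):  # proposed refactor
    return [{"id": r["id"], "amount_cents": round(r["amount"] * 100)}
            for r in rows if r["amount"] > 0]


def buggy(rows):  # refactor that silently changes the filter
    return [{"id": r["id"], "amount_cents": round(r["amount"] * 100)}
            for r in rows if r["amount"] >= 0]


sample = [{"id": 1, "amount": 2.5}, {"id": 2, "amount": 0.0}, {"id": 3, "amount": -1.0}]
print(outputs_equivalent(original, candidate, sample))  # True
print(outputs_equivalent(original, buggy, sample))      # False
```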

Data Orchestration and Workflow Management

Agentic AI transforms workflow orchestration from static scheduling to dynamic, intelligent resource allocation and execution optimization.

Traditional orchestration tools execute predefined workflows according to fixed schedules or simple trigger conditions. Agentic AI systems can analyze current system load, data freshness requirements, and downstream dependencies to optimize execution timing and resource allocation dynamically.

This shift reflects a broader evolution in data engineering and data analytics, where intelligence is embedded into orchestration layers to ensure responsiveness and alignment with real-time business needs.

Dependency management becomes intelligent as AI systems understand the business impact of different data assets and can prioritize workflows accordingly. Critical business reports might get priority over experimental analytics during peak processing periods.
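
Priority-aware dependency handling can be sketched as a topological sort (Kahn's algorithm) that uses a priority queue to decide which ready job runs next. In this sketch the priorities are hand-assigned numbers, where lower means more business-critical. An agentic system would presumably derive them from business impact. Job names are hypothetical, and every dependency must also appear as a key in `deps`.

```python
import heapq


def schedule(deps, priority):
    """Kahn's topological sort; among ready jobs, the lowest priority number runs first.

    deps: job -> set of jobs it depends on (every dependency must also be a key).
    priority: job -> int, lower = more business-critical (assumed, hand-assigned).
    """
    remaining = {job: set(d) for job, d in deps.items()}
    dependents = {job: [] for job in deps}
    for job, ds in deps.items():
        for d in ds:
            dependents[d].append(job)

    ready = [(priority[j], j) for j, ds in remaining.items() if not ds]
    heapq.heapify(ready)
    order = []
    while ready:
        _, job = heapq.heappop(ready)
        order.append(job)
        for child in dependents[job]:
            remaining[child].discard(job)
            if not remaining[child]:
                heapq.heappush(ready, (priority[child], child))

    if len(order) != len(deps):
        raise ValueError("dependency cycle detected")
    return order


deps = {
    "raw_orders": set(),
    "raw_events": set(),
    "exec_revenue_report": {"raw_orders"},
    "experimental_clickstream_model": {"raw_events"},
}
priority = {"raw_orders": 0, "raw_events": 3,
            "exec_revenue_report": 0, "experimental_clickstream_model": 5}
order = schedule(deps, priority)
print(order)
# ['raw_orders', 'exec_revenue_report', 'raw_events', 'experimental_clickstream_model']
```

The executive report runs as soon as its input is ready, ahead of the raw event load that only feeds experimental work.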

Failure recovery evolves from simple retry logic to intelligent problem-solving. Agentic AI systems can analyze failure patterns, identify root causes, and implement fixes automatically or escalate to human operators with detailed context when automatic resolution isn’t possible.
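
The escalation path can be sketched as follows. Only errors assumed to be transient are retried, with exponential backoff. Everything else goes to a human along with the attempt history. Treating `TimeoutError` and `ConnectionError` as transient is an assumption for illustration, and the root-cause reasoning an agent would add on top is not shown.

```python
import time

# Assumption for illustration: these error types are safe to retry.
TRANSIENT_ERRORS = (TimeoutError, ConnectionError)


def run_with_recovery(task, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Run `task`; retry transient errors with exponential backoff.

    Returns ("ok", result) on success, or ("escalate", context) with the
    attempt history so a human operator does not start from scratch.
    """
    history = []
    for attempt in range(1, max_attempts + 1):
        try:
            return "ok", task()
        except TRANSIENT_ERRORS as exc:
            history.append(f"attempt {attempt}: {type(exc).__name__}: {exc}")
            if attempt < max_attempts:
                sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
        except Exception as exc:
            # Anything else (bad schema, missing column, ...) is not retried
            history.append(f"attempt {attempt}: {type(exc).__name__}: {exc}")
            return "escalate", {"reason": "non-transient error", "history": history}
    return "escalate", {"reason": "retries exhausted", "history": history}


# Usage: a source that times out twice, then succeeds
calls = {"n": 0}


def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("source slow")
    return 42


def broken_transform():
    raise KeyError("customer_id")


def no_wait(seconds):
    pass  # skip real sleeping in the example


result = run_with_recovery(flaky_extract, sleep=no_wait)
print(result)  # ('ok', 42)
status, context = run_with_recovery(broken_transform, sleep=no_wait)
print(status, context["reason"])  # escalate non-transient error
```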

Data Quality & Observability

Autonomous quality monitoring and observability create self-healing data systems that identify and resolve issues before they impact downstream processes.

Agentic AI systems learn standard data patterns and automatically detect anomalies that might indicate quality issues. Rather than relying on predefined quality rules that become outdated, these systems adapt their understanding of “normal” as data patterns evolve.
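
Adapting the definition of "normal" can be illustrated with an exponentially weighted moving mean and variance. Each new value nudges the baseline, so gradual drift is absorbed while sudden jumps stand out. This is a simple statistical stand-in for the learned models the text describes. `alpha`, `threshold`, and `warmup` are assumed tuning parameters, and the row counts are made up.

```python
import math


class AdaptiveBaseline:
    """Flag values far from an exponentially weighted moving mean/variance."""

    def __init__(self, alpha=0.1, threshold=3.0, warmup=5):
        self.alpha = alpha          # how quickly the baseline follows new data
        self.threshold = threshold  # flag if |x - mean| > threshold * std
        self.warmup = warmup        # observations before flagging starts
        self.n = 0
        self.mean = 0.0
        self.var = 0.0

    def observe(self, x):
        self.n += 1
        if self.n == 1:
            self.mean = x
            return False
        diff = x - self.mean
        std = math.sqrt(self.var)
        is_anomaly = (self.n > self.warmup and std > 0
                      and abs(diff) > self.threshold * std)
        if not is_anomaly:
            # Incremental EWMA update of mean and variance
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
        return is_anomaly


# Usage: daily row counts for a table (made-up numbers)
baseline = AdaptiveBaseline()
row_counts = [1000, 1010, 990, 1005, 995, 1002, 998, 5000]
flags = [baseline.observe(c) for c in row_counts]
print(flags)  # [False, False, False, False, False, False, False, True]
```

Flagged values are not folded into the baseline, so one bad load does not distort it. The trade-off is that a genuine, permanent level shift keeps being flagged until the baseline is reset.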

Root cause analysis becomes automated as AI systems trace quality issues through complex data lineages, identifying the specific transformation or source change that introduced problems. This capability dramatically reduces mean time to resolution for data quality incidents.
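
Tracing an issue through lineage can be sketched as an upstream walk that follows only failing assets. The walk stops at the earliest failing assets whose inputs are healthy. The sketch assumes lineage is available as a map from each asset to its direct upstream parents, and that quality checks have already marked which assets fail. Asset names are hypothetical.

```python
def root_cause_candidates(upstream, failing, start):
    """Walk upstream from `start` through failing assets only; return the
    failing assets none of whose parents fail (the earliest failures).

    upstream: asset -> list of direct parent assets (assumed lineage format).
    failing: set of assets whose quality checks currently fail.
    """
    seen, stack, roots = set(), [start], []
    while stack:
        node = stack.pop()
        if node in seen or node not in failing:
            continue
        seen.add(node)
        bad_parents = [p for p in upstream.get(node, []) if p in failing]
        if not bad_parents:
            roots.append(node)  # failure starts here: inputs look healthy
        stack.extend(bad_parents)
    return sorted(roots)


upstream = {
    "revenue_dashboard": ["revenue_daily"],
    "revenue_daily": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
}
failing = {"revenue_dashboard", "revenue_daily", "orders_clean"}
print(root_cause_candidates(upstream, failing, "revenue_dashboard"))  # ['orders_clean']
```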

Proactive quality improvement emerges as agentic AI systems identify patterns that predict future quality issues and automatically implement preventive measures or alert teams before problems occur. These intelligent capabilities are increasingly being embedded into modern data engineering services to enhance reliability and trust in data pipelines.

Metadata Management and Lineage

Intelligent metadata management creates living documentation that stays current automatically while providing semantic understanding of data relationships.

Traditional metadata management requires manual effort to document data sources, transformations, and business meanings. Agentic AI systems can automatically discover metadata by analyzing data patterns, transformation logic, and usage contexts.
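
As a small illustration of discovering metadata from data patterns, the sketch below tags a column when most of its non-empty sample values match a known pattern. Regular expressions are a deliberately simple stand-in for the learned semantic understanding the text describes. The pattern set and the 90% match threshold are assumptions.

```python
import re

# Assumed pattern set; a real system would learn or configure many more.
PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "iso_date": re.compile(r"^\d{4}-\d{2}-\d{2}$"),
    "integer": re.compile(r"^-?\d+$"),
}


def infer_tags(values, min_match=0.9):
    """Return tags whose pattern matches at least `min_match` of non-empty values."""
    present = [str(v).strip() for v in values if v is not None and str(v).strip() != ""]
    if not present:
        return []
    tags = []
    for tag, pattern in PATTERNS.items():
        hits = sum(1 for v in present if pattern.match(v))
        if hits / len(present) >= min_match:
            tags.append(tag)
    return tags


print(infer_tags(["a@example.com", "b@example.org", None]))  # ['email']
print(infer_tags(["2024-01-31", "2024-02-29"]))              # ['iso_date']
print(infer_tags(["12", "7", "n/a"]))                        # []
```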

Semantic understanding develops as AI systems learn business terminology and can automatically tag data with relevant business context. This capability makes data discovery more intuitive for business users who don’t need to understand technical schemas.

Impact analysis becomes comprehensive as agentic AI systems maintain detailed lineage information and can predict downstream effects of proposed changes before implementation.
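
Impact analysis over lineage reduces to a graph traversal in the downstream direction: start from the asset you plan to change and collect everything that consumes it. Below is a minimal breadth-first sketch. It assumes lineage is stored as a map from each asset to its direct consumers, and the asset names are hypothetical.

```python
from collections import deque


def downstream_impact(downstream, changed):
    """Breadth-first search over lineage: every asset affected by changing
    `changed`, mapped to its distance in hops."""
    distance = {}
    seen = {changed}
    queue = deque([(changed, 0)])
    while queue:
        node, hops = queue.popleft()
        for child in downstream.get(node, []):
            if child not in seen:
                seen.add(child)
                distance[child] = hops + 1
                queue.append((child, hops + 1))
    return distance


downstream = {
    "orders_raw": ["orders_clean"],
    "orders_clean": ["revenue_daily", "churn_features"],
    "revenue_daily": ["revenue_dashboard"],
    "churn_features": ["churn_model"],
}
print(downstream_impact(downstream, "orders_raw"))
# {'orders_clean': 1, 'revenue_daily': 2, 'churn_features': 2,
#  'revenue_dashboard': 3, 'churn_model': 3}
```

The hop distance gives a rough review order: direct consumers first, then the dashboards and models further down.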

Data Delivery and Integration

Autonomous delivery systems optimize data access patterns and automatically create integration points based on consumption requirements.

Agentic AI systems can analyze how different teams and applications consume data, then automatically optimize storage formats, indexing strategies, and caching policies to improve performance and reduce costs.
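
The observe-then-adjust loop for delivery can be sketched with a simple access-log heuristic: recommend caching for datasets that are read often and rarely written. The log format, thresholds, and dataset names are assumptions. An agent would likely also weigh cost and latency targets, and would apply changes only within agreed limits.

```python
from collections import Counter


def recommend_cache(access_log, min_reads=3, max_write_ratio=0.2):
    """access_log: iterable of (dataset, op) pairs, op being "read" or "write".
    Recommend caching datasets that are read often and rarely written."""
    reads, writes = Counter(), Counter()
    for dataset, op in access_log:
        if op == "read":
            reads[dataset] += 1
        else:
            writes[dataset] += 1
    recommended = []
    for dataset, n_reads in reads.items():
        write_ratio = writes[dataset] / (n_reads + writes[dataset])
        if n_reads >= min_reads and write_ratio <= max_write_ratio:
            recommended.append(dataset)
    return sorted(recommended)


log = (
    [("sales_summary", "read")] * 5 + [("sales_summary", "write")]
    + [("events_raw", "read")] * 4 + [("events_raw", "write")] * 4
    + [("audit_tmp", "read")]
)
print(recommend_cache(log))  # ['sales_summary']
```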

These advanced capabilities are reshaping how organizations approach data integration consulting, as businesses now require strategic guidance to align AI-driven delivery systems with broader integration goals.

API generation becomes automatic as AI systems create appropriate data access interfaces based on consumption patterns and security requirements. Teams get the data access methods they need without manual API development.

Performance optimization happens continuously as agentic AI systems monitor usage patterns and automatically adjust delivery mechanisms to maintain optimal performance as consumption patterns change.

Benefits of Using Agentic AI in Data Engineering

The business impact of agentic AI in data engineering extends across operational efficiency, cost management, and strategic capability development.

Increased Agility and Time-to-Insight

Agentic AI systems dramatically reduce the time required to incorporate new data sources and generate business insights. Traditional approaches might require weeks of development work to onboard new data sources, while agentic AI systems can automatically configure ingestion, transformation, and integration pipelines.

Business teams gain faster access to data-driven insights because agentic AI systems can understand business requirements expressed in natural language and automatically implement appropriate data processing workflows.

These advancements often serve as a foundation for broader generative AI implementation, enabling enterprises to further enhance automation, insight generation, and intelligent data interaction.

Experimentation becomes more feasible as data scientists and analysts can quickly test hypotheses with new data combinations without waiting for engineering resources to create custom pipelines.

Lower Operational Costs and Fewer Errors

Autonomous operation reduces the human effort required for routine data pipeline maintenance and monitoring. Teams can focus on strategic initiatives rather than responding to operational issues.

Error reduction occurs because agentic AI systems can catch and resolve many issues automatically, preventing cascading failures that would require extensive manual intervention in traditional systems.

Resource optimization becomes automatic as AI systems continuously tune processing resources based on current workloads and performance requirements, reducing cloud computing costs without sacrificing performance.

For cloud-native data platforms, this includes Snowflake cost optimization, where intelligent resource allocation ensures efficient usage of compute credits and storage to minimize expenses.

Enhanced Scalability and Flexibility

Agentic AI systems scale more effectively than traditional approaches because they can automatically adapt to changing data volumes and processing requirements without manual reconfiguration.

Multi-cloud and hybrid deployments become manageable as AI systems can optimize data placement and processing across different environments based on cost, performance, and compliance requirements.

Business requirement changes get accommodated more quickly because agentic AI systems can understand new requirements and automatically implement necessary pipeline modifications.

This adaptability is especially valuable for real-time data warehousing scenarios, where continuous updates and fast response times demand agile, self-adjusting data pipelines.

Stronger Data Trust, Compliance, and Visibility

Automated compliance monitoring helps ensure data processing adheres to regulatory requirements with far less manual oversight. Agentic AI systems can understand compliance rules and automatically implement necessary controls and audit trails.

By integrating with AI-powered enterprise search, organizations can instantly locate compliance reports, audit records, and data lineage documentation across complex systems, improving transparency and regulatory readiness.

Data lineage becomes comprehensive and accurate because AI systems automatically track all data transformations and dependencies, providing complete audit trails for compliance and troubleshooting.

Quality assurance improves because agentic AI systems provide continuous monitoring and can detect subtle quality degradation that human operators might miss.

Practical Challenges and Solutions in Agentic AI Adoption

Understanding these implementation challenges helps organizations prepare appropriate strategies and set realistic expectations for agentic AI adoption:

Trust, Transparency, and Control

Building trust in autonomous systems requires transparency about decision-making processes and maintaining appropriate human oversight capabilities.

Organizations need clear visibility into how agentic AI systems make decisions, especially for critical data processes that impact business operations. Explainable AI capabilities become essential for maintaining confidence in autonomous operations.

Control mechanisms must allow human operators to intervene when necessary while preserving the efficiency benefits of autonomous operation. This balance requires careful design of override capabilities and escalation procedures.
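
One way to express this balance in code is an approval gate that every proposed agent action passes through. It combines three checks: a global pause switch for human override, mandatory approval for critical assets, and a confidence floor for everything else. The `ProposedAction` type, the asset names, and the 0.9 threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    description: str
    target: str        # asset the action would modify
    confidence: float  # agent's self-reported confidence, 0..1


def route_action(action, critical_assets, min_confidence=0.9, paused=False):
    """Return "blocked", "require_approval", or "auto_apply"."""
    if paused:
        return "blocked"           # human override: all agent changes halted
    if action.target in critical_assets:
        return "require_approval"  # critical data always gets a human reviewer
    if action.confidence < min_confidence:
        return "require_approval"  # low confidence: escalate with context
    return "auto_apply"


critical = {"finance_ledger"}
print(route_action(ProposedAction("resize warehouse", "adhoc_sandbox", 0.95), critical))
# auto_apply
print(route_action(ProposedAction("drop unused column", "finance_ledger", 0.99), critical))
# require_approval
```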

Gradual adoption strategies help build organizational confidence by starting with less critical processes and expanding agentic AI capabilities as teams gain experience and trust.

These efforts are particularly important in environments affected by data lake challenges, where issues like schema drift, poor metadata quality, and lack of governance have historically undermined data reliability.

Integration with Existing Tools and Platforms

Agentic AI systems must work effectively with existing data infrastructure rather than requiring complete technology stack replacement.

API compatibility ensures agentic AI systems can interact with current data storage, processing, and visualization tools without disrupting established workflows.

For many organizations using cloud-native architectures, Snowflake consulting services can help align agentic AI implementation with their current data platforms, ensuring optimized integration and minimal disruption.

Migration planning becomes critical for organizations with substantial investments in existing data infrastructure. Phased approaches that gradually introduce agentic capabilities while maintaining current systems reduce implementation risk.

Skills development helps existing teams adapt to working alongside agentic AI systems rather than replacing human expertise entirely.

Cost and Complexity of Adoption

Initial implementation requires significant investment in both technology and organizational change management.

Technology costs include AI platform licensing, compute resources for AI model execution, and integration development. Organizations need realistic budgeting that accounts for these ongoing operational expenses.

Complexity management requires dedicated expertise in both AI systems and data engineering to ensure successful implementation and ongoing optimization. For instance, pet care companies building data engineering solutions often need tailored integration approaches to unify IoT health trackers, veterinary data, and customer engagement systems, which shows how domain-specific expertise reduces risk and improves adoption outcomes.

Teams must also evaluate existing data integration techniques to ensure compatibility with agentic AI systems and identify where automation can streamline or replace manual processes.

Return on investment planning helps organizations set appropriate expectations and measure success throughout the adoption process.

Governance and Auditability

Autonomous systems require new governance frameworks that maintain accountability while enabling AI-driven decision-making.

A modern data governance strategy becomes essential to ensure AI systems operate within acceptable risk boundaries and comply with regulatory requirements.

Audit trail requirements become more complex when AI systems make autonomous decisions. Organizations need comprehensive logging and decision tracking capabilities to support compliance and troubleshooting.
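
Decision tracking can start as an append-only JSON Lines log with one record per autonomous action, capturing what was done, why, and on which inputs. The field names below are an assumed schema, not a standard. In production the stream would typically point to durable, access-controlled storage.

```python
import io
import json
from datetime import datetime, timezone


def log_decision(stream, agent, action, rationale, inputs, outcome):
    """Append one autonomous decision to a JSON Lines audit log and return it."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),  # when the decision was made
        "agent": agent,          # which agent acted
        "action": action,        # what it did
        "rationale": rationale,  # why, in human-readable form
        "inputs": inputs,        # evidence the decision was based on
        "outcome": outcome,      # e.g. "applied", "escalated", "rolled_back"
    }
    stream.write(json.dumps(record, sort_keys=True) + "\n")
    return record


# Usage: an in-memory stream stands in for durable audit storage
audit = io.StringIO()
log_decision(
    audit,
    agent="quality-agent",
    action="quarantine_partition",
    rationale="null rate in customer_id far above its usual level",
    inputs={"table": "orders_clean", "partition": "dt=2024-05-01"},
    outcome="applied",
)
print(audit.getvalue())
```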

Policy enforcement must adapt to autonomous operation while maintaining appropriate controls over data access, processing, and distribution.

Risk management frameworks need updates to address the unique risks associated with autonomous AI systems while capturing the benefits of reduced human error and improved consistency.

Agentic AI Adoption: Comparison of Challenges and Solutions

| Challenge Area | Key Challenges | Solutions |
|---|---|---|
| Trust, Transparency, and Control | Lack of explainability in AI decisions; risk of loss of human oversight; low confidence in autonomous processes | Implement explainable AI for transparency in decision-making; design override mechanisms and escalation protocols; use gradual adoption in low-risk processes to build organizational trust |
| Integration with Existing Systems | Disruption risk due to incompatibility with current tools; inflexible legacy systems and siloed architectures | Ensure API compatibility for seamless integration; use phased implementation to minimize disruption; leverage services (e.g., Snowflake consulting) to align agentic AI with cloud-native architectures |
| Cost and Complexity of Adoption | High upfront technology and operational costs; need for specialized skills; difficulty in identifying automation opportunities | Plan for realistic budgeting that includes ongoing costs; invest in skills development for existing teams; analyze current integration processes to identify areas for agentic automation and optimization |
| Governance and Auditability | Lack of accountability in autonomous decisions; complex audit trail requirements; legacy governance frameworks not designed for AI | Develop a modern governance strategy that includes AI oversight; build comprehensive logging and tracking systems; update risk management and policy enforcement to align with agentic AI operations |

What Does the Future Hold for Agentic AI in Data Engineering?

The evolution of agentic AI capabilities will reshape data engineering practices and organizational structures over the next decade. The future of agentic AI in data engineering might hold:

Autonomous Data Platforms

Future data platforms will operate with minimal human intervention, automatically scaling resources, optimizing performance, and adapting to changing business requirements.

These advancements will build on foundational data engineering best practices, such as modular architecture, observability, and automated workflows, to support even greater levels of autonomy and resilience.

Self-healing capabilities will extend beyond simple error recovery to include predictive maintenance, automatic performance optimization, and proactive capacity planning.

Business alignment will improve as autonomous platforms learn organizational priorities and automatically optimize data processing to support business objectives without explicit configuration.

Multi-Agent Collaboration

Complex data environments will employ multiple specialized AI agents that collaborate to manage different aspects of data infrastructure.

Specialized agents might focus on specific functions like quality monitoring, performance optimization, or compliance management while coordinating their activities to achieve overall system objectives.

These agents can also support a robust data migration strategy by intelligently coordinating the movement of data across systems, ensuring consistency, minimizing downtime, and adapting to evolving schema and platform requirements.

Negotiation capabilities will enable agents to resolve conflicts and optimize trade-offs between competing objectives, such as cost minimization and performance maximization.

Agentic AI in MLOps

Machine learning operations will benefit from agentic AI systems that automatically manage model training, deployment, and monitoring workflows.

Continuous learning will enable AI systems to improve their data engineering capabilities based on experience, creating a self-improving data infrastructure.

Model lifecycle management will become autonomous as AI systems handle feature engineering, model selection, and deployment optimization based on performance metrics and business requirements.

These intelligent workflows also support AI customer analytics use cases, where real-time insights, behavioral predictions, and personalized experiences require seamless integration between data pipelines and machine learning models.

Redefining Data Engineering

The role of data engineers will evolve from hands-on pipeline development to strategic architecture and AI system governance.

Human expertise will focus on defining objectives, setting constraints, and ensuring AI systems align with business goals rather than implementing detailed technical solutions.

As agentic AI takes over operational tasks, engineers will play a larger role in designing robust data integration architecture that supports adaptability, scalability, and alignment with evolving business needs.

Strategic planning will become more critical as organizations leverage autonomous capabilities to explore new data opportunities and business models that were previously unfeasible with traditional approaches.

FAQs

How is Agentic AI different from traditional automation or AI tools?

Traditional automation follows predefined rules and fails when conditions change beyond programmed parameters. Agentic AI systems reason about new situations, make autonomous decisions, and adapt their behavior without human reprogramming.

Which parts of the data pipeline can Agentic AI optimize?

Agentic AI optimizes every pipeline component from data discovery through delivery, including ingestion, transformation, orchestration, quality monitoring, and metadata management. The biggest impact occurs in areas requiring human decision-making, like schema changes and performance optimization.

What are some real-world examples of Agentic AI in action?

Netflix uses agentic AI to automatically optimize data workflows based on viewing patterns, while financial firms deploy autonomous fraud detection that adapts to new attack patterns. E-commerce platforms automatically integrate supplier data sources as formats change.

How can organizations start adopting Agentic AI?

Start with pilot projects in non-critical areas like data quality monitoring to demonstrate value without risk. Establish governance frameworks and focus on use cases where manual processes create bottlenecks or traditional automation frequently fails.

Conclusion

Agentic AI in data engineering transforms reactive data management into proactive, intelligent systems that adapt automatically. Organizations implementing autonomous data infrastructure report significant operational efficiency gains while reducing costs and improving data quality.

Early adopters gain competitive advantages through self-improving systems that handle routine operations while humans focus on strategic initiatives. The shift from manual to autonomous data operations is inevitable for enterprises serious about data-driven strategies.

Companies exploring agentic AI data engineering solutions can benefit from expert guidance to navigate implementation challenges and maximize ROI.

Our specialized data engineering team helps organizations design and deploy autonomous data systems that align with business objectives while ensuring scalability and governance. Through Folio3 Data Services, businesses can accelerate their adoption of agentic AI, streamline data operations, and build future-ready architectures that balance automation with compliance and control.