The modern enterprise data landscape has crystallized around two dominant platforms: Snowflake and Databricks. While both promise to transform how organizations handle data, they take fundamentally different approaches to solving enterprise data challenges. This comparison is crucial because selecting the wrong platform can result in millions of dollars in migration expenses, limit your analytics capabilities, and create technical debt that persists for years.

This guide examines both platforms through an enterprise lens, comparing their architectures, capabilities, costs, and real-world performance to help you make an informed decision for your organization’s data strategy.

What is Databricks?

Databricks represents the evolution of big data processing, built by the original creators of Apache Spark to address the fragmentation between data engineering, data science, and analytics teams.

Core Features and Architecture

The platform centers around the concept of a “lakehouse”, combining the flexibility and cost-effectiveness of data lakes with the performance and reliability of data warehouses. This unified approach not only simplifies infrastructure but also supports a more effective data lake strategy, eliminating the need to maintain separate systems for different types of data processing.

Collaborative notebooks form the heart of the Databricks experience, allowing teams to work together using Python, R, Scala, and SQL within a single environment. These notebooks integrate directly with version control systems and support real-time collaboration, breaking down traditional silos between technical teams.

MLflow, Databricks’ open-source machine learning lifecycle management platform, handles everything from experiment tracking to model deployment and monitoring. This integrated approach eliminates the complexity of managing separate tools for different stages of the ML pipeline.

Delta Lake provides ACID transactions, data versioning, and schema enforcement for data lakes, addressing traditional reliability issues that have plagued large-scale data processing. This technology ensures data quality and enables time travel queries for auditing and debugging.
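
To make the ideas of atomic commits, schema enforcement, and time travel concrete, here is a minimal conceptual sketch in plain Python. It is a toy model, not Delta Lake's implementation or API; the `VersionedTable` class and its methods are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class VersionedTable:
    """Toy append-only commit log; NOT Delta Lake's real implementation."""
    schema: dict  # column name -> expected Python type
    _commits: list = field(default_factory=list)  # one list of rows per commit

    def commit(self, rows):
        """Validate every row, then append the batch as one atomic commit."""
        rows = list(rows)
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"schema mismatch: {sorted(row)}")
            for col, typ in self.schema.items():
                if not isinstance(row[col], typ):
                    raise TypeError(f"{col} must be {typ.__name__}")
        # Nothing becomes visible until all rows pass validation,
        # so a rejected batch leaves the table unchanged.
        self._commits.append(rows)
        return len(self._commits) - 1  # version number of this commit

    def read(self, version=None):
        """Return rows as of a version (the latest when version is None)."""
        if version is None:
            version = len(self._commits) - 1
        elif not 0 <= version < len(self._commits):
            raise IndexError(f"unknown version {version}")
        return [row for batch in self._commits[: version + 1] for row in batch]


orders = VersionedTable(schema={"order_id": int, "amount": float})
v0 = orders.commit([{"order_id": 1, "amount": 9.5}])
v1 = orders.commit([{"order_id": 2, "amount": 20.0}])
latest = orders.read()            # both rows
as_of_v0 = orders.read(version=0)  # only the first commit ("time travel")
```

Reading an older version simply replays the log up to that commit, which is the intuition behind auditing and debugging with time travel queries.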

Primary Use Cases

Organizations typically deploy Databricks for advanced analytics use cases that require processing large volumes of diverse data types. Real-time streaming analytics, complex ETL processes, and machine learning model development represent the platform’s sweet spot.

The platform excels in scenarios requiring real-time decision-making, such as fraud detection systems that must analyze transactions within milliseconds or recommendation engines that personalize content for millions of users simultaneously.

Data engineering teams utilize Databricks to construct sophisticated data pipelines that can handle both structured and unstructured data, ranging from traditional database records to sensor data, logs, and multimedia files.

Key Benefits

Databricks offers a unified and collaborative environment that simplifies data operations, enhances scalability, and accelerates innovation. Here are the top benefits of using the platform:

- Reduced Operational Complexity: A single, unified platform eliminates the need to move data between different systems. Teams can process raw data, apply transformations, build ML models, and generate insights all in one place.

- Auto-Scaling Capabilities: Databricks automatically adjusts compute resources based on workload requirements, ensuring optimized performance and cost-efficiency. It can seamlessly scale from a single node to clusters with hundreds of nodes without manual effort.

- Collaborative Environment: The platform fosters real-time collaboration among data scientists, engineers, and analysts, allowing them to share code, insights, and results. This reduces time-to-insight and drives faster innovation.

What is Snowflake?

Snowflake reimagined the data warehouse for the cloud era, building a platform specifically designed to handle the scale, performance, and concurrency demands of modern enterprises.

Core Features and Architecture

At the heart of the platform is the Snowflake data architecture, which separates storage, compute, and services into independent layers. This separation allows organizations to scale each component independently, optimizing for their specific workload patterns and cost requirements.

Among the most valuable Snowflake features are its automatic optimization capabilities, which handle query performance tuning, data compression, and resource allocation without manual intervention. Advanced features, such as automatic clustering, materialized views, and result caching, work behind the scenes to accelerate query performance.

A multi-cluster shared data architecture enables multiple teams to run concurrent workloads without interfering with one another. Marketing teams can run their campaign analysis while finance processes quarterly reports, each using appropriately sized compute resources.

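Zero-copy cloning enables organizations to create instant copies of databases, schemas, or tables without duplicating the underlying data. A clone initially shares storage with its source, so additional storage costs arise only as the clone and the source diverge. This capability allows for efficient testing, development, and data sharing workflows.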
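
The reason a clone can be created without copying data is easiest to see in a small copy-on-write model. The sketch below is a simplified illustration in plain Python, assuming tables are built from immutable partitions; the `Table` class and `physical_partitions` helper are hypothetical and do not reflect Snowflake's internals.

```python
class Table:
    """Toy table built from immutable partitions that are shared by reference."""

    def __init__(self, partitions=()):
        # A tuple of partitions; each partition is an immutable tuple of rows.
        self.partitions = tuple(partitions)

    def clone(self):
        # Copies only the references to partitions, never the rows themselves.
        return Table(self.partitions)

    def insert(self, rows):
        # New data lands in a new partition; existing partitions are untouched.
        self.partitions += (tuple(rows),)


def physical_partitions(*tables):
    """Count the distinct partition objects stored across the given tables."""
    return len({id(p) for t in tables for p in t.partitions})


prod = Table([(1, 2, 3), (4, 5, 6)])
dev = prod.clone()
shared_before = physical_partitions(prod, dev)  # clone adds no new partitions
dev.insert([7, 8])
shared_after = physical_partitions(prod, dev)   # only the new partition is added
```

In this model the clone costs nothing until `dev` receives its own writes, and the source table never sees those changes.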

Primary Use Cases

Snowflake excels as the central data warehouse for enterprise analytics, supporting traditional business intelligence use cases alongside modern analytics requirements. The platform supports high-concurrency environments, where hundreds or thousands of users require simultaneous access to data.

Data sharing represents a unique strength, enabling organizations to securely exchange data with partners, customers, or subsidiaries without requiring data duplication or movement. This capability allows for new business models and monetization opportunities.

The platform also plays a critical role in enterprise data integration, serving as the foundation for unifying data across departments and systems. By providing business users with direct access through familiar SQL interfaces and seamless integration with popular BI tools, Snowflake enables truly self-service analytics initiatives.

Core Advantages

Snowflake simplifies data management while offering cost efficiency and accessibility, making it a strong choice for modern businesses. Key advantages include:

- Ease of Use: With minimal setup and maintenance, Snowflake stands out compared to traditional data lakes and data warehouses. It automatically manages infrastructure, optimization, and scaling, reducing operational overhead.

- Cost Efficiency: Per-second billing (with a 60-second minimum each time a warehouse resumes) and automatic suspension mean organizations pay mainly for active compute usage. This keeps idle-time charges low and makes costs more predictable.

- SQL-First Approach: Snowflake’s SQL-based design makes it accessible to analysts and business users familiar with SQL, thereby minimizing training needs and accelerating adoption across teams.

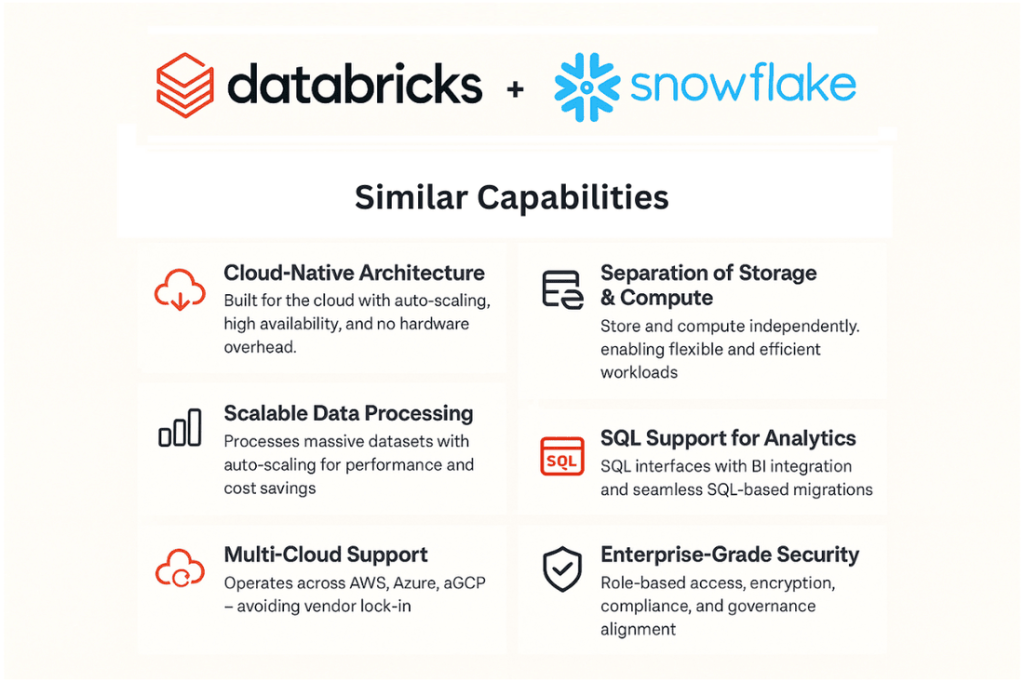

Key Similarities Between Databricks and Snowflake

Despite their different approaches, both platforms share fundamental characteristics that reflect modern enterprise requirements, such as:

1. Cloud-Native Architecture

Both platforms were built specifically for cloud environments, providing automatic scaling, high availability, and global deployment capabilities. This cloud-native design eliminates the infrastructure management overhead associated with traditional data platforms while ensuring a scalable infrastructure that grows seamlessly with business needs.

Neither platform requires upfront hardware investments or capacity planning. Organizations can start with minimal resources and scale to petabytes of data without significant architectural changes.

2. Scalable Data Processing Capabilities

Both platforms are built to scale horizontally across massive datasets. Whether processing terabytes or petabytes of data, both can automatically distribute workloads across multiple compute resources to maintain performance.

Auto-scaling capabilities adjust resources based on demand, ensuring consistent performance during peak usage periods while optimizing costs during periods of low demand. Snowflake’s built-in performance optimization, for example, automatically accelerates queries and scales resources as needed, minimizing the need for manual tuning.

3. Multi-Cloud Support

Snowflake and Databricks both operate across AWS, Microsoft Azure, and Google Cloud Platform, providing flexibility in cloud strategy and avoiding vendor lock-in at the infrastructure level.

This multi-cloud support enables organizations to optimize for specific cloud services, comply with data residency requirements, or implement disaster recovery strategies across multiple cloud providers.

4. Separation of Storage and Compute

Both platforms decouple storage and compute resources, allowing organizations to optimize each independently. Data can be stored cost-effectively, while compute resources can be scaled up or down based on processing requirements.

This separation enables multiple teams to access the same data using different compute configurations, supporting diverse workload patterns within a single organization.

5. SQL Support for Analytics

While Databricks started as a Spark-centric platform, both now provide robust SQL interfaces that support standard SQL queries and integrate with popular business intelligence tools. SQL support ensures compatibility with existing analytics workflows, making transitions such as a SQL Server to Snowflake migration more seamless for organizations already invested in SQL-based systems.

6. Enterprise-Grade Security

Both platforms offer comprehensive security features, including role-based access controls, encryption at rest and in transit, network isolation, and compliance certifications for regulations such as SOX, HIPAA, and GDPR.

A well-defined data governance strategy often complements these capabilities, ensuring security measures align with organizational policies and regulatory requirements.

Advanced security features, such as column-level security, dynamic data masking, and audit logging, meet the stringent requirements of highly regulated industries.
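
Dynamic data masking means the same column returns different values depending on who is asking. On both platforms this is configured through the platform's own policy features rather than application code, so the following Python function is only a conceptual sketch; the role names and the `mask_email` helper are hypothetical.

```python
def mask_email(value, role, privileged_roles=frozenset({"COMPLIANCE"})):
    """Return the real value for privileged roles and a masked value otherwise."""
    if role in privileged_roles:
        return value
    # Keep the domain so aggregate analysis still works; hide the local part.
    _local, _sep, domain = value.partition("@")
    return f"*****@{domain}" if domain else "*****"


for_analyst = mask_email("ann@example.com", role="ANALYST")
for_compliance = mask_email("ann@example.com", role="COMPLIANCE")
```

The key property is that masking is decided at query time from the caller's role, so no separate masked copy of the data has to be maintained.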

Databricks vs Snowflake – What’s the Difference?

Understanding the core differences between these platforms helps align your choice with specific business requirements and use cases. The sections below cover the main areas where they diverge.

Platform Type & Architecture

Databricks implements a lakehouse architecture that combines the flexibility of data lakes with the structure and performance of data warehouses. This unified approach handles both structured and unstructured data within a single platform, making it especially valuable for organizations considering a data warehouse to data lake migration as part of their modernization strategy.

Snowflake operates as a pure cloud data warehouse, explicitly optimized for structured and semi-structured data analytics. The platform focuses intensely on making SQL-based analytics fast, scalable, and accessible to business users.

The architectural difference creates a fundamental trade-off: Databricks offers more flexibility for diverse data types and processing patterns, while Snowflake provides superior performance and ease of use for traditional analytics workloads.

Data Processing & Performance

Databricks uses Apache Spark for distributed data processing, making it exceptionally well-suited for big data workloads that require complex transformations or real-time processing. As one of the leading big data platforms, it handles both batch and streaming data with the same processing engine, enabling consistent development patterns across different workload types.

Snowflake optimizes specifically for SQL query performance, with automatic query optimization, intelligent caching, and columnar storage designed to accelerate analytics workloads. The platform excels at handling high-concurrency scenarios where many users need simultaneous access to data.

For complex data processing involving machine learning, streaming analytics, or diverse data types, Databricks typically provides superior performance. For traditional business intelligence and SQL-based analytics, Snowflake often delivers faster query response times.

Machine Learning & AI

Databricks provides native machine learning capabilities through MLflow and integrated notebooks, creating a complete environment for the entire ML lifecycle. Data scientists can explore data, build models, track experiments, and deploy models to production within the same platform.

The collaborative notebook environment enables data scientists to work directly with raw data, eliminating the latency and complexity of moving data between systems for model development.

Snowflake takes an integration-focused approach to machine learning, connecting with external ML platforms like DataRobot, Amazon SageMaker, and Azure Machine Learning. While Snowpark enables running Python and other languages within Snowflake, the platform lacks the comprehensive ML tooling that Databricks provides natively. Organizations can still use these integrations to support predictive analytics and automation use cases on data held in Snowflake.

For organizations where machine learning represents a core capability, Databricks provides significant advantages. For companies that primarily need traditional analytics with occasional ML projects, Snowflake’s integration approach may suffice.

Ease of Use & SQL Support

Databricks targets technically sophisticated users, particularly data scientists and engineers comfortable with code-based interfaces. The notebook environment provides tremendous flexibility but requires programming skills to use effectively.

Recent improvements to Databricks’ SQL interface have made it more accessible to business analysts, but the platform remains primarily oriented toward technical users who can use its full capabilities.

Snowflake prioritizes ease of use for business analysts and SQL developers. The platform’s interface feels familiar to anyone who has worked with traditional databases, and with a wide range of Snowflake connectors available for BI and ETL tools, users can integrate data seamlessly and achieve immediate productivity without the need for extensive training.

Web-based administration tools, automatic optimization, and intuitive interfaces make Snowflake accessible to a broader range of users within an organization.

Pricing & Cost Efficiency

Databricks utilizes a consumption-based pricing model centered on Databricks Units (DBUs), which represent processing capacity. Costs scale with cluster size and runtime, making compute-intensive workloads potentially expensive. However, the platform’s ability to handle multiple workload types within a single environment can reduce overall infrastructure costs by eliminating the need for separate systems.

Snowflake’s per-second billing and automatic suspension features provide more predictable costs for analytics workloads. Warehouses can suspend automatically after a configurable idle period, so compute charges stop once work finishes, although each resume is billed for a minimum of 60 seconds. The separation of storage and compute enables organizations to store large amounts of data inexpensively, while only incurring compute costs when actively analyzing that data.
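
A rough back-of-the-envelope model can help compare the two pricing approaches for your own workloads. The sketch below assumes Snowflake's standard warehouse sizing (credits per hour doubling with each size) and its 60-second minimum per resume, and assumes a Databricks setup where DBU charges are added to the cloud provider's VM charges. All prices passed in are hypothetical placeholders; actual rates depend on edition, region, cloud, and contract.

```python
# Credits consumed per hour by warehouse size (doubles with each size step).
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8}
MIN_BILLED_SECONDS = 60  # each resume is billed for at least one minute


def snowflake_compute_cost(size, run_seconds, price_per_credit):
    """Cost of a warehouse, given the length in seconds of each run
    between a resume and the following suspend."""
    billed = sum(max(MIN_BILLED_SECONDS, s) for s in run_seconds)
    return CREDITS_PER_HOUR[size] * billed / 3600 * price_per_credit


def databricks_compute_cost(dbu_per_hour, hours, price_per_dbu,
                            vm_cost_per_hour=0.0):
    """DBU charge plus the cloud provider's VM charge for the same runtime."""
    return hours * (dbu_per_hour * price_per_dbu + vm_cost_per_hour)


# Hypothetical inputs: three warehouse runs of 30 s, 10 min, and 30 min.
sf_cost = snowflake_compute_cost("M", [30, 600, 1800], price_per_credit=3.0)
# Hypothetical inputs: a cluster consuming 8 DBUs per hour for two hours.
dbx_cost = databricks_compute_cost(dbu_per_hour=8, hours=2,
                                   price_per_dbu=0.5, vm_cost_per_hour=3.0)
```

With these placeholder rates the Snowflake example comes to about 8.20 and the Databricks example to 14.00, but the point of the model is the shape of the calculation: the 30-second run is billed as a full minute, and the Databricks total has two components rather than one.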

Ecosystem & Integrations

Databricks emphasizes open-source integration and standards, making it compatible with a vast ecosystem of Spark-based tools and libraries. Strong cloud platform integrations, particularly with Azure and AWS, use existing cloud services for identity management, security, and storage.

Snowflake has invested heavily in native connectors for popular business intelligence and ETL data transformation tools, reducing implementation complexity and accelerating time-to-value for common analytics use cases.

The platform’s data marketplace and sharing capabilities create unique integration opportunities that go beyond traditional data connections.

Security & Compliance

Both platforms provide enterprise-grade security features that meet the requirements of highly regulated industries. Role-based access controls, encryption, audit logging, and compliance certifications are standard on both platforms.

Neither platform has a significant security advantage; the choice typically comes down to how security features integrate with existing organizational policies and workflows.

Databricks vs Snowflake – Feature-by-Feature Comparison

| Feature | Databricks | Snowflake |
| --- | --- | --- |
| Architecture | Lakehouse (unified data lake + warehouse) | Cloud data warehouse |
| Data Processing | Spark-based, handles batch & streaming | SQL-optimized, high concurrency |
| Machine Learning | Native MLflow, integrated notebooks | Integration with external ML platforms |
| Programming Languages | Python, R, Scala, SQL, Java | SQL, Python (via Snowpark), Java |
| Storage Format | Delta Lake, Parquet, JSON, etc. | Proprietary columnar format |
| Real-time Processing | Native streaming support | Limited streaming capabilities |
| User Interface | Notebooks, SQL editor | Web-based SQL interface |
| Auto-scaling | Cluster auto-scaling | Automatic compute scaling |
| Data Sharing | File-based sharing | Native secure data sharing |
| Pricing Model | DBU-based consumption | Per-second billing |
| Learning Curve | Steep for non-technical users | Gentle for SQL users |

Databricks vs Snowflake – Pros and Cons

When evaluating Databricks and Snowflake, it’s essential to consider their strengths in relation to their limitations. Each platform excels in specific areas, and the right choice depends on your organization’s data strategy, skill sets, and use cases.

Databricks Pros

- Advanced AI and Machine Learning Capabilities: Databricks shines in AI-driven initiatives and advanced analytics. Its integrated environment bridges data preparation, model development, and deployment, reducing friction in ML workflows.

- Lakehouse Architecture for Flexibility: By combining data warehouse and data lake capabilities, Databricks can handle structured, semi-structured, unstructured, and streaming data, all within a single platform.

- High Customization and Integration: The platform offers deep flexibility, allowing teams to fine-tune performance and integrate with specialized tools to meet unique business needs.

Databricks Cons

- Steep Learning Curve: Since it is developer-focused, non-technical users often face challenges. Organizations may need skilled data engineers or invest in data engineering services to unlock the platform’s full potential.

- Less Optimized for Traditional Analytics: While powerful for data science, Databricks lacks the polished user experience of purpose-built data warehouse solutions, making it less intuitive for standard BI tasks.

- Cost Monitoring Required: Without active governance, compute-intensive workloads can quickly escalate costs, especially for teams new to big data processing.

Snowflake Pros

- User-Friendly and Accessible: Snowflake’s SQL-first approach makes it approachable for analysts and business users, enabling quick adoption without the need for extensive training. Organizations looking to fully leverage Snowflake’s capabilities often engage Snowflake modernization services to optimize performance, streamline workflows, and ensure best practices in data architecture.

- High Performance with Minimal Management: The platform delivers fast query performance, automatic optimization, and scalability, without the need for manual tuning.

- Strong Support for Structured Data: Snowflake excels in analytics, reporting, and dashboards, making it a top choice for organizations prioritizing BI and traditional data workloads.

Snowflake Cons

- Limited Native Machine Learning: Snowflake does not offer robust built-in ML tools, requiring integration with external platforms for advanced analytics.

- Dependency on Third-Party Tools: For more complex use cases, organizations often need additional technologies, which can increase cost and system complexity.

- Less Flexible with Unstructured Data: While strong in structured and semi-structured workloads, Snowflake is not ideal for organizations that need to process diverse data types, such as images, videos, or real-time streams.

Databricks vs Snowflake – Which One Should You Choose?

The decision between Databricks and Snowflake depends on your organization’s specific requirements, technical capabilities, and strategic priorities.

Choose Databricks for ML

Organizations should select Databricks when machine learning and AI represent core business capabilities rather than supplementary features. Companies building recommendation engines, fraud detection systems, or predictive analytics solutions benefit from the integrated ML environment.

Data engineering teams that process diverse data types or require real-time analytics capabilities will find Databricks’ flexibility and Spark-based processing advantageous.

Organizations with strong technical teams who value customization and control over their data processing environment often prefer Databricks’ flexibility and power, while others may rely on Databricks consulting services to fully leverage the platform’s advanced capabilities.

Choose Snowflake for Analytics

Snowflake excels when the primary use case involves traditional business intelligence and SQL-based analytics. Organizations needing to democratize data access across business users or support high-concurrency query workloads often find Snowflake data analytics more suitable.

Companies prioritizing ease of implementation and management, particularly those with limited data engineering resources, benefit from Snowflake’s automated optimization and simple administration, often supported by Snowflake consulting to accelerate adoption and best practices.

Organizations focused on structured data analytics, reporting, and dashboards typically achieve faster time-to-value with Snowflake’s purpose-built data warehouse architecture.

Real-World Use Cases

Examining how leading organizations use these platforms provides practical insights into their capabilities and limitations.

Databricks – Advanced ML, Streaming, and Real-Time Insights

The following organizations illustrate how Databricks is used in practice:

- Bayer deployed Databricks to power its ALYCE analytics platform for clinical data, processing complex, regulated pharmaceutical data with machine learning and business intelligence capabilities to accelerate clinical trial reviews and drug development processes.

- The Texas Rangers capture player motion data at hundreds of frames per second, using Databricks for real-time data collection and analysis of athletic performance and injury risk. This enables coaches to make data-driven decisions during games and training sessions.

- HSBC used Azure Databricks and Delta Lake for mobile banking app analytics, reducing the complex analytics runtime from approximately six hours to just six seconds, which dramatically improved user engagement and operational efficiency.

- Shell processes sensor data from over 200 million valves using Databricks to anticipate equipment failures before they occur, enabling predictive maintenance strategies that improve safety and reduce operational costs.

Snowflake – Scalable Analytics, Dashboards & Storage

The following organizations illustrate how Snowflake is used in practice:

- NIB Group, an Australian health insurer, deployed Snowflake for centralized business intelligence, enabling analysts to query claims, policies, sales, and customer behavior data through Tableau with high scalability and smooth integration.

- Pfizer adopted Snowpark to accelerate its analytics processes by 4 times, while simultaneously reducing the total cost of ownership by 57%, highlighting the platform’s strengths in performance improvements and Snowflake cost optimization for large-scale analytics operations.

- Petco achieved 50% faster data processing and improved its data science team efficiency by 20% after migrating analytics workloads to Snowflake, showcasing the platform’s ability to enhance both technical performance and team productivity.

Hybrid – Both Platforms in Tandem

An enterprise financial services firm implemented a hybrid architecture using Snowflake for regulated reporting and real-time data warehousing while deploying Databricks for quantitative research and algorithmic trading. This approach enabled smooth bidirectional data flows with centralized governance, delivering 612% ROI over three years and over $21 million in net benefits.

A retail and logistics business uses Databricks to transform raw “bronze” data into curated “silver/gold” layers, then loads final aggregates into Snowflake for business intelligence dashboards. While this architecture delivers powerful capabilities and strong performance, organizations note that it can result in very high monthly costs.
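
The bronze, silver, and gold layering described above can be sketched in a few lines of plain Python. In practice these steps would run as Spark jobs over Delta tables; the field names and the `to_silver` and `to_gold` functions here are hypothetical and only show what each layer is responsible for.

```python
from collections import defaultdict


def to_silver(bronze_rows):
    """Clean raw records: skip malformed rows, normalise casing and types."""
    silver = []
    for row in bronze_rows:
        try:
            silver.append({
                "store": row["store"].strip().upper(),
                "amount": float(row["amount"]),
            })
        except (KeyError, ValueError, TypeError, AttributeError):
            # A production pipeline would usually quarantine bad rows
            # for inspection instead of silently skipping them.
            continue
    return silver


def to_gold(silver_rows):
    """Aggregate cleaned records into totals a BI dashboard would read."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["store"]] += row["amount"]
    return dict(totals)


bronze = [
    {"store": " nyc ", "amount": "10.5"},
    {"store": "NYC", "amount": "4.5"},
    {"store": "sf", "amount": "n/a"},   # unparseable amount
    {"amount": "3"},                     # missing store
]
silver = to_silver(bronze)
gold = to_gold(silver)
```

Only the small `gold` result would be loaded into the warehouse for dashboards, which is why the heavy transformation work and its compute cost sit on the Databricks side of this architecture.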

A common practitioner setup uses Snowflake as the enterprise data warehouse for reporting and analytics and Databricks as the primary environment for machine learning pipelines and data science. Final results are written back into Snowflake for consumption by business analysts.

FAQs

What is the main difference between Snowflake and Databricks?

The fundamental difference lies in their architectural approach: Databricks provides a unified lakehouse platform that combines the flexibility of a data lake with the performance of a data warehouse, optimized for machine learning and diverse data processing. Snowflake operates as a pure cloud data warehouse focused specifically on SQL-based analytics and business intelligence use cases.

Can you use Snowflake and Databricks together?

Yes, many organizations successfully implement hybrid architectures using both platforms. Databricks typically handles data engineering, machine learning, and complex data processing, while Snowflake serves as the analytics layer for business intelligence and reporting. This approach requires careful data governance and pipeline management, but can optimize for different use cases.

Is Databricks more expensive than Snowflake?

Cost comparison depends heavily on usage patterns. Databricks’ DBU-based pricing can become expensive for compute-intensive workloads, but may provide better value for organizations consolidating multiple data processing needs. Snowflake’s per-second billing and auto-suspend features often result in more predictable costs for analytics workloads, making direct price comparisons difficult without specific usage analysis.

Is Databricks a data warehouse?

Databricks is a lakehouse platform that combines data lake and data warehouse capabilities rather than being a traditional data warehouse. While it can serve data warehouse use cases through its SQL interface and Delta Lake technology, it’s designed to handle broader data processing requirements, including machine learning, streaming analytics, and processing unstructured data.

What language does Snowflake use?

Snowflake primarily uses SQL as its query language, making it familiar to most database professionals and analysts. Snowpark adds Python, Java, and Scala APIs for more advanced processing, and JavaScript can be used for stored procedures and user-defined functions, but SQL remains the primary interface for most users.

Which cloud platforms support Snowflake and Databricks?

Both platforms support multi-cloud deployment across Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). This multi-cloud support offers flexibility in cloud strategy, enabling organizations to select their preferred cloud provider or implement multi-cloud architectures for redundancy and optimization.

Is Snowflake replacing traditional data warehouses?

Snowflake represents the evolution of data warehouses for cloud environments rather than simply replacing traditional systems. Many organizations migrate from legacy data warehouses to Snowflake to reap the benefits of the cloud, including automatic scaling, reduced maintenance overhead, and pay-per-use pricing. However, the core concepts of data warehousing remain consistent.

Can Databricks connect to Snowflake?

Yes, Databricks provides native connectors for Snowflake, enabling smooth data movement between the platforms. Organizations commonly use Databricks for data processing and machine learning, while storing final results in Snowflake for analytics consumption, thereby creating integrated workflows between the two systems.
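
As a rough illustration of that workflow, the sketch below writes a Spark DataFrame from a Databricks notebook to a Snowflake table. It assumes a runtime where the Snowflake Spark connector is available under the `snowflake` format name and uses the connector's `sfURL`, `sfUser`, `sfPassword`, `sfDatabase`, `sfSchema`, and `sfWarehouse` options; the helper function names and all argument values are placeholders, and credentials would normally come from a secret store rather than literals.

```python
def snowflake_options(account, user, password, database, schema, warehouse):
    """Build the option map passed to the Snowflake Spark connector."""
    return {
        "sfURL": f"{account}.snowflakecomputing.com",
        "sfUser": user,
        "sfPassword": password,  # prefer a secret store over literals
        "sfDatabase": database,
        "sfSchema": schema,
        "sfWarehouse": warehouse,
    }


def write_to_snowflake(df, options, table, mode="overwrite"):
    """Write a Spark DataFrame to a Snowflake table.

    Requires a Spark runtime with the Snowflake connector installed;
    `df` is expected to be a pyspark.sql.DataFrame.
    """
    (df.write.format("snowflake")
        .options(**options)
        .option("dbtable", table)
        .mode(mode)
        .save())


# Placeholder values for illustration only.
opts = snowflake_options(
    account="my_account", user="etl_user", password="***",
    database="ANALYTICS", schema="PUBLIC", warehouse="LOAD_WH",
)
```

Inside a notebook, a call such as `write_to_snowflake(gold_df, opts, "DAILY_SALES")` would then push the final aggregates into Snowflake, where `gold_df` stands for whatever DataFrame your pipeline produces.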

Conclusion

The choice between Snowflake and Databricks ultimately depends on your organization’s data strategy and technical priorities. Databricks is the stronger option when advanced machine learning, real-time processing, and complex data engineering are central to your operations.

At the same time, Snowflake delivers exceptional value for traditional analytics, reporting, and business intelligence. In many cases, enterprises achieve the best results through a hybrid approach, leveraging the strengths of both platforms to cover diverse data needs.

At Folio3, we help organizations design and implement tailored data strategies, whether that means optimizing Snowflake for analytics, unlocking the power of Databricks for AI-driven innovation, or integrating both into a unified ecosystem. Our experts ensure smooth adoption, cost efficiency, and scalability so you can focus on turning data into actionable insights.