A modern data platform (MDP) is a cloud-native architecture that brings together data ingestion, storage, processing, analytics, and governance into a unified system. Unlike older systems that struggle with volume and variety, modern platforms handle structured and unstructured data at scale while supporting real-time analytics and machine learning workloads.

The shift from traditional data warehouses to modern architectures started around 2015 when companies like Snowflake and Databricks introduced cloud-native solutions that separated compute from storage. The data lakehouse concept emerged, combining the flexibility of data lakes with the structure of warehouses.

Enterprises use modern data platforms to break down silos and make information accessible across departments. Startups benefit from the ability to scale without massive upfront infrastructure costs. Data teams get tools that reduce manual work, while business leaders gain access to insights that were previously locked away in disconnected systems.

Why Do Organizations Need a Modern Data Platform?

Legacy data systems weren’t built for today’s demands. As data volumes surge, scalability becomes a critical challenge. 64% of data leaders cited scaling as their top concern.

But volume is only part of the problem. Modern enterprises pull data from countless sources (web, mobile, IoT, APIs, and social channels), making traditional ETL pipelines too slow and fragmented.

Speed matters more than ever. Retailers need dynamic pricing, banks must detect fraud in real time, and healthcare providers require instant patient insights. Legacy batch systems simply can’t deliver.

Compliance pressures like GDPR and CCPA further expose the limitations of old platforms, which lack built-in governance and lineage tracking. On top of this, outdated cost models force companies to pay for peak capacity even during low demand.

A modern data platform solves these issues with cloud-native scalability, real-time analytics, robust governance, and cost efficiency, making it a strategic necessity, not just a tech upgrade.

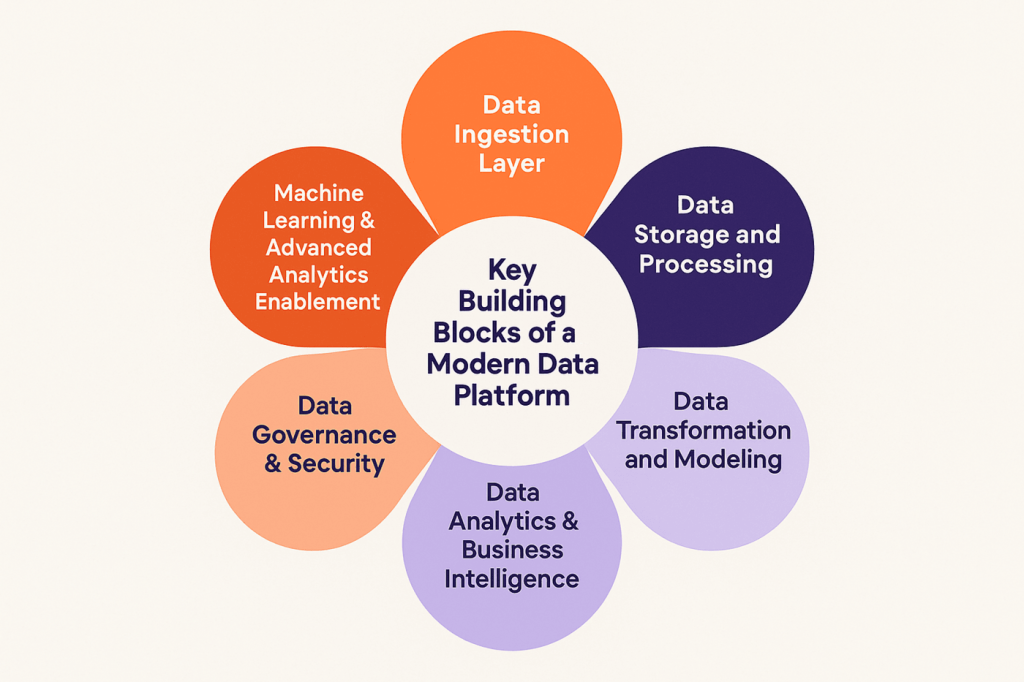

What Are the Core Components of a Modern Data Platform?

Building a modern data platform means understanding how different components work together to create a unified system. The core components of a modern data platform include:

1. Data Ingestion Layer

The ingestion layer moves data from source systems into the platform. This includes batch processing for historical data and streaming pipelines for real-time information. Tools like Apache Kafka handle high-throughput event streams, while Fivetran and Airbyte specialize in pulling data from SaaS applications through pre-built connectors.

Change data capture (CDC) has become essential for keeping data fresh without overloading source systems. Instead of full table refreshes, CDC tracks only what changed since the last sync. Debezium, an open-source CDC tool, can capture changes from databases like PostgreSQL and MySQL in near real-time.
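
To make the contrast with full table refreshes concrete, the sketch below applies a short stream of change events to an in-memory copy of a table. The event shape (`op`, `key`, `row`) is a simplified assumption made for illustration; it is not Debezium's actual message format.

```python
from typing import Any

# Hypothetical, simplified change events: "c" = create, "u" = update, "d" = delete.
ChangeEvent = dict[str, Any]


def apply_changes(target: dict[int, dict], events: list[ChangeEvent]) -> dict[int, dict]:
    """Apply change events in order, touching only the rows that changed."""
    for event in events:
        key = event["key"]
        if event["op"] in ("c", "u"):
            # Inserts and updates are both handled as an upsert on the key.
            target[key] = event["row"]
        elif event["op"] == "d":
            # Deletes remove the row if it is present.
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {event['op']!r}")
    return target


customers = {1: {"name": "Ada"}, 2: {"name": "Grace"}}
events = [
    {"op": "u", "key": 2, "row": {"name": "Grace H."}},
    {"op": "c", "key": 3, "row": {"name": "Linus"}},
    {"op": "d", "key": 1},
]
synced = apply_changes(customers, events)
```

Only three rows are touched here, regardless of how large the table is, which is the property that keeps load on the source system low.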

2. Data Storage and Processing

Storage in modern platforms separates from compute, allowing each to scale independently. Object storage like Amazon S3 or Azure Data Lake Storage provides cheap, durable storage for any data type. Cloud data warehouses like Snowflake and BigQuery store structured data optimized for analytical queries.

The data lakehouse architecture combines these approaches. Databricks popularized this concept with Delta Lake, which adds ACID transactions and schema enforcement to data lake storage. This means data teams can work with both raw and curated data in one place without moving it between systems.

Processing happens through distributed computing frameworks. Apache Spark remains popular for large-scale transformations, while modern SQL engines like Trino let analysts query data across different storage systems using familiar syntax.

3. Data Transformation and Modeling

Raw data rarely arrives in a format ready for analysis. The transformation layer cleans, enriches, and structures data according to business logic. dbt (data build tool) changed how teams approach this by bringing software engineering practices to analytics.

With dbt, analysts write SQL transformations that version control tracks, automated tests validate, and documentation explains. This approach, called analytics engineering, bridges the gap between data engineering and business intelligence.
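
dbt declares its tests in its own project files, so the Python below is only an analogy: a minimal sketch of what a "not null" check and a "unique" check assert about a column. The function names and sample rows are hypothetical.

```python
def not_null_failures(rows: list[dict], column: str) -> list[dict]:
    """Return rows where the column is missing or None."""
    return [row for row in rows if row.get(column) is None]


def unique_failures(rows: list[dict], column: str) -> list:
    """Return non-null values that appear more than once in the column."""
    seen, duplicates = set(), set()
    for row in rows:
        value = row.get(column)
        if value is None:
            continue  # Nulls are the job of the not-null check.
        if value in seen:
            duplicates.add(value)
        seen.add(value)
    return sorted(duplicates)


orders = [
    {"order_id": 1, "customer_id": 10},
    {"order_id": 2, "customer_id": None},
    {"order_id": 2, "customer_id": 11},
]
null_rows = not_null_failures(orders, "customer_id")
duplicate_ids = unique_failures(orders, "order_id")
```

A test passes when its failure list is empty; in this sample both checks report a problem.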

4. Data Analytics & Business Intelligence

The analytics layer turns processed data into insights through dashboards, reports, and ad-hoc queries. Modern BI tools like Tableau, Power BI, and Looker connect directly to cloud data warehouses, eliminating the need to extract data into separate analytical databases.

Self-service analytics has become a priority. Business users can explore data and build their own reports without waiting for IT. However, this requires good data modeling and governance to ensure people find accurate, trusted information.

5. Data Governance & Security

Governance ensures data quality, security, and compliance across the platform. A strong data governance strategy isn’t just about restricting access; it’s about making data discoverable and trustworthy while protecting sensitive information.

Data catalogs like Collibra and Alation create searchable inventories of available datasets with metadata, business definitions, and quality scores. Data observability tools like Monte Carlo monitor pipelines for anomalies and alert teams when something breaks.

Access control happens at multiple levels. Role-based permissions determine who can see what data. Column-level security masks sensitive fields like social security numbers. Row-level security filters data based on user attributes, so salespeople see only their region’s customers.
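
The two finer-grained levels can be sketched in a few lines. This is an illustrative sketch only: real platforms enforce these policies inside the warehouse or a policy engine, and the role names (`sales`, `compliance`) and field names are assumptions.

```python
def mask_ssn(value: str) -> str:
    """Keep only the last four characters visible."""
    return "***-**-" + value[-4:]


def visible_rows(rows: list[dict], user: dict) -> list[dict]:
    """Apply row-level filtering, then column-level masking, for one user."""
    result = []
    for row in rows:
        # Row-level security: salespeople see only their own region.
        if user["role"] == "sales" and row["region"] != user["region"]:
            continue
        visible = dict(row)
        # Column-level security: only the hypothetical compliance role sees raw SSNs.
        if user["role"] != "compliance":
            visible["ssn"] = mask_ssn(row["ssn"])
        result.append(visible)
    return result


customers = [
    {"name": "Ada", "region": "EMEA", "ssn": "123-45-6789"},
    {"name": "Grace", "region": "APAC", "ssn": "987-65-4321"},
]
sales_view = visible_rows(customers, {"role": "sales", "region": "EMEA"})
```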

6. Machine Learning & Advanced Analytics Enablement

Modern platforms serve as the foundation for AI and machine learning initiatives. Feature stores organize and version the data features that feed ML models. MLOps tools automate model training, deployment, and monitoring.

Emerging capabilities like AI enterprise search are also enhancing how organizations retrieve and analyze insights, enabling teams to access relevant information instantly across vast, complex data environments.

Cloud providers offer managed ML services that integrate with data platforms. Amazon SageMaker, Google Vertex AI, and Azure Machine Learning let data scientists build models without managing infrastructure. These services pull data directly from cloud warehouses and lakes, eliminating manual data movement.

How Does a Modern Data Platform Differ from a Traditional Data Warehouse?

As organizations handle increasingly diverse and fast-moving data, traditional data warehouses are struggling to keep pace. While they remain effective for structured reporting and historical analysis, their limitations in scalability, flexibility, and cost-efficiency often restrict modern business needs.

A modern data platform (MDP) overcomes these challenges by embracing cloud-native architectures, real-time processing, and support for varied data types, making it a more future-ready solution. Here’s a side-by-side comparison:

| Aspect | Traditional Data Warehouse | Modern Data Platform |
| --- | --- | --- |
| Architecture | On-premise or single-cloud, tightly coupled compute and storage | Cloud-native, decoupled architecture allowing independent scaling |
| Data Types | Primarily structured data requiring predefined schemas | Handles structured, semi-structured, and unstructured data with schema-on-read flexibility |
| Scalability | Vertical scaling with hardware limits and expensive upgrades | Elastic horizontal scaling that adjusts automatically to workload demands |
| Cost Model | High upfront capital expenditure with fixed capacity costs | Pay-per-use pricing based on actual consumption and usage patterns |
| Processing Speed | Batch processing with scheduled ETL jobs running periodically | Real-time streaming and batch processing supporting immediate insights |

7 Business-Critical Benefits of Modern Data Platforms

A modern data platform has become the backbone of digital enterprises, enabling agility, scalability, and smarter decisions. Unlike traditional systems, a modern data platform architecture integrates real-time analytics, automation, and governance, offering tangible business value across industries.

1. Faster, Data-Driven Decision Making

Speed matters when market conditions change quickly. Modern platforms reduce the time from data collection to insight from days to minutes. Instacart, for example, processes millions of customer transactions daily and uses real-time analytics to optimize delivery routes and inventory placement.

The ability to query current data changes how teams operate. Marketing can see campaign performance during the day and adjust spending before budget is wasted on underperforming channels. Supply chain managers spot disruptions as they happen instead of discovering problems in next week’s report.

2. Operational Efficiency at Scale

Automation reduces the manual work that bogs down data teams. A single data engineer can manage pipelines that would have required a team of five in a traditional environment.

Tools like Airflow orchestrate complex workflows, dbt handles transformations, and cloud platforms manage infrastructure automatically. Optimizing a big data pipeline in this way ensures faster delivery, reduced errors, and consistent data quality at scale.

3. Improved Collaboration Across Teams

When everyone works from the same data, collaboration improves. Modern platforms create a single source of truth that all teams can access with appropriate permissions. Data scientists, analysts, and business users query the same datasets, reducing conflicts about which numbers are correct.

Shared data models and documentation make it easier for new team members to get productive quickly. At Spotify, their data platform serves over 2,000 employees across different functions, all working with consistent definitions of key metrics like monthly active users and listening hours.

4. Enhanced Customer Experiences

Understanding customers requires combining data from multiple touchpoints. Modern platforms unify web analytics, mobile app usage, purchase history, support tickets, and survey responses into complete customer profiles.

With the rise of generative AI for data analytics, businesses can now uncover deeper patterns in customer behavior and deliver even more personalized experiences.

Starbucks uses their data platform to power personalized recommendations in their mobile app. The system analyzes purchase patterns, location data, and preferences to suggest drinks and food items each customer might enjoy. This personalization contributed to mobile orders reaching 26% of U.S. transactions by 2023.

5. Scalability & Flexibility

Business growth shouldn’t require infrastructure overhauls. Modern platforms scale resources automatically based on demand. During peak periods like Black Friday, retail companies can handle 50x normal query volumes without manual intervention.

6. Trust, Security & Compliance

Regulations require companies to know where data lives, who accesses it, and how it’s protected. Modern platforms build these capabilities in rather than adding them as afterthoughts. Automated audit logs track every query and data access. Encryption protects data at rest and in transit.

Data lineage tools show how data flows through the platform, from source systems through transformations to final reports. When regulators ask questions or audits happen, teams can produce documentation quickly instead of spending weeks reconstructing data flows.

7. Innovation & Competitive Advantage

Companies that make better use of data pull ahead of competitors. Modern platforms enable experimentation that wasn’t practical before.

Data scientists can test hundreds of model variations using historical data and apply big data predictive analytics to uncover emerging trends, customer behaviors, and operational risks. Product teams can run A/B tests and analyze results in real-time to drive faster innovation.

How Do You Build Your Business’s Modern Data Platform?

Creating a modern data platform requires careful planning and execution. Here’s a practical roadmap that organizations can follow.

Step #1: Business Alignment

Start by understanding what problems the platform needs to solve. Talk to stakeholders across departments about their data pain points and desired outcomes. A common mistake is building infrastructure without clear use cases, resulting in expensive systems that don’t deliver value.

Document specific business objectives like reducing reporting time from 48 hours to 4 hours, enabling real-time inventory visibility, or supporting new ML-powered product features. These concrete goals guide technology choices and help measure success later.

Step #2: Data Landscape Assessment

Map existing data sources, volumes, and quality issues. Most organizations discover they have more data sources than expected and significant quality problems. An assessment might reveal that customer records exist in seven different systems with inconsistent formatting and duplicate entries.
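
As one way to picture the duplicate-record problem, the sketch below matches customer records from different systems on a normalized key. The field names, source names, and matching rule (lower-cased email plus digits-only phone) are assumptions; real entity resolution usually needs fuzzier matching.

```python
import re


def normalize(record: dict) -> tuple[str, str]:
    """Build a matching key from a lower-cased email and a digits-only phone."""
    email = record["email"].strip().lower()
    phone = re.sub(r"\D", "", record["phone"])
    return email, phone


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each normalized key."""
    unique: dict[tuple[str, str], dict] = {}
    for record in records:
        unique.setdefault(normalize(record), record)
    return list(unique.values())


records = [
    {"source": "crm", "email": "Ada@Example.com ", "phone": "(555) 010-0001"},
    {"source": "billing", "email": "ada@example.com", "phone": "555-010-0001"},
    {"source": "support", "email": "grace@example.com", "phone": "555 010 0002"},
]
merged = deduplicate(records)
```

The first two records differ only in formatting, so they collapse into one; counting how often that happens per source is a simple first quality metric for the assessment.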

Evaluate current skills and staffing. Does the team have cloud expertise? Are there people who understand data modeling? Identifying skill gaps early allows time for training or hiring before the platform goes live.

Step #3: Architecture Design

Choose the architectural pattern that fits your needs. A centralized data warehouse works for companies with primarily structured data and clear ownership. A data lakehouse suits organizations with diverse data types and multiple analytical use cases. Data mesh applies to large enterprises where different domains need autonomy over their data.

Select cloud provider and core technologies. Consider factors beyond features and pricing: existing cloud relationships, team expertise, regional data residency requirements, and integration with current tools. Companies often start with one cloud and adopt multi-cloud gradually as needs evolve.

Step #4: Pipeline Development

Build data pipelines incrementally through cloud data integration, starting with high-value use cases. Don’t try to migrate everything at once. Pick a department or business process where improved data access creates immediate value.

Implement proper error handling and monitoring from the beginning. Pipelines will fail; the question is whether teams know about failures immediately or discover them when reports look wrong days later. Tools like Airflow provide alerting capabilities that notify teams when jobs fail or take longer than expected.
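
The retry-then-alert pattern can be sketched without any orchestrator. The code below is a generic illustration, not Airflow's API; `run_with_retries`, `flaky_load`, and the `alert` callback are hypothetical names.

```python
import time
from typing import Callable


def run_with_retries(
    task: Callable[[], str],
    alert: Callable[[str], None],
    max_attempts: int = 3,
    delay_seconds: float = 0.0,
) -> str:
    """Run a task, retrying on failure and alerting if every attempt fails."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == max_attempts:
                # Surface the failure immediately instead of letting it go unnoticed.
                alert(f"task failed after {attempt} attempts: {exc}")
                raise
            time.sleep(delay_seconds)
    raise RuntimeError("unreachable: the loop always returns or raises")


calls = {"count": 0}


def flaky_load() -> str:
    """Hypothetical pipeline step that fails on its first call."""
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("source unavailable")
    return "loaded"


alerts: list[str] = []
result = run_with_retries(flaky_load, alerts.append)
```

Here the transient failure is absorbed by the retry and no alert fires; a task that fails on every attempt would both alert and re-raise, so the failure stays visible.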

Step #5: Governance & Security

Establish governance policies before opening access widely. Define data ownership, quality standards, and access control principles. Create a data catalog that helps people find and understand available datasets.

Security can’t be an afterthought. Implement encryption, access controls, and audit logging as part of the initial build. Getting security right from the start is easier than retrofitting it onto a platform already in use.

Step #6: Analytics & Insights

Deploy BI tools and train users on self-service analytics. Create starter dashboards and reports that demonstrate value quickly. Success breeds adoption; when one team sees benefits, others will want access.

Develop a semantic layer that provides business-friendly names and definitions for data. Instead of querying “tbl_cust_txn_dtl,” users work with “Customer Transactions” and “Purchase Date.” This abstraction layer protects users from database complexity and makes insights more accessible.
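
A toy version of that abstraction is sketched below. It is not how any particular BI tool implements its semantic layer, and the physical column names (`txn_dt`, `txn_amt`) are invented for illustration.

```python
# Hypothetical mapping from business-friendly names to physical names.
SEMANTIC_MODEL = {
    "Customer Transactions": {
        "table": "tbl_cust_txn_dtl",
        "fields": {"Purchase Date": "txn_dt", "Amount": "txn_amt"},
    }
}


def build_query(entity: str, fields: list[str]) -> str:
    """Translate business names into a SQL SELECT over physical names."""
    model = SEMANTIC_MODEL[entity]
    columns = ", ".join(
        f'{model["fields"][name]} AS "{name}"' for name in fields
    )
    return f'SELECT {columns} FROM {model["table"]}'


sql = build_query("Customer Transactions", ["Purchase Date", "Amount"])
```

Because the mapping lives in one place, renaming a physical column means updating the model rather than every report that uses it.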

Step #7: Training & Scaling

Invest in training programs that match different roles. Business analysts need to learn BI tools and SQL basics. Data engineers require deeper training on pipeline development and cloud services. Executives benefit from understanding what the platform can do and how to ask better questions of data.

Plan for growth. As adoption increases, more data sources will need integration and more use cases will emerge. Build processes for evaluating and prioritizing new requests. Create a roadmap that balances quick wins with longer-term strategic initiatives.

Modern Data Platform Use Cases Across Industries

Different industries apply modern data platforms to solve sector-specific challenges.

1. Retail & E-Commerce

Retailers use modern platforms to unify online and in-store data, creating complete views of customer behavior across channels. With the help of predictive analytics in retail, brands can forecast demand, optimize stock levels, and anticipate customer preferences more accurately.

Target’s data platform processes point-of-sale transactions, website clicks, mobile app usage, and supply chain information to optimize inventory placement and personalize marketing.

Dynamic pricing represents another key use case. Amazon adjusts millions of prices daily based on competitor pricing, demand signals, and inventory levels. This requires processing vast amounts of data and making decisions in near real-time.

2. Healthcare & Life Sciences

Healthcare organizations face strict regulations about protecting patient data while needing to share information across providers. Modern platforms enable secure data sharing through proper access controls and audit trails.

Partnering with healthcare data analytics consulting experts helps organizations design compliant architectures and extract deeper insights from clinical and operational data.

Cleveland Clinic uses a modern data platform to analyze patient outcomes across different treatment protocols. By combining electronic health records, lab results, and imaging data, doctors can identify which approaches work best for specific patient populations.

3. Financial Services & Banking

Banks need real-time fraud detection that analyzes transaction patterns and identifies suspicious activity before money leaves accounts.

Modern data analytics for finance enables institutions like JPMorgan Chase to process hundreds of millions of transactions daily, applying machine learning models that flag potential fraud for investigation.

Regulatory reporting represents another critical application. Financial institutions must produce reports for multiple regulators with strict deadlines. Modern platforms automate much of this work, pulling data from various systems and formatting it according to regulatory requirements.

4. Manufacturing & Supply Chain

Manufacturers use IoT sensors to monitor equipment performance and predict maintenance needs. A modern platform ingests sensor data, identifies anomalies that signal impending failures, and triggers work orders before breakdowns happen.
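
One simple way to flag such anomalies is to compare new readings against a baseline of known-good values. The sketch below uses a z-score threshold; the readings, baseline size, and threshold are hypothetical, and production systems typically use richer models.

```python
from statistics import mean, stdev


def find_anomalies(readings: list[float], baseline_size: int, threshold: float = 3.0) -> list[int]:
    """Return indexes of readings far from the baseline mean, in standard deviations."""
    baseline = readings[:baseline_size]
    center, spread = mean(baseline), stdev(baseline)
    return [
        index
        for index, value in enumerate(readings[baseline_size:], start=baseline_size)
        if spread > 0 and abs(value - center) / spread > threshold
    ]


# Hypothetical vibration readings; the last value simulates a developing fault.
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 2.5]
flagged = find_anomalies(vibration, baseline_size=6)
```

The flagged indexes would then feed whatever step creates the work order.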

Siemens reported in 2023 that predictive maintenance powered by their data platform reduced unplanned downtime by 30% across manufacturing facilities. The system analyzes vibration patterns, temperature readings, and performance metrics to spot problems early.

5. Telecommunications & Media

Telecom companies analyze network performance data to optimize infrastructure investments and improve customer experience. Verizon’s platform processes billions of network events daily to identify coverage gaps and capacity constraints.

Streaming media companies like Disney+ use data platforms to understand viewing patterns and guide content decisions. What shows do people watch? When do they stop watching? This information influences which series get renewed and how content recommendations work.

What Technologies Power Modern Data Platforms?

Modern data platforms combine best-of-breed tools rather than relying on single-vendor stacks.

1. Data Ingestion & Integration

- Fivetran automates connector maintenance and schema drift handling for SaaS applications. The tool monitors source schemas and adjusts pipelines automatically when fields change.

- Apache Kafka handles high-throughput event streaming for real-time use cases, forming a core part of modern data ingestion architecture. Companies like LinkedIn (where Kafka originated) and Uber process millions of events per second through Kafka clusters.

- Airbyte offers an open-source alternative with community-built connectors. The platform has grown quickly since its 2020 launch, now supporting over 300 data sources.

2. Data Storage & Management

- Snowflake pioneered the cloud data warehouse category with automatic scaling and near-zero maintenance. Their architecture separates storage, compute, and services, allowing each to scale independently for efficient big data storage and management.

- Google BigQuery excels at ad-hoc analytics on large datasets through a serverless architecture. Users run queries without provisioning clusters or managing infrastructure.

- Databricks built their platform around Apache Spark and the lakehouse architecture. The Unity Catalog provides centralized governance across cloud data lakes and warehouses.

3. Data Processing & Transformation

- dbt transformed analytics engineering by bringing software development practices to SQL-based transformations. The tool now has over 30,000 companies using it according to their 2024 user conference.

- Apache Airflow orchestrates complex workflows with dependencies and error handling. It supports data engineering best practices by ensuring reliable, scalable, and well-managed data pipelines.

- Matillion provides a visual interface for building data pipelines that run on cloud data warehouses. This low-code approach makes pipeline development accessible to analysts without strong programming backgrounds.

4. Data Governance & Security

- Collibra offers enterprise data governance with workflow automation and policy enforcement. Their platform helps organizations manage data quality, privacy, and compliance requirements as part of a comprehensive data governance strategy.

- Monte Carlo pioneered data observability by applying concepts from software monitoring to data pipelines. The tool detects anomalies in data volume, freshness, schema, and distributions.

- Immuta simplifies data access control with policy-based automation. Instead of manually granting permissions, administrators define policies that automatically apply to new data as it arrives.
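
To give a feel for the freshness and volume checks that observability tools automate, here is a minimal sketch with fixed thresholds. It does not reflect any vendor's implementation; the function name, the 24-hour age limit, and the 50% tolerance are assumptions.

```python
from datetime import datetime, timedelta


def check_table(
    last_loaded: datetime,
    row_count: int,
    expected_rows: int,
    now: datetime,
    max_age: timedelta = timedelta(hours=24),
    tolerance: float = 0.5,
) -> list[str]:
    """Return human-readable issues for a table's freshness and volume."""
    issues = []
    if now - last_loaded > max_age:
        issues.append("stale: last load is older than the allowed age")
    # Flag volumes that deviate from the expectation by more than the tolerance.
    if abs(row_count - expected_rows) > tolerance * expected_rows:
        issues.append("volume: row count deviates from the expected level")
    return issues


now = datetime(2024, 1, 2, 12, 0)
issues = check_table(
    last_loaded=datetime(2024, 1, 1, 6, 0),
    row_count=400,
    expected_rows=1000,
    now=now,
)
```

In practice the expected volume and acceptable age are usually learned from history rather than hard-coded.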

5. Analytics & Machine Learning

- Tableau remains the market leader in visual analytics, known for powerful visualizations and exploration capabilities. Salesforce acquired Tableau in 2019 for $15.7 billion, reflecting the strategic value of analytics tools.

- Looker popularized a code-defined semantic layer through LookML, allowing analysts to define metrics once and reuse them across reports. Google completed its acquisition of Looker in 2020 and integrated it deeply with BigQuery.

- Amazon SageMaker provides end-to-end ML capabilities from data preparation through model deployment. The service integrates with AWS data services and supports predictive analytics techniques that help organizations extract deeper insights and forecast trends using historical data.

How Do Leading Modern Data Platforms Compare?

| Feature | Snowflake | Databricks | AWS Redshift | Google BigQuery | Azure Synapse |
| --- | --- | --- | --- | --- | --- |
| Primary Strength | Ease of use and multi-cloud support | Unified analytics and AI workloads | AWS ecosystem integration | Serverless analytics at scale | Microsoft ecosystem integration |
| Architecture | Centralized cloud data warehouse | Lakehouse on cloud storage | Columnar warehouse with optional lake integration | Serverless warehouse | Hybrid warehouse and lake |
| Best For | Companies wanting simplicity and avoiding vendor lock-in | Organizations with heavy ML and data science needs | AWS-native companies with existing infrastructure | Google Cloud users needing minimal management | Microsoft-centric enterprises |
| Pricing Model | Pay per second of compute usage | DBU-based pricing for compute | Hourly pricing with reserved instances | Pay per query with flat-rate options available | Pay-as-you-go with commitment discounts |
| Key Differentiator | True multi-cloud with consistent experience | Delta Lake and ML integration | Mature product with extensive AWS service integration | Separation of storage and compute with automatic scaling | Deep integration with Power BI and Azure services |

What Challenges Do Companies Face Building Modern Data Platforms?

While a modern data platform offers scalability, flexibility, and advanced analytics, organizations often face several roadblocks in design and adoption. Addressing these challenges early is essential to building a robust modern data infrastructure platform:

1. Data Quality & Integration Issues

Even the best modern data platform architecture fails if data quality is poor. Duplicate records, inconsistent formats, and outdated information undermine insights, costing organizations an average of $12.9 million annually (Gartner, 2023). Integration adds complexity, as legacy systems often lack modern APIs and require custom connectors or third-party tools to ingest data efficiently.

Many enterprises turn to data integration consulting to streamline these processes, ensure compatibility across systems, and accelerate data onboarding without disrupting existing workflows.

2. Scalability & Performance Bottlenecks

Not all cloud-native solutions scale seamlessly. Queries that work at 1TB may fail at 10TB without indexing and optimization. Unoptimized queries also drive up costs, and frequent runs can lead to unexpected spikes in cloud bills. Effective cost governance, query tuning, and monitoring are vital for sustainable big data implementation within a modern data platform strategy.

3. Governance, Security & Compliance

Balancing accessibility with security is critical. Overly strict controls limit productivity, while loose policies risk breaches. Data residency rules (GDPR, China’s data laws) add architectural constraints, dictating where and how data must be stored and processed within a modern data analytics platform.

4. Cultural & Organizational Resistance

Technical hurdles are often easier than cultural ones. Teams reliant on spreadsheets or siloed data may resist change. Overcoming this requires strong change management: appointing champions, delivering quick wins, and training employees to adopt new practices.

How Does Folio3 Enable Modern Data Platform Success?

Building a modern data platform requires expertise across multiple domains. Folio3 Data Services brings experience from hundreds of data transformation projects across industries.

Strategic Consulting & Roadmap Design

Folio3 works with clients to define clear business objectives and translate them into technical requirements. Our consultants have seen common pitfalls and help organizations avoid expensive mistakes. We assess current capabilities, identify gaps, and create realistic roadmaps that balance quick wins with long-term goals.

End-to-End Data Integration

Our integration specialists handle complex data source connections, from modern SaaS APIs to legacy mainframe systems. We design pipelines that are reliable, scalable, and maintainable, supported by a robust data integration architecture. When sources change, our patterns make updates straightforward rather than requiring complete rebuilds.

Secure & Compliant Data Governance

Folio3 implements governance frameworks that meet regulatory requirements while enabling self-service analytics. We configure access controls, establish data quality monitoring, and create catalogs that make data discoverable. Our approach balances security with usability.

Advanced Analytics & BI Enablement

We help organizations move beyond basic reporting to advanced analytics. Through our comprehensive data analytics services, our team designs semantic layers, builds starter dashboards, and trains users on self-service tools. We focus on adoption, not just deployment, ensuring that every insight drives measurable business impact.

AI/ML Integration & Innovation

Folio3 bridges the gap between data platforms and AI initiatives. We build feature stores, implement MLOps practices, and integrate ML models into business processes.

Our AI data extraction solution enables organizations to automatically capture, process, and structure information from diverse data sources, ensuring that AI models are trained on accurate and relevant datasets. Our data scientists work alongside engineers to ensure models have the clean, timely data they need.

Ongoing Support & Scaling

Data platforms require ongoing attention as business needs evolve. Folio3 provides managed services that monitor platform health, optimize performance, and implement new features. Our support model scales with client needs, from occasional consulting to fully managed operations.

How Do You Measure Success & ROI of a Modern Data Platform?

Measuring the success of a modern data platform requires more than tracking technical upgrades; it’s about connecting platform performance to business outcomes. Organizations can evaluate ROI across three core dimensions: performance, adoption, and impact.

1. Performance & Efficiency

A well-implemented modern data infrastructure platform should deliver faster queries, fresher data, and more reliable pipelines. Emerging innovations like Agentic AI in data engineering are further improving efficiency by autonomously monitoring workloads, optimizing queries, and reducing manual intervention.

Moving from batch processing to real-time streaming reduces latency and improves decision-making speed. At the same time, total cost of ownership must be assessed, including cloud costs, licenses, and staff resources, and compared to legacy systems. Cost efficiency is a clear indicator of ROI, particularly when paired with expanded capabilities.

2. Adoption & User Engagement

Adoption reflects whether the platform delivers real value. Metrics like active users, query volumes, and dashboard creation show how widely the modern data analytics platform is embraced across teams. Higher engagement suggests the platform is empowering decision-makers. User satisfaction surveys add context, measuring trust in data accuracy, ease of access, and whether employees recommend the platform to colleagues.

3. Business Impact & Outcomes

Ultimately, ROI is proven by measurable business results. Has sales forecasting accuracy improved? Has churn decreased? Has fraud detection reduced losses? Linking use cases to quantifiable outcomes, such as a 2% revenue boost from dynamic pricing or millions saved through predictive fraud detection, demonstrates the tangible benefits of a modern data platform strategy.

What Trends Are Shaping the Future of Modern Data Platforms?

The future of the modern data platform is being reshaped by emerging technologies and evolving business needs. Several key trends highlight how modern data platform architecture is advancing toward greater intelligence, flexibility, and speed.

1. Generative AI & LLMs

Large language models are transforming how users interact with data. Instead of writing complex queries, business users will ask natural language questions and receive insights with visualizations.

Beyond analytics, generative AI implementation is accelerating modern data platform strategy by automating tasks like data preparation, pipeline creation, and model generation, reducing time-to-insight significantly.

2. Automated Data Governance

Governance is becoming smarter with AI-powered tools that automatically classify sensitive data, recommend access policies, and monitor anomalies. This automation strengthens compliance while reducing manual effort, ensuring secure and reliable modern data infrastructure platforms.

3. Edge & Real-Time Analytics

With growing IoT adoption, data is increasingly processed at the edge—closer to where it’s generated. This reduces latency, lowers bandwidth costs, and enables faster decision-making.

The benefits of real-time analytics are becoming more evident as businesses gain the ability to react quickly to market or operational changes, and real-time processing is becoming a default feature of modern data analytics platforms.
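One common way edge processing lowers bandwidth is by summarizing readings locally and sending only the summaries upstream. The sketch below assumes hypothetical sensor readings and a fixed 60-second window; it illustrates the idea rather than any particular edge framework.

```python
from statistics import mean

def summarize_windows(readings, window_seconds=60):
    """Collapse (timestamp_seconds, value) readings into one summary
    per fixed time window."""
    windows = {}
    for ts, value in readings:
        window_start = int(ts // window_seconds) * window_seconds
        windows.setdefault(window_start, []).append(value)
    return [
        {"window_start": start, "count": len(vals), "mean": mean(vals), "max": max(vals)}
        for start, vals in sorted(windows.items())
    ]

# Hypothetical temperature readings from one sensor: (seconds since start, degrees C).
readings = [(0, 21.0), (15, 21.5), (45, 22.0), (70, 25.0), (110, 27.0)]
summaries = summarize_windows(readings)
print(f"{len(readings)} raw readings -> {len(summaries)} summaries sent upstream")
```

The trade-off is that detail discarded at the edge cannot be recovered centrally, so the window size and the chosen statistics should match what downstream analytics actually need.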

4. Composable Open-Source Platforms

The future favors modularity over monolithic systems. Companies are building modern data platforms from interoperable, open-source components like Apache Iceberg, enabling flexibility, reduced vendor lock-in, and easier integration with evolving technologies.

FAQs

What is a modern data platform and how does it work?

A modern data platform is a cloud-based architecture that ingests data from multiple sources, stores it in scalable repositories, processes and transforms it through automated pipelines, and makes it available for analytics and machine learning. The system works by separating storage from compute, allowing each to scale independently based on demand.

What are the key components of a modern data platform architecture?

Key components include data ingestion tools for collecting information from sources, cloud storage for raw and processed data, transformation engines for cleaning and modeling, analytics tools for visualization and reporting, and governance frameworks for security and compliance. These components integrate through APIs and shared metadata.

How does a modern data platform differ from a traditional data warehouse?

Modern platforms handle multiple data types including unstructured data, scale elastically in the cloud, support real-time processing alongside batch jobs, use pay-per-use pricing, and enable self-service analytics. Traditional warehouses typically require structured data, scale vertically with hardware limits, run scheduled batch jobs, involve high upfront costs, and depend on IT for most analytics.

What are the business benefits of implementing a modern data platform?

Organizations gain faster decision-making through real-time insights, reduced costs from efficient resource usage, improved collaboration via shared data access, better customer experiences from unified data views, scalability to handle growth, stronger governance for compliance, and the ability to innovate with AI and machine learning.

How does a modern data platform support AI and machine learning?

Modern platforms provide the data foundation ML models need through feature stores that organize training data, pipelines that keep data fresh, compute resources that scale for model training, and integration with ML frameworks. The platform handles data preparation and model deployment while maintaining governance over sensitive information.

How do data lakehouse and data mesh fit into modern data platform design?

A data lakehouse combines the flexibility of data lakes with the structure and performance of warehouses, allowing organizations to store all data types in one place. A data mesh distributes data ownership to domain teams while maintaining interoperability through shared standards. Both approaches work within modern platform architectures but suit different organizational structures and requirements.

What are the best practices for modern data platform governance?

Best practices include establishing clear data ownership, implementing role-based access controls, creating searchable data catalogs, monitoring data quality continuously, documenting data lineage, encrypting sensitive information, maintaining audit logs, and automating compliance checks where possible.

How can enterprises ensure security and compliance in a modern data platform?

Enterprises should encrypt data at rest and in transit, implement least-privilege access controls, monitor for suspicious activity, maintain comprehensive audit trails, classify sensitive data automatically, mask or tokenize personal information, establish clear data retention policies, and regularly test security controls.
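As a small illustration of masking and tokenizing, the sketch below uses Python's standard hmac and hashlib modules. It shows keyed hashing (a form of pseudonymization), which is simpler than the vault-based tokenization some products offer. The key, the record, and the masking rule are hypothetical.

```python
import hashlib
import hmac

def tokenize(value, secret_key):
    """Return a deterministic, keyed token for a value using HMAC-SHA256."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()

def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"
    return f"{local[0]}***@{domain}"

# Assumption: in practice the key would come from a secrets manager, not source code.
key = b"demo-key-not-for-production"
record = {"email": "ana.silva@example.com", "customer_id": "C-1001"}
safe_record = {
    "email": mask_email(record["email"]),
    "customer_token": tokenize(record["customer_id"], key),
}
print(safe_record)
```

Because the token is deterministic for a given key, analysts can still join tables on it without ever seeing the raw identifier.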

What challenges do organizations face when adopting a modern data platform?

Common challenges include integrating diverse data sources, maintaining data quality across systems, managing costs as usage grows, overcoming cultural resistance to change, finding staff with needed skills, balancing accessibility with security, ensuring regulatory compliance, and avoiding vendor lock-in.

How do you choose the right modern data platform technology stack?

Consider factors like existing cloud relationships, team expertise, data types and volumes, budget constraints, specific use cases, integration requirements with current tools, compliance needs, scalability requirements, and vendor stability. Most organizations benefit from starting with a core platform and adding specialized tools as needs become clear.

What are the top use cases for a modern data platform in business?

Popular use cases include customer analytics and personalization, real-time fraud detection, predictive maintenance, supply chain optimization, dynamic pricing, financial reporting and compliance, product recommendation engines, marketing attribution and ROI analysis, and operational dashboards.

Conclusion

A modern data platform is more than just a technology upgrade; it’s an enabler for scalability, innovation, and competitive advantage. Moving from traditional, on-premises systems to cloud-native modern data platform architectures empowers organizations to unlock real-time insights, streamline operations, and deliver superior customer experiences.

However, achieving success requires more than adopting tools. It demands a well-defined modern data platform strategy, careful execution, and strong governance to ensure long-term adaptability. Organizations that view their platform as a living system, continuously evolving with business needs, stand to gain the highest ROI.

This is where partnering with experts like Folio3 Data Services makes the difference. With proven experience in building modern data platforms, integrating advanced analytics, and enabling secure, compliant infrastructures, Folio3 helps businesses transform data complexity into actionable intelligence.

The data industry will keep evolving, but with the right foundation and a trusted partner, your organization can stay ahead of change and future-proof its data strategy.