Many organizations still rely on Microsoft SQL Server as the backbone of their data infrastructure. However, it’s becoming increasingly challenging to keep up. As datasets grow and real-time insights become business-critical, the limitations of on-prem SQL Server environments are more apparent: scaling is expensive, maintenance is time-consuming, and performance bottlenecks slow everything down.

According to Gartner, cloud adoption continues to surge: worldwide end-user spending on public cloud services is forecast to total $723.4 billion in 2025. That’s why many teams are now migrating from SQL Server to Snowflake, a cloud-native data platform designed for scalability, performance, and ease of use.

But moving to Snowflake isn’t just a lift-and-shift. Done right, it can unlock more intelligent analytics and lower costs. Done wrong, it can create more issues than it solves.

This blog walks you through SQL Server to Snowflake migration strategies, tools to consider (such as Snowpipe), and common pitfalls to avoid, so you can migrate with confidence and clarity.

What is MS SQL Server?

Microsoft SQL Server is a relational database management system (RDBMS) developed by Microsoft, primarily used to store, retrieve, and manage structured data. Widely adopted across industries, it supports T-SQL (Transact-SQL) for querying and managing databases and is often deployed on-premises as part of an enterprise’s IT infrastructure.

SQL Server has long been trusted for transaction processing, analytics, and business intelligence applications, particularly within Windows-based environments. It includes features like SSIS (SQL Server Integration Services) for data integration and ETL, SSRS (SQL Server Reporting Services) for report generation, and SQL Server Agent for job automation.

However, as data volumes increase and workloads become more complex, SQL Server can face limitations in scalability, cost efficiency, and real-time processing, especially in legacy on-premises deployments.

With growing demand for cloud-native performance and agility, many teams are evaluating whether it’s time to migrate from SQL Server to Snowflake for a more flexible and scalable data platform.

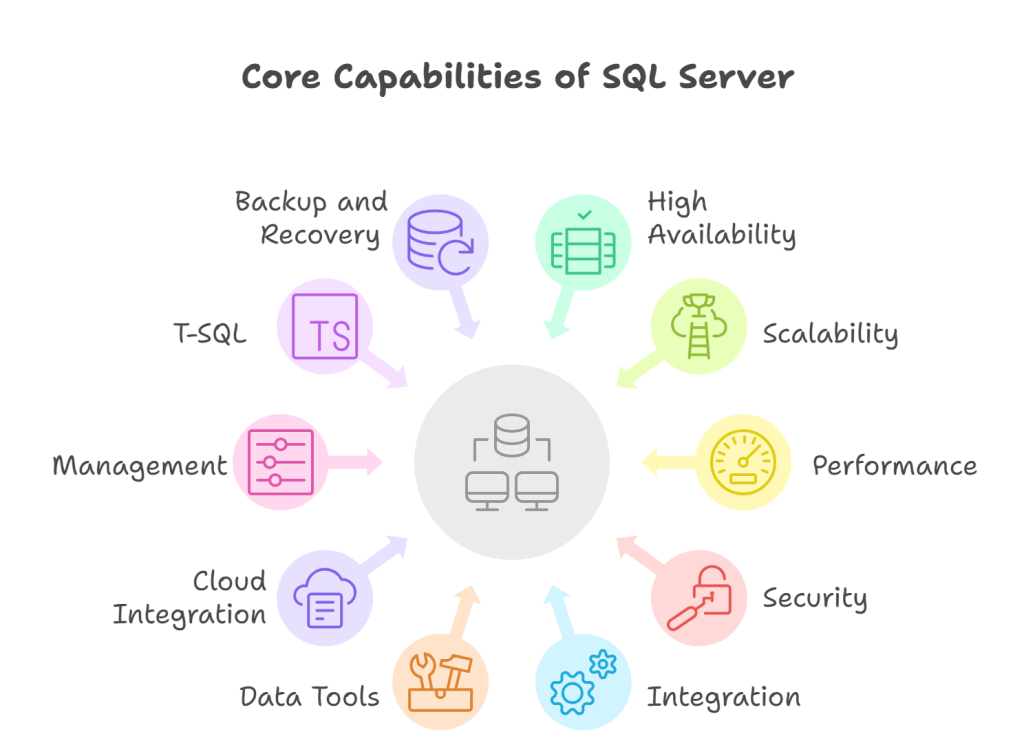

Key Features of SQL Server

Before diving into SQL Server to Snowflake migration, it’s worth understanding what SQL Server offers — and where it starts to fall short in today’s data demands.

Microsoft SQL Server is a trusted RDBMS, built to handle everything from transactional workloads to business intelligence at scale. While it’s a solid choice for many enterprises, its on-premises architecture and scaling limitations are often the very reasons teams begin exploring options like Snowflake as part of a modern data migration strategy.

High Availability

SQL Server offers failover clustering, database mirroring, and Always On availability groups to ensure systems remain operational. These features are crucial for reducing unplanned downtime, but they also introduce increased infrastructure and maintenance complexity.

Scalability

SQL Server scales vertically well, but horizontal scaling comes with friction: scale-out options such as readable secondary replicas or manual sharding add complexity. Expanding capacity often means upgrading hardware, which can be costly and time-consuming.

Performance

Optimized for both OLTP and OLAP workloads, SQL Server effectively handles moderate query loads. However, large-scale analytics or heavy concurrency can strain performance, especially without constant tuning.

Security

Security is one of SQL Server’s strongest points, with encryption, role-based access, data masking, and auditing features that meet enterprise compliance requirements.

Integration

SQL Server integrates seamlessly with Microsoft services, including Azure, Power BI, and Excel. Its compatibility with SSIS, SSAS, and SSRS makes it valuable for full-stack Microsoft environments.

Data Tools

It includes a range of developer tools, such as SQL Server Management Studio (SSMS) and SQL Server Data Tools (SSDT) for Visual Studio, supporting everything from ETL to data modeling.

Cloud Integration

With Azure SQL Database, organizations can run SQL Server workloads in the cloud, but hybrid deployments can become expensive and complicated to manage compared to Snowflake’s cloud-native model. Snowflake also integrates with its own AI capabilities, such as Snowflake Cortex, to deliver more scalable and intelligent data solutions.

Management

SQL Server offers built-in automation, monitoring, and indexing tools. Still, maintaining high performance requires ongoing DBA oversight, especially at scale.

T-SQL

Transact-SQL (T-SQL) is SQL Server’s powerful procedural extension, enabling developers to write complex queries and stored procedures natively.

Backup and Recovery

SQL Server includes flexible backup, point-in-time recovery, and high-grade disaster recovery tools. But as data grows, so do storage costs and backup complexity.

What is Snowflake?

Snowflake is a cloud-native data platform that rethinks how businesses store, access, and use data at scale. Unlike traditional databases, it separates storage and compute, allowing companies to scale resources independently based on their workload. This architecture has made it a go-to choice for organizations dealing with rapidly growing data and unpredictable query demands.

According to a study, businesses using Snowflake saw a 612% ROI over three years, primarily due to faster analytics and reduced data management overhead. The platform supports a wide range of workloads, including data warehousing, data lakes, data engineering, and even AI/ML, all within a single SQL interface.

Because it’s cloud-agnostic, Snowflake runs on AWS, Azure, and Google Cloud, enabling teams to avoid lock-in to a single cloud provider and collaborate easily across regions. Features like Time Travel, automatic scaling, and role-based security appeal to both data engineers and finance teams. Many organizations also turn to Snowflake consulting services to accelerate implementation, optimize performance, and align the platform with their specific business goals.

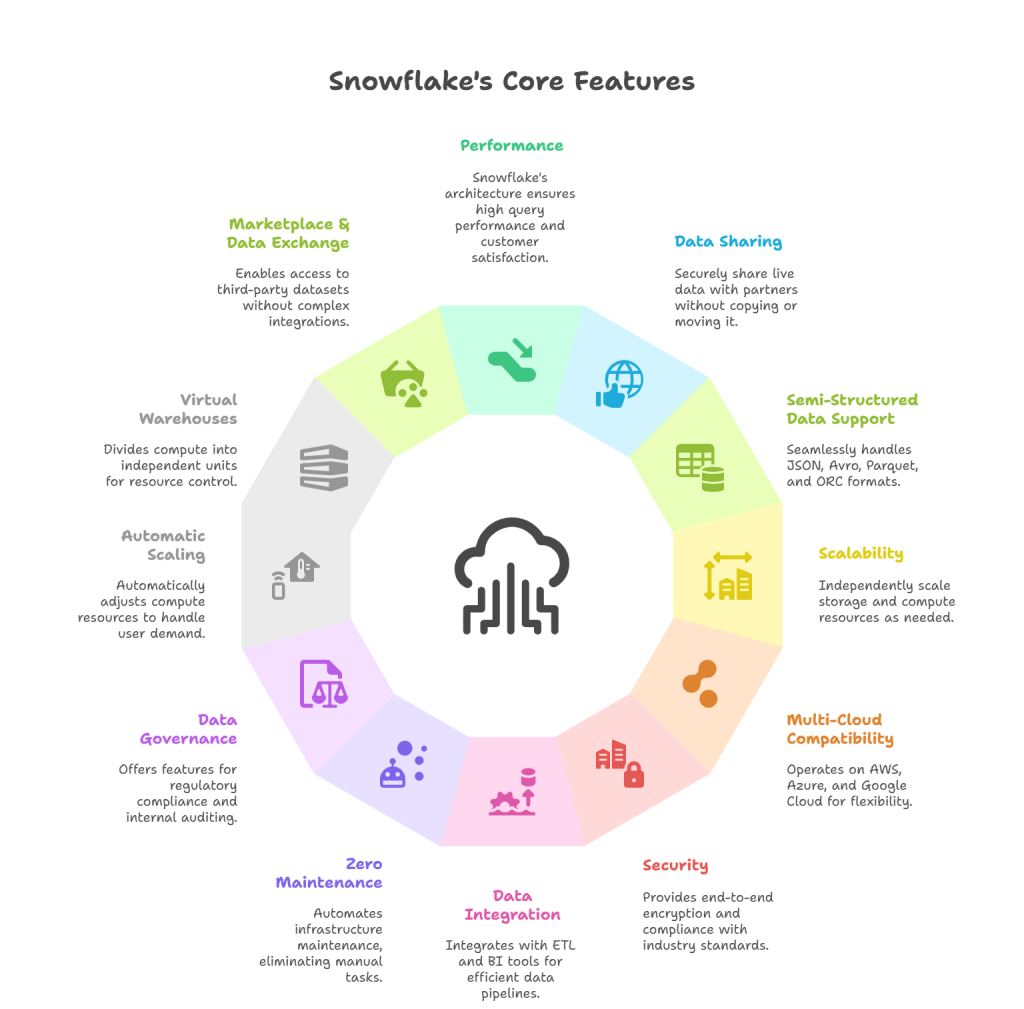

Key Features of Snowflake

Snowflake isn’t just another cloud data platform—it’s a reimagined model for how modern organizations store, process, and use data at scale. Below are some of the key Snowflake features that make it an attractive destination for those planning a migration from SQL Server to Snowflake.

Performance

Snowflake automatically optimizes query performance behind the scenes using its multi-cluster architecture and result caching. According to the 2023 Dresner Advisory report, Snowflake ranks among the top platforms in customer experience and performance satisfaction.

Data Sharing

Snowflake’s Secure Data Sharing allows organizations to instantly share live data with partners and vendors without copying or moving it, something that’s not natively possible in traditional SQL Server environments.

Support for Semi-Structured Data

With native support for JSON, Avro, Parquet, and ORC formats, Snowflake handles semi-structured data with the same ease as structured tables using standard SQL.

Scalability

Because storage and compute are separate, businesses can scale each independently, a core advantage of the Snowflake architecture. This is a significant improvement over the fixed capacity constraints of traditional on-premises databases like SQL Server.

Multi-Cloud Compatibility

Snowflake runs on AWS, Azure, and Google Cloud, providing companies with flexibility in choosing providers or operating in multi-cloud environments, a capability highlighted in Gartner’s Magic Quadrant for Cloud DBMS.

Security

Snowflake includes end-to-end encryption, multi-factor authentication, and role-based access controls, and supports compliance with HIPAA, PCI DSS, and SOC 2 Type II requirements.

Data Integration

Snowflake integrates smoothly with data integration and transformation tools like Fivetran, Talend, and dbt, as well as BI platforms such as Tableau and Power BI. This simplifies both real-time and batch data ingestion pipelines, making Snowflake data integration efficient across various tools and workflows.

Zero Maintenance

Unlike SQL Server, Snowflake does not require you to create indexes or manage statistics, and it needs far less manual tuning. Infrastructure maintenance (patching, upgrades, and scaling) is handled automatically.

Data Governance and Access Control

Snowflake offers detailed governance features, including object tagging, access history, and dynamic data masking to support regulatory compliance and internal auditing. These capabilities make it easier for organizations to implement a robust data governance strategy that ensures visibility, control, and accountability across all data assets.

Automatic Scaling and Concurrency

When many users or queries hit the system, a multi-cluster warehouse (available in Enterprise Edition and higher) can automatically start additional compute clusters, reducing query wait times without manual intervention.

Virtual Warehouses

Compute in Snowflake is divided into independent units called virtual warehouses, which can be started, resized, or suspended based on workload, allowing for fine-tuned resource usage and cost control.
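To make this concrete, the Python helper below is a minimal sketch that builds a `CREATE WAREHOUSE` statement using Snowflake's `WAREHOUSE_SIZE`, `AUTO_SUSPEND`, `AUTO_RESUME`, `INITIALLY_SUSPENDED`, `MIN_CLUSTER_COUNT`, `MAX_CLUSTER_COUNT`, and `SCALING_POLICY` properties. The warehouse names (`etl_wh`, `reporting_wh`) and default values are hypothetical, and the multi-cluster settings assume an edition that supports multi-cluster warehouses.

```python
# Minimal sketch: build CREATE WAREHOUSE DDL for one workload.
# Warehouse names and default values are hypothetical; tune them per workload.
VALID_SIZES = {"XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE", "XXLARGE"}


def create_warehouse_sql(name: str, size: str = "XSMALL", auto_suspend_s: int = 60,
                         min_clusters: int = 1, max_clusters: int = 1) -> str:
    size = size.upper()
    if size not in VALID_SIZES:
        raise ValueError(f"Unsupported size: {size}")
    if not 1 <= min_clusters <= max_clusters:
        raise ValueError("Expected 1 <= min_clusters <= max_clusters")
    props = [
        f"WAREHOUSE_SIZE = '{size}'",
        f"AUTO_SUSPEND = {auto_suspend_s}",  # seconds idle before suspending
        "AUTO_RESUME = TRUE",                # resume when a query arrives
        "INITIALLY_SUSPENDED = TRUE",        # no credits used until first query
    ]
    if max_clusters > 1:
        # Multi-cluster scale-out for concurrency (Enterprise Edition or higher).
        props += [
            f"MIN_CLUSTER_COUNT = {min_clusters}",
            f"MAX_CLUSTER_COUNT = {max_clusters}",
            "SCALING_POLICY = 'STANDARD'",
        ]
    return f"CREATE WAREHOUSE IF NOT EXISTS {name} WITH\n  " + "\n  ".join(props) + ";"


print(create_warehouse_sql("etl_wh", "small"))
print(create_warehouse_sql("reporting_wh", "medium", max_clusters=3))
```

Keeping ETL and reporting on separate warehouses, as in the two calls above, lets each one be sized, scaled out, and suspended independently.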

Marketplace & Data Exchange

Snowflake Marketplace (formerly the Snowflake Data Marketplace) allows organizations to discover and use third-party datasets without complex integrations, unlocking new analytics opportunities with minimal setup.

What are the Methods to Connect SQL Server to Snowflake?

Moving from SQL Server to Snowflake isn’t a one-size-fits-all process. The right connection method depends on your team’s tech stack, the volume of data, update frequency, and desired level of automation. Below are four standard methods for migrating or replicating data from SQL Server to Snowflake, each with its advantages and considerations.

Method 1 – Using Hevo Data to Connect SQL Server to Snowflake

Hevo Data is a no-code data pipeline platform that supports real-time data replication from Microsoft SQL Server to Snowflake. It automatically detects schema changes, supports incremental loading, and has built-in error handling.

How does it work?

- Choose SQL Server as the source and Snowflake as the destination.

- Configure credentials and select tables to sync.

- Enable real-time sync for ongoing replication.

This method is ideal for teams that want to avoid managing infrastructure or building their own ETL process. Hevo also provides data transformation capabilities and detailed monitoring dashboards.

Method 2 – Using SnowSQL to Connect Microsoft SQL Server to Snowflake

SnowSQL is Snowflake’s command-line client, allowing you to connect and load data using SQL queries and scripts. While SnowSQL itself doesn’t directly connect to SQL Server, you can export data from SQL Server into flat files (like CSVs), then use SnowSQL to load them into Snowflake.

Steps involved

- Use the bcp utility or SQL Server Management Studio to export data from SQL Server into flat files.

- Transform the data if necessary (via Python or bash scripts).

- Use SnowSQL to upload the files to a Snowflake stage with PUT, then load them into tables with COPY INTO.
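The steps above can be sketched as follows, assuming a Linux or macOS host with `bcp` (from the SQL Server command-line tools) and SnowSQL installed, trusted authentication to SQL Server, and a pipe-delimited export. The server, database, table, and file names are hypothetical. The script only builds the `bcp` argument list and a SnowSQL script that uses `PUT` (to the target table's stage) and `COPY INTO`; it does not run either.

```python
import posixpath


def bcp_export_args(server: str, database: str, source_table: str, out_file: str) -> list[str]:
    """Argument list for subprocess.run(): export one table to a delimited text file."""
    return [
        "bcp", source_table, "out", out_file,
        "-S", server,    # SQL Server instance
        "-d", database,  # source database
        "-T",            # trusted connection; use -U for SQL Server logins instead
        "-c",            # character (text) format
        "-t", "|",       # field terminator; choose one that never appears in the data
    ]


def snowsql_load_script(local_file: str, target_table: str) -> str:
    """SnowSQL script: upload the file to the table's stage, then bulk-load it."""
    file_name = posixpath.basename(local_file)
    return "\n".join([
        # PUT gzips the file by default (AUTO_COMPRESS = TRUE), adding a .gz suffix.
        f"PUT 'file://{local_file}' @%{target_table} AUTO_COMPRESS = TRUE;",
        f"COPY INTO {target_table}",
        f"  FROM @%{target_table}/{file_name}.gz",
        "  FILE_FORMAT = (TYPE = CSV FIELD_DELIMITER = '|')",
        "  ON_ERROR = ABORT_STATEMENT;",
    ])


print(bcp_export_args("sqlprod01", "Sales", "dbo.Orders", "/tmp/exports/orders.txt"))
print(snowsql_load_script("/tmp/exports/orders.txt", "orders"))
```

In practice you would pass the first list to `subprocess.run(..., check=True)`, save the generated script to a file, and run it with `snowsql -c <connection_name> -f <script_file>`, scheduled by cron or another job runner.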

This method gives you control but requires manual scripting and scheduling. It’s best suited for periodic batch jobs.

Method 3 – Using Custom ETL Scripts

Some organizations prefer to write their own ETL pipelines using Python (with libraries such as pyodbc or pymssql) or tools like Apache NiFi, Airflow, or Talend.

Steps involved

- Establish a connection to SQL Server using a driver.

- Extract and transform data using custom logic.

- Connect to Snowflake via the snowflake-connector-python or JDBC.

- Load data using the PUT and COPY INTO commands.
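The extract, transform, and load steps above can be sketched with any DB-API 2.0 driver. In this minimal sketch, `extract_batches` streams rows with `cursor.fetchmany()`, a hypothetical `transform` step trims strings, `write_batch_csv` writes each batch to CSV, and `load_statements` returns the `PUT` and `COPY INTO` statements to run through a snowflake-connector-python cursor. For illustration it uses Python's built-in `sqlite3` as a stand-in source; with SQL Server you would pass a `pyodbc` cursor instead. Table and file names are hypothetical.

```python
import csv
import os
import sqlite3
import tempfile
from typing import Iterator, Sequence


def extract_batches(cursor, query: str, batch_size: int = 10_000) -> Iterator[list]:
    """Stream query results in batches; works with any DB-API 2.0 cursor (pyodbc, sqlite3)."""
    cursor.execute(query)
    while True:
        rows = cursor.fetchmany(batch_size)
        if not rows:
            break
        yield rows


def transform(row: Sequence) -> tuple:
    """Hypothetical cleanup step: trim whitespace from string values."""
    return tuple(v.strip() if isinstance(v, str) else v for v in row)


def write_batch_csv(rows: list, path: str) -> None:
    """Write one transformed batch as a CSV file (values quoted only when needed)."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        csv.writer(f).writerows(transform(r) for r in rows)


def load_statements(path: str, table: str) -> list[str]:
    """PUT + COPY INTO statements to run via a Snowflake cursor's execute()."""
    name = os.path.basename(path)
    return [
        f"PUT 'file://{path}' @%{table}",  # compressed to <name>.gz by default
        f"COPY INTO {table} FROM @%{table}/{name}.gz "
        "FILE_FORMAT = (TYPE = CSV FIELD_OPTIONALLY_ENCLOSED_BY = '\"')",
    ]


if __name__ == "__main__":
    # sqlite3 stands in for a pyodbc connection to SQL Server.
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
    src.executemany("INSERT INTO customers VALUES (?, ?)", [(1, " Ada "), (2, "Linus")])
    out_dir = tempfile.mkdtemp()
    batches = extract_batches(src.cursor(), "SELECT id, name FROM customers", batch_size=1)
    for i, batch in enumerate(batches):
        path = os.path.join(out_dir, f"customers_{i}.csv")
        write_batch_csv(batch, path)
        for stmt in load_statements(path, "customers"):
            print(stmt)  # with Snowflake: pass stmt to your Snowflake cursor
```

Streaming in batches keeps memory flat for large tables, and writing one file per batch lets a failed load be retried without re-extracting everything.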

This approach offers flexibility for complex transformations, but it also requires dedicated engineering resources and ongoing monitoring for potential failures.

Method 4 – SQL Server to Snowflake Using Snowpipe

Snowpipe is Snowflake’s continuous data ingestion service. While it doesn’t directly pull from SQL Server, you can automate the pipeline by:

- Exporting SQL Server data to CSV or JSON files.

- Writing those files to cloud storage (e.g., Amazon S3) that a Snowflake external stage points to.

- Setting up Snowpipe to ingest data automatically as new files land.
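A hedged sketch of both ends of this pipeline: `export_key` gives each export batch a unique, timestamped object name, and `snowpipe_ddl` emits the `CREATE STAGE` and `CREATE PIPE ... AUTO_INGEST = TRUE` statements for CSV files in an S3 location. It assumes a storage integration (the hypothetical `s3_export_int`) already exists and that S3 event notifications are routed to the pipe; the bucket, stage, pipe, and table names are placeholders.

```python
from datetime import datetime, timezone


def export_key(table: str, when: datetime) -> str:
    """Object key for one export batch (expects a UTC datetime).
    A new, timestamped name per batch keeps each export a distinct file for Snowpipe."""
    return f"{table}/{table}_{when:%Y%m%dT%H%M%SZ}.csv"


def snowpipe_ddl(table: str, bucket_url: str, integration: str) -> str:
    """CREATE STAGE + CREATE PIPE for CSV files that include a header row."""
    stage, pipe = f"{table}_stage", f"{table}_pipe"
    return (
        f"CREATE STAGE IF NOT EXISTS {stage}\n"
        f"  URL = '{bucket_url}'\n"
        f"  STORAGE_INTEGRATION = {integration}\n"
        "  FILE_FORMAT = (TYPE = CSV SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = '\"');\n\n"
        f"CREATE PIPE IF NOT EXISTS {pipe}\n"
        "  AUTO_INGEST = TRUE  -- load files as storage event notifications arrive\n"
        f"  AS COPY INTO {table} FROM @{stage};"
    )


print(export_key("orders", datetime.now(timezone.utc)))
print(snowpipe_ddl("orders", "s3://my-export-bucket/orders/", "s3_export_int"))
```

After the pipe is created, `SHOW PIPES` reports its `notification_channel`, which is the queue the bucket's event notifications should target. JSON exports would need a different file format and a column mapping in the `COPY INTO`.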

This approach leverages Snowflake data ingestion capabilities to streamline the flow of information. Pairing it with a scheduled SQL Server export script can simulate near-real-time replication, making it ideal for event-driven or micro-batch ingestion needs.

Best Practices for a Successful Migration

A smooth SQL Server to Snowflake migration takes more than just copying data between systems. Without the proper groundwork, businesses risk facing performance issues, cost overruns, or gaps in data governance. The practices below are based on common challenges organizations encounter when migrating enterprise databases to Snowflake, with a focus on ensuring scalability, governance, and Snowflake performance optimization from the start.

1. Data Governance

Before initiating the migration, it’s critical to take inventory of your data: what exists, who owns it, and how it’s used. Ensure that sensitive information is appropriately tagged and that access control policies are reviewed and mapped to Snowflake’s role-based access control (RBAC) system. Snowflake supports dynamic data masking and object tagging, which can help organizations maintain compliance with frameworks such as GDPR and HIPAA.

Tip: Establish data classification guidelines early so that new data follows consistent governance after migration.
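One way to start the inventory and tagging work is a name-based scan of the source catalog (for example, rows from SQL Server's `INFORMATION_SCHEMA.COLUMNS`) that proposes Snowflake tag and masking-policy statements for review. This is a minimal sketch: the regex patterns, the `pii_type` tag, the `PII_READER` role, and the `mask_pii_string` policy are hypothetical. Name matching will miss sensitive columns with unrevealing names, and the policy assumes the flagged columns are string-typed.

```python
import re

# Hypothetical name-based patterns; real classification should also profile values.
PII_PATTERNS = {
    "email": re.compile(r"e[-_]?mail", re.I),
    "phone": re.compile(r"phone|mobile", re.I),
    "national_id": re.compile(r"ssn|national_?id|tax_?id", re.I),
}

# Hypothetical policy: only the PII_READER role sees clear text (string columns only).
POLICY_DDL = (
    "CREATE MASKING POLICY IF NOT EXISTS mask_pii_string AS (val STRING) RETURNS STRING ->\n"
    "  CASE WHEN CURRENT_ROLE() IN ('PII_READER') THEN val ELSE '***MASKED***' END;"
)


def classify(columns: list[tuple[str, str]]) -> dict[tuple[str, str], str]:
    """Map (table, column) to a PII category when the column name matches a pattern."""
    found = {}
    for table, column in columns:
        for category, pattern in PII_PATTERNS.items():
            if pattern.search(column):
                found[(table, column)] = category
                break
    return found


def governance_sql(columns: list[tuple[str, str]]) -> list[str]:
    """Tag and mask every flagged column; review the output before running it."""
    stmts = ["CREATE TAG IF NOT EXISTS pii_type;", POLICY_DDL]
    for (table, column), category in classify(columns).items():
        stmts.append(f"ALTER TABLE {table} MODIFY COLUMN {column} SET TAG pii_type = '{category}';")
        stmts.append(f"ALTER TABLE {table} MODIFY COLUMN {column} SET MASKING POLICY mask_pii_string;")
    return stmts


for stmt in governance_sql([("customers", "email_address"), ("customers", "first_name")]):
    print(stmt)
```

Generating statements rather than executing them keeps a human review step between classification and enforcement.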

2. Performance Tuning

A direct lift-and-shift approach often results in poor performance, because SQL Server and Snowflake handle computation differently: queries that perform well in SQL Server might not translate directly. Analyze your SQL Server execution plans to find expensive queries, then refactor those queries using Snowflake best practices, such as right-sizing virtual warehouses and using result caching efficiently.

Tip: Run side-by-side performance benchmarks before switching production workloads.
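A side-by-side benchmark can be as simple as running the same logical query several times on each system and comparing median latencies. The harness below is a hedged sketch: `run_sqlserver` and `run_snowflake` are hypothetical stubs that you would replace with functions that execute the query through `pyodbc` and `snowflake-connector-python` and fetch all rows. Because Snowflake's result cache can make repeated identical queries return almost instantly, you may want to run `ALTER SESSION SET USE_CACHED_RESULT = FALSE` in the benchmark session.

```python
import statistics
import time
from typing import Callable


def benchmark(run_query: Callable[[], object], runs: int = 5, warmup: int = 1) -> dict:
    """Time a query callable; report median and worst-case wall-clock seconds."""
    for _ in range(warmup):
        run_query()  # untimed: absorbs connection setup and first-run compilation
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        run_query()
        timings.append(time.perf_counter() - start)
    return {"runs": runs, "median_s": statistics.median(timings), "max_s": max(timings)}


def compare(name: str, sqlserver_query: Callable[[], object],
            snowflake_query: Callable[[], object], runs: int = 5) -> str:
    old = benchmark(sqlserver_query, runs)
    new = benchmark(snowflake_query, runs)
    ratio = old["median_s"] / new["median_s"] if new["median_s"] > 0 else float("inf")
    return (f"{name}: SQL Server {old['median_s']:.3f}s, "
            f"Snowflake {new['median_s']:.3f}s, ratio {ratio:.2f}")


# Hypothetical stubs standing in for real query calls on each system.
def run_sqlserver() -> None:
    time.sleep(0.02)


def run_snowflake() -> None:
    time.sleep(0.01)


print(compare("daily_sales_report", run_sqlserver, run_snowflake, runs=3))
```

Medians are less sensitive to one-off network hiccups than means, while the maximum flags queries whose worst case matters for dashboards.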

3. Cost Management

Snowflake bills compute per second (with a 60-second minimum each time a warehouse starts or resumes), which can either save money or create unexpected expenses if not adequately monitored. Break your workload into logical groups and assign them to different virtual warehouses. Consider enabling auto-suspend and auto-resume on each warehouse to prevent unnecessary billing during periods of inactivity. Incorporating cost-efficient Snowflake reporting into your monitoring strategy can also help track usage trends and ensure spend transparency across teams.
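The cost effect of auto-suspend comes down to simple arithmetic. The sketch below estimates credits for a series of warehouse sessions using the standard per-hour credit rates by warehouse size (1 credit per hour for X-Small, doubling with each size) and the 60-second minimum charged each time a warehouse resumes. It assumes sessions do not overlap, ignores cloud-services and serverless charges, and treats the price per credit as a hypothetical input, since it varies by edition, cloud, and region.

```python
# Standard warehouse credit rates per hour; credit prices vary by edition and region.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8,
                    "XLARGE": 16, "XXLARGE": 32, "XXXLARGE": 64, "X4LARGE": 128}


def billed_seconds(active_seconds: float) -> float:
    """Per-second billing with a 60-second minimum each time a warehouse resumes."""
    return max(60.0, active_seconds)


def estimate_credits(size: str, sessions: list[float]) -> float:
    """Credits for non-overlapping resume-to-suspend sessions, each given in seconds.

    A session's length is its query time plus the idle time before AUTO_SUSPEND fires.
    """
    rate = CREDITS_PER_HOUR[size.upper()]
    return sum(rate * billed_seconds(s) / 3600 for s in sessions)


def estimate_cost(size: str, sessions: list[float], price_per_credit: float) -> float:
    return estimate_credits(size, sessions) * price_per_credit


# 20 separate 30-second refreshes on a MEDIUM warehouse, two auto-suspend settings.
short = estimate_credits("MEDIUM", [30 + 60] * 20)   # AUTO_SUSPEND = 60
long_ = estimate_credits("MEDIUM", [30 + 600] * 20)  # AUTO_SUSPEND = 600
print(f"{short:.1f} vs {long_:.1f} credits")
```

With these assumptions, the same 20 refreshes cost about 2 credits with a 60-second auto-suspend but about 14 credits with a 10-minute one, because most of the longer sessions is idle time.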

Tip: Use Snowflake’s resource monitors to set spending limits and alerts. Some teams report cutting compute costs by 30% or more within the first 60 days simply by optimizing usage schedules.
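A resource monitor like the one the tip describes takes only a few statements. The helper below is a sketch that builds `CREATE RESOURCE MONITOR` and `ALTER WAREHOUSE ... SET RESOURCE_MONITOR` statements; the monitor name, credit quota, warehouse names, and thresholds are hypothetical. By default, only account administrators (the ACCOUNTADMIN role) can create resource monitors.

```python
def resource_monitor_sql(monitor: str, monthly_credits: int, warehouses: list[str],
                         notify_pct: int = 80, suspend_pct: int = 100) -> list[str]:
    """Monthly credit quota with a notify threshold and a suspend threshold."""
    if not 0 < notify_pct < suspend_pct:
        raise ValueError("notify_pct must be positive and below suspend_pct")
    # DO SUSPEND lets running statements finish; SUSPEND_IMMEDIATE would cancel them.
    stmts = [
        f"CREATE OR REPLACE RESOURCE MONITOR {monitor} WITH\n"
        f"  CREDIT_QUOTA = {monthly_credits}\n"
        "  FREQUENCY = MONTHLY\n"
        "  START_TIMESTAMP = IMMEDIATELY\n"
        "  TRIGGERS\n"
        f"    ON {notify_pct} PERCENT DO NOTIFY\n"
        f"    ON {suspend_pct} PERCENT DO SUSPEND;"
    ]
    stmts += [f"ALTER WAREHOUSE {wh} SET RESOURCE_MONITOR = {monitor};" for wh in warehouses]
    return stmts


for stmt in resource_monitor_sql("migration_monthly", 500, ["etl_wh", "reporting_wh"]):
    print(stmt)
```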

4. Training and Documentation

SQL Server DBAs and analysts may not be familiar with Snowflake’s cloud-native structure. Invest in team training to cover key concepts like multi-cluster compute, Snowpipe, SnowSQL, and Time Travel. Update your internal documentation to reflect new processes, access controls, and data pipelines.

Tip: Keep a migration runbook that includes data validation checks, rollback options, and contact information for each migration phase—crucial steps in any Snowflake data engineering workflow.
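Data validation checks in such a runbook often start with a row count and an order-independent fingerprint per table. The sketch below computes both from rows already fetched from each side (for example, through `pyodbc` and `snowflake-connector-python`). It assumes values were normalized to the same Python types and text formats first, since differences such as decimal scale or timestamp precision would otherwise show up as false mismatches. The function names are hypothetical.

```python
import hashlib
from typing import Iterable, Sequence


def table_fingerprint(rows: Iterable[Sequence]) -> tuple[int, str]:
    """Return (row_count, order-independent digest) for a table's rows."""
    count = 0
    acc = 0
    for row in rows:
        # Canonical text form of the row; NULLs become a fixed marker.
        text = "\x1f".join("\\N" if v is None else str(v) for v in row)
        digest = hashlib.sha256(text.encode("utf-8")).digest()
        # Summing per-row hashes makes the result independent of row order.
        acc = (acc + int.from_bytes(digest[:8], "big")) % (1 << 64)
        count += 1
    return count, f"{acc:016x}"


def validate(table: str, source_rows, target_rows) -> list[str]:
    """Compare source and target rows; an empty list means the checks passed."""
    src_count, src_hash = table_fingerprint(source_rows)
    tgt_count, tgt_hash = table_fingerprint(target_rows)
    if src_count != tgt_count:
        return [f"{table}: row count {src_count} (source) vs {tgt_count} (target)"]
    if src_hash != tgt_hash:
        return [f"{table}: counts match but content fingerprints differ"]
    return []


rows = [(1, "Ada", None), (2, "Linus", 3.5)]
print(validate("customers", rows, list(reversed(rows))))
```

The order-independent fingerprint means source and target queries do not need matching `ORDER BY` clauses, which matters for very large tables.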

Common Microsoft SQL Server to Snowflake Migration Challenges

Migrating from Microsoft SQL Server to Snowflake might seem like a technical upgrade, but it often introduces new problems that aren’t obvious at first glance. Organizations hoping to reduce costs or scale analytics often hit roadblocks if they overlook compatibility or architectural differences.

Below are some of the most common challenges to watch out for when you migrate SQL Server to Snowflake:

1. Data Type Mismatches

SQL Server supports several proprietary data types that don’t map directly to Snowflake. For instance, DATETIMEOFFSET, TEXT, and MONEY need conversion to Snowflake equivalents such as TIMESTAMP_TZ, VARCHAR, and NUMBER(19,4), respectively. Failing to address these mismatches early can lead to data corruption or failed pipeline loads.

Tip: Use a data profiling tool before migration to identify incompatible columns and validate mappings post-load.
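A mapping table is a useful artifact to agree on before any data moves. The function below is a starting-point sketch, not an exhaustive or authoritative mapping: it covers common SQL Server types, keeps precision, scale, and length arguments where Snowflake has an equivalent, and raises an error for anything unmapped so it gets reviewed by hand. Note one trap it encodes: SQL Server `TIMESTAMP` is a synonym for `ROWVERSION`, an 8-byte row-version counter rather than a date, so it maps to `BINARY(8)`.

```python
import re

# Starting-point mapping (hypothetical choices where several targets are valid).
BASE_TYPES = {
    "BIT": "BOOLEAN", "TINYINT": "NUMBER(3,0)", "SMALLINT": "NUMBER(5,0)",
    "INT": "NUMBER(10,0)", "BIGINT": "NUMBER(19,0)",
    "MONEY": "NUMBER(19,4)", "SMALLMONEY": "NUMBER(10,4)",
    "FLOAT": "FLOAT", "REAL": "FLOAT",
    "DATE": "DATE", "TIME": "TIME",
    "DATETIME": "TIMESTAMP_NTZ", "DATETIME2": "TIMESTAMP_NTZ",
    "SMALLDATETIME": "TIMESTAMP_NTZ", "DATETIMEOFFSET": "TIMESTAMP_TZ",
    "TEXT": "VARCHAR", "NTEXT": "VARCHAR", "XML": "VARCHAR",
    "UNIQUEIDENTIFIER": "VARCHAR(36)", "IMAGE": "BINARY", "SQL_VARIANT": "VARIANT",
    # SQL Server TIMESTAMP/ROWVERSION is a row-version counter, not a date.
    "TIMESTAMP": "BINARY(8)", "ROWVERSION": "BINARY(8)",
}
STRING_TYPES = {"CHAR", "VARCHAR", "NCHAR", "NVARCHAR"}
BINARY_TYPES = {"BINARY", "VARBINARY"}
_TYPE_RE = re.compile(r"^\s*(\w+)\s*(?:\(\s*([^)]*)\s*\))?\s*$")


def map_sqlserver_type(sql_type: str) -> str:
    """Translate a SQL Server column type such as 'DECIMAL(18,2)' to a Snowflake type."""
    m = _TYPE_RE.match(sql_type)
    if not m:
        raise ValueError(f"Unrecognized type: {sql_type!r}")
    name = m.group(1).upper()
    args = (m.group(2) or "").replace(" ", "").upper()
    if name in ("DECIMAL", "NUMERIC"):
        return f"NUMBER({args})" if args else "NUMBER(18,0)"  # SQL Server default (18,0)
    if name in STRING_TYPES:
        return "VARCHAR" if args in ("", "MAX") else f"VARCHAR({args})"
    if name in BINARY_TYPES:
        return "BINARY" if args in ("", "MAX") else f"BINARY({args})"
    if name in ("DATETIME2", "DATETIMEOFFSET", "TIME") and args:
        return f"{BASE_TYPES[name]}({args})"  # keep fractional-second precision
    if name in BASE_TYPES:
        return BASE_TYPES[name]
    raise ValueError(f"No mapping defined for {sql_type!r}; review manually")


for t in ["MONEY", "DATETIMEOFFSET(7)", "NVARCHAR(MAX)", "DECIMAL(18, 2)", "TIMESTAMP"]:
    print(f"{t:20} -> {map_sqlserver_type(t)}")
```

Raising on unknown types (for example `HIERARCHYID` or `GEOGRAPHY`) is deliberate: silent defaults are how mismatches slip into production loads.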

2. Stored Procedure Conversion

Snowflake stored procedures work differently from T-SQL in SQL Server. They can be written in Snowflake Scripting (SQL), JavaScript, Python, Java, or Scala, and while Snowflake Scripting offers cursors, temporary tables, and exception handling, their syntax and behavior differ from the T-SQL features many business processes depend on. As a result, procedures often need partial or complete rewrites rather than direct conversion.

Tip: Prioritize business-critical procedures and evaluate them for re-architecture instead of direct conversion.
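To triage which procedures need the most rework, it can help to scan their definitions (for example, the `definition` column of SQL Server's `sys.sql_modules`) for constructs that rarely convert one-to-one. The sketch below counts a few such constructs with regular expressions. The pattern list is a hypothetical starting point, the regexes will also match text inside comments and string literals, and the score is only a rough triage signal.

```python
import re

# Hypothetical triage patterns for T-SQL constructs that usually need rework.
CONSTRUCTS = {
    "cursor": re.compile(r"\bDECLARE\s+@?\w+\s+CURSOR\b", re.I),
    "temp_table": re.compile(r"#\w+"),
    "try_catch": re.compile(r"\bBEGIN\s+TRY\b", re.I),
    "global_var": re.compile(r"@@\w+"),  # e.g. @@ROWCOUNT, @@ERROR
    "dynamic_sql": re.compile(r"\bsp_executesql\b|\bEXEC(?:UTE)?\s*\(", re.I),
    "transaction": re.compile(r"\bBEGIN\s+TRAN(?:SACTION)?\b", re.I),
}


def triage(procedures: dict[str, str]) -> list[tuple[str, int, dict[str, int]]]:
    """Return (name, total hits, per-construct hits) sorted most complex first."""
    report = []
    for name, body in procedures.items():
        hits = {k: len(p.findall(body)) for k, p in CONSTRUCTS.items()}
        hits = {k: v for k, v in hits.items() if v}
        report.append((name, sum(hits.values()), hits))
    return sorted(report, key=lambda r: r[1], reverse=True)


procs = {
    "usp_lookup": "SELECT * FROM dbo.Orders WHERE id = @id;",
    "usp_nightly": "BEGIN TRY DECLARE c CURSOR FOR SELECT id FROM #work; "
                   "SELECT @@ROWCOUNT; END TRY BEGIN CATCH END CATCH",
}
for name, score, hits in triage(procs):
    print(name, score, hits)
```

Procedures at the top of the list, weighted by business criticality, are the natural candidates for re-architecture rather than line-by-line conversion.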

3. Performance Issues

One of the main goals of moving to Snowflake is improved performance, but that doesn’t happen automatically. Unlike SQL Server, Snowflake separates compute from storage. Without configuring virtual warehouses properly or understanding query pruning, performance can suffer. Migrated queries often require tuning to match Snowflake’s parallel execution engine.

Tip: Monitor query execution using the Query Profile and adjust warehouse sizing based on actual workload patterns.
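Sizing decisions can be driven by a few per-query statistics that Snowflake exposes in the Query Profile and in the `SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY` view, such as bytes spilled to local and remote storage, partitions scanned versus total, and time queued because the warehouse was overloaded. The rules and thresholds below are hypothetical rules of thumb, sketched over rows you have already fetched; treat the output as prompts for investigation, not automatic changes.

```python
def sizing_hints(q: dict, queue_ms: int = 5_000, min_partitions: int = 100,
                 scan_ratio: float = 0.8) -> list[str]:
    """Follow-up hints for one QUERY_HISTORY row; keys mirror the view's column names.
    All thresholds are hypothetical rules of thumb."""
    hints = []
    if q.get("BYTES_SPILLED_TO_REMOTE_STORAGE", 0) > 0:
        hints.append("remote spill: try a larger warehouse or process less data per query")
    elif q.get("BYTES_SPILLED_TO_LOCAL_STORAGE", 0) > 0:
        hints.append("local spill: watch this query; a larger warehouse may help")
    if q.get("QUEUED_OVERLOAD_TIME", 0) > queue_ms:  # milliseconds
        hints.append("queuing: consider multi-cluster scale-out or a separate warehouse")
    total = q.get("PARTITIONS_TOTAL", 0)
    if total >= min_partitions and q.get("PARTITIONS_SCANNED", 0) / total > scan_ratio:
        hints.append("weak pruning: filter on well-clustered columns or review clustering keys")
    return hints


row = {"BYTES_SPILLED_TO_REMOTE_STORAGE": 2_000_000_000, "QUEUED_OVERLOAD_TIME": 12_000,
       "PARTITIONS_SCANNED": 950, "PARTITIONS_TOTAL": 1_000}
for hint in sizing_hints(row):
    print(hint)
```

Spilling points toward a larger warehouse (scale up), while queuing points toward more clusters (scale out); separating the two avoids paying for a bigger warehouse when the real problem is concurrency.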

4. Change Management

A successful SQL Server to Snowflake migration also depends on how prepared your people and processes are. New data ingestion methods, warehouse usage patterns, and cost models mean teams have to adapt quickly. Without proper onboarding and documentation, old habits from on-prem SQL Server environments can lead to inefficient Snowflake usage. Partnering with Snowflake migration services can help ensure teams are properly trained, aligned with best practices, and set up for long-term success.

Tip: Develop a comprehensive change management plan that encompasses training, role mapping, and post-migration audits to ensure a seamless transition.

SQL Server to Snowflake Migration: Success Stories Across Industries

1. Real-Time Automotive Data Processing – Snowflake in Action

One of the more compelling examples of Snowflake in a modernization project comes from Aiden, a California-based startup co-founded by leaders from Volvo Cars.

Problem

Aiden needed to enable real-time vehicle communication using massive volumes of data generated by automotive IoT devices. The problem? Their systems had to handle structured and unstructured data at scale, ensure compliance, and deliver fast, actionable insights, all in real time.

Solution

To meet these demands, Folio3 implemented a cloud-first architecture that included Snowflake Data Warehouse for secure, scalable, and performance-driven data management. Snowflake’s native support for semi-structured data (like JSON), combined with automated scaling and built-in governance, made it a natural fit for the project.

Result

As a result, Aiden could securely analyze live driving data, detect anomalies, and support smarter traffic decision-making—all without worrying about infrastructure overhead.

This use case highlights the value of migrating from SQL Server to Snowflake, particularly for organizations that deal with real-time data from edge devices. Snowflake’s cloud-native model enabled Aiden to process high-velocity data, maintain compliance, and reduce operational friction—an outcome that would have been more challenging to achieve on traditional platforms like SQL Server.

2. Scaling Oil & Gas Analytics – Snowflake’s Role in Schlumberger’s Data Modernization

One of the most impactful transformations involving Snowflake emerged during the SQL Server to Snowflake migration efforts at Schlumberger (SLB), the world’s largest oilfield services company.

Problem

Schlumberger faced increasing challenges in managing and analyzing massive datasets from diverse sources like SAP, SQL Server, legacy systems, and IoT platforms. The existing infrastructure was unable to scale, resulting in inconsistent data, integration delays, and limited real-time analytics—critical shortcomings for a global energy leader.

Solution

To address these challenges, Folio3 provided end-to-end data engineering services, designing and implementing a cloud-first architecture with Snowflake as the foundation for a scalable, unified data warehouse. Snowflake’s separation of storage and compute enabled Schlumberger to ingest structured and semi-structured data at scale, while also preparing its infrastructure for AI/ML workloads through seamless integration with Azure Databricks.

Result

With Snowflake in place, Schlumberger achieved faster data access, real-time analytics, and centralized governance across its global operations. The transition from SQL Server enabled consistent data integration, high performance at scale, and a significant reduction in reporting times, from minutes to milliseconds.

This use case demonstrates how Snowflake empowers enterprise-grade analytics in data-intensive industries, such as oil and gas. For Schlumberger, moving away from SQL Server was not just an upgrade. It was a strategic shift toward a high-speed, cloud-native future powered by Snowflake.

3. Smart Pet Health at Scale – Snowflake’s Impact on Kinship’s Data Architecture

A standout example of Snowflake’s versatility comes from the platform modernization at Kinship, a Mars Petcare division that turns IoT data into actionable pet health insights.

Problem

Kinship’s Pet Insight platform captured massive volumes of activity data from IoT-enabled pet collars. However, their infrastructure struggled with historical data retrieval, duplication issues, and a lack of scalable analytics capabilities—all of which limited the ability to gain timely insights and drive future innovation.

Solution

To address this, Folio3 provided Snowflake modernization consulting services and incorporated Snowflake into Kinship’s evolving data stack, enabling fast, secure, and scalable analytics. Snowflake’s separation of compute and storage allowed Kinship to centralize clean, deduplicated data from multiple sources, including Databricks and AWS S3, without performance degradation. Snowflake’s zero-copy cloning and Time Travel features further supported faster testing, auditing, and versioning.

Result

With Snowflake, Kinship achieved near-instant access to long-term historical pet data and eliminated redundant processing. This enabled data scientists to build more accurate models while dramatically reducing time-to-insight. Snowflake’s elasticity also ensured the platform could scale effortlessly as pet data volumes grew.

This use case demonstrates how Snowflake helps pet health innovators, such as Kinship, overcome scale and complexity. By migrating away from rigid, siloed systems, they unlocked powerful, cloud-native analytics that supported better decision-making for both the business and pet care.

SQL Server to Snowflake Migration – Key Takeaways

Migrating from SQL Server to Snowflake helps organizations modernize their data stack by improving scalability, performance, and analytics flexibility. Snowflake’s cloud-native architecture, separation of compute and storage, and support for real-time and batch ingestion enable faster insights with lower operational overhead. By following the right migration strategies, tools, and best practices, businesses can reduce costs, overcome legacy limitations, and build a future-ready analytics platform.

FAQs

Why should I migrate from SQL Server to Snowflake?

Snowflake offers better scalability, performance, and cost-efficiency with its cloud-native architecture. It eliminates infrastructure management while enabling real-time analytics and secure data sharing.

Is Snowflake compatible with my existing SQL Server data?

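Largely, yes. Snowflake supports structured and semi-structured data, and most SQL Server data types have direct Snowflake equivalents. A few, such as DATETIMEOFFSET, MONEY, or SQL Server’s TIMESTAMP (ROWVERSION), need explicit conversion, either in your migration tool’s settings or in custom pipeline code.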

What’s the best way to move data from SQL Server to Snowflake?

It depends on your data volume, update frequency, and engineering capacity. Managed ETL/ELT tools such as Fivetran, Matillion, or Hevo Data minimize upkeep, while custom pipelines using Snowpipe or bulk loading through stages and COPY INTO commands give you more control.

Can SQL be used for Snowflake?

Absolutely. Snowflake supports ANSI-standard SQL, allowing users to write queries, build views, and perform analytics using familiar SQL syntax.

Conclusion

Migrating from SQL Server to Snowflake offers significant advantages in scalability, performance, and cost-efficiency, but success depends on strategic execution. From selecting the right tools to implementing effective governance and cost controls, every step plays a crucial role.

Snowflake’s modern architecture can future-proof your data stack, but a poorly planned migration can introduce more problems than solutions. That’s where expert guidance helps. Partner with Folio3’s Data Services to ensure a seamless, secure, and optimized migration tailored to your business goals. From planning to execution, we help you unlock the full potential of Snowflake.