The world of data management is evolving rapidly, and organizations increasingly seek flexible, scalable solutions to handle vast amounts of data. The shift from traditional data warehouses to modern data lakes represents a fundamental change in how businesses manage, store, and analyze their data.

Migrating from a data warehouse to a data lake offers significant opportunities for better data storage and analysis capabilities but also requires careful planning and execution. Data management has evolved from basic databases and warehouses to more flexible and scalable solutions like data lakes.

Data warehouses have long served as centralized repositories for structured data, used primarily for reporting and analytics. However, as data volume, variety, and velocity increase, warehouses need more scalability, cost efficiency, and flexibility.

On the other hand, data lakes offer a solution that can store both structured and unstructured data in its raw form. This is crucial in today’s data-centric world, where businesses must make sense of large-scale, real-time data for competitive advantage.

Why Migrate from a Data Warehouse to a Data Lake?

Migrating from a data warehouse to a data lake is becoming more common as organizations seek solutions that can handle the increasing volume, variety, and complexity of modern data.

While data warehouses are excellent for structured data, their rigid architecture makes it difficult to process semi-structured and unstructured data.

Here are some compelling reasons to consider the migration:

1. Increased Data Variety: Modern businesses collect data from multiple sources – from traditional databases to social media, IoT devices, and sensors. A data lake can store all data types, whereas a data warehouse is often limited to structured data. This is where a comprehensive data lake strategy becomes essential.

2. Scalability: As data volumes grow exponentially, data lakes allow for scaling up storage without the constraints typical of data warehouses. A well-designed data engineering service can help streamline this scalability.

3. Cost-Effectiveness: Data lakes offer more cost-effective storage, especially for unstructured or raw data that does not need immediate processing. A data warehouse strategy often involves higher costs due to its structured nature.

4. Advanced Analytics: Modern analytical tools often work better with raw or semi-processed data. A data lake can store the data in its native format, enabling advanced AI, ML, and real-time analytics.

Let’s transfer your warehouse into a flexible data lake for scalable storage and faster insights.

Benefits of Moving from a Data Warehouse to a Data Lake House

Data lake houses combine the benefits of data lakes and warehouses, offering unified storage for structured and unstructured data while supporting advanced analytics. Some of the key benefits include:

1. Unified Data Storage

A data lake house provides a single repository for storing all data types—structured, semi-structured, and unstructured. This eliminates the need for multiple data storage solutions and provides a more holistic view of data across the organization.

2. Cost Efficiency

Data lakes utilize cost-effective, scalable storage, making them more economical for storing large volumes of raw data. According to a Gartner report, businesses can reduce data storage costs by up to 70% by switching to a data lake architecture, especially for unstructured data.

3. Scalability

Data lakes offer virtually limitless storage scalability. Businesses that generate massive data volumes benefit from the ability to scale horizontally without the storage limitations often seen in traditional data warehouses.

4. Flexible Data Formats

Unlike data warehouses that require data to be structured in a predefined schema, data lakes support various formats, including JSON, XML, Parquet, and ORC. This flexibility enables organizations to store data without transformation, allowing future use in diverse analytics or machine learning models.

5. Interoperability with Modern Tools

A modern data lake is compatible with contemporary data processing frameworks like Apache Spark, Hadoop, and Presto. These tools help process data more efficiently and allow seamless integration of AI and machine learning workflows. Leveraging data integration engineering services ensures optimal setup and management of these frameworks for enhanced performance and scalability.

Data Lake Migration Services

Data Strategy and Assessment

Analyze your current data architecture, assess migration readiness, and develop a comprehensive data strategy that aligns with your business objectives.

Data Integration and Ingestion

Design and implement ETL/ELT pipelines, enable real-time and batch data ingestion, and integrate diverse data sources seamlessly into the data lake.

Migration Execution

Ensure a seamless transition with phased or full migration strategies, data validation, and testing to maintain integrity and minimize risks.

Post-Migration Support and Optimization

Optimize performance, monitor and maintain infrastructure, and plan for scalability to support future growth and big data use cases.

Data Visualization and Insights

Integrate with BI tools like Tableau or Power BI and create custom dashboards to turn your data into actionable insights.

Key Considerations Before Migration

Migrating from a data warehouse to a data lake is a significant undertaking that requires careful planning. Engaging data lake consultants can help address critical factors such as data architecture, integration, and scalability, ensuring a smooth and efficient migration process.

1. Data Architecture and Integration

A business’s data architecture forms the backbone of its migration strategy. Shifting to a data lake requires understanding how data flows through your systems, how it’s currently stored, and how it will be integrated post-migration.

Current Architecture

Before proceeding, assess your existing data warehouse architecture. What types of data are you storing, and how is it being accessed? Understanding your workflows ensures a smoother transition to a data lake’s flexible and scalable structure, which is ideal for big data implementation.

Work with Files vs. a Database

Unlike traditional databases, data lakes work predominantly with raw files, often stored in JSON, Parquet, or ORC formats. You’ll need to decide whether your organization will work directly with these files or if you’ll layer a database system over the lake for more structured queries and analytics.

Choose Your Open-Source File Format

Selecting the right file format for your data lake is critical for performance and analytics. Formats like Parquet and ORC are optimized for big data processing, enabling faster querying and analysis, especially when dealing large datasets. The right format will depend on the type of data you store and your analytical requirements.

Data Lake Architecture

A well-structured data lake architecture ensures efficient data storage, retrieval, and security. Define the structure of your lake to accommodate raw, processed, and curated data, ensuring that your architecture is scalable and flexible enough to handle future data growth and emerging technologies.

Integration Tools

Data lakes often integrate with multiple data sources, including IoT devices, databases, and external data streams. It’s essential to choose robust integration tools to handle data ingestion at scale, whether through batch processing or real-time streaming.

Tools like Apache Kafka, AWS Glue, and Azure Data Factory can help manage data ingestion and integration efficiently.

ETL/ELT Transformation

Deciding between ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) models is essential when designing your data pipeline. ELT is often more suited for data lakes, as it allows for storing raw data and transforming it as needed for analysis. Choose the approach that aligns with your business’s processing speed, complexity, and cost needs.

2. Migration Strategy

An effective migration strategy is critical for a seamless transition from a data warehouse to a data lake. It should be designed to minimize risk, reduce downtime, and avoid data loss.

Phased Migration vs. Full Migration

Organizations must choose between a phased or full migration strategy. A phased approach allows for incremental data migration, testing, and adjustments. In contrast, a full migration involves moving all data and workloads simultaneously.

Phased migrations offer less risk, while full migrations provide faster overall implementation.

Hybrid Architecture

In some cases, a hybrid approach can be adopted, where the data warehouse and data lake coexist. This allows for leveraging a data warehouse’s structured reporting capabilities alongside a data lake’s flexibility and scalability. A hybrid architecture is beneficial when immediate migration is not feasible for all data types or workloads.

Stop Copying Data Everywhere

One key advantage of a data lake is eliminating redundant data copying. A well-architected data lake centralizes data storage, allowing multiple teams to access the same data without creating duplicate copies, reducing storage costs and ensuring data consistency.

Fallback and Rollback Plan

No migration strategy is complete without a fallback or rollback plan. In case of unforeseen challenges or failures during the migration process, having a clear plan to revert to the previous system ensures minimal disruptions to business operations.

Be prepared to temporarily roll back or switch to a hybrid model to avoid data loss and maintain continuity.

3. Data Volume and Type

One of the main reasons organizations migrate to data lakes is the flexibility to handle different data types at scale. Unlike traditional data warehouses that primarily store structured data, data lakes are designed to store structured, semi-structured, and unstructured data in its raw form. Here’s what to expect when it comes to data volume and types:

Expect Structured, Semi-Structured, and Unstructured Data

1. Structured Data: This is organized, clearly defined data that fits into traditional rows and columns, like relational databases. Examples include sales records, customer details, and inventory databases.

2. Semi-Structured Data: This data doesn’t fit neatly into relational tables but still has some organizational properties. Examples include JSON files, XML files, and CSVs. These are increasingly common in modern business environments, and data lakes are particularly adept at handling them.

3. Unstructured Data: Unstructured data includes everything that doesn’t have a predefined structure, such as images, video, audio, social media posts, or IoT sensor data. In the age of big data, organizations are seeing a massive influx of unstructured data that needs to be stored and analyzed effectively.

A key advantage of data lakes is their ability to store massive amounts of these data types without the rigid schema that a data warehouse requires. This flexibility allows organizations to perform advanced analytics, data mining, and AI/ML workloads, helping to unlock new insights that were previously inaccessible.

Data lakes provide an ideal solution for effortlessly scaling as data volumes grow. According to a report by IDC, global data is expected to grow to 175 zettabytes by 2025, making data lakes critical for businesses handling large and diverse datasets.

4. Cost and Budget Considerations

Migrating from a data warehouse to a data lake involves not just technical considerations but also financial ones. Understanding the costs associated with migration, storage, and infrastructure is critical for a successful transition. Below are key budget considerations:

Decouple Storage and Compute

One of the primary benefits of a data lake is the ability to decouple storage from computers. In a traditional data warehouse, storage and compute resources are tightly linked, meaning you must scale simultaneously, even if only one needs expansion.

In a data lake environment, storage and computing are independent.

This means you can store vast amounts of data at a lower cost while only scaling compute resources when you need to run complex analytics or processing tasks. This flexibility allows organizations to optimize their budget by controlling costs more effectively.

For example, cloud platforms like AWS S3 (for storage) and AWS EMR (for computing) offer this separation, giving businesses more cost-effective options.

Migration Costs

Migrating to a data lake involves upfront costs that must be considered in the budgeting phase. These include:

1. Data Transfer Costs: Moving large volumes of data from on-premise or cloud-based data warehouses to a data lake can incur significant transfer costs, especially in cloud environments where egress charges apply.

2. ETL/ELT Development Costs: You may need to reconfigure your existing ETL/ELT pipelines to suit the new data lake environment. This could involve hiring skilled developers, purchasing third-party integration tools, or investing in training for your existing team.

3. Downtime and Risk Mitigation: Depending on your migration strategy (phased or full), downtime or risk mitigation costs may be involved. Planning for potential delays, fallback strategies, and testing phases is essential to avoid unexpected budget overruns.

Infrastructure Costs

The infrastructure to support a data lake may differ significantly from that of a data warehouse. Here are some key areas to focus on:

1. Storage Costs: Data lakes store raw data in their native format, requiring massive storage. Fortunately, cloud-based data lakes provide cost-effective solutions like AWS S3 or Azure Data Lake, where storage costs are relatively low compared to on-premise data warehouses. However, organizations need to budget for the volume of data they expect to accumulate.

2. Compute Resources: Although storage can be cost-efficient, analytics and compute resources can add up. Running machine learning models or performing large-scale analytics on unstructured data requires robust computational power, in-house or via cloud-based services like Amazon EMR, Azure HDInsight, or Google Cloud Dataproc.

3. Maintenance and Monitoring: Ongoing operational costs should be factored into the budget, including monitoring and maintaining the data lake infrastructure. Cloud services offer various pricing models (pay-as-you-go, reserved instances) to control these costs, but businesses need to forecast and plan for ongoing management.

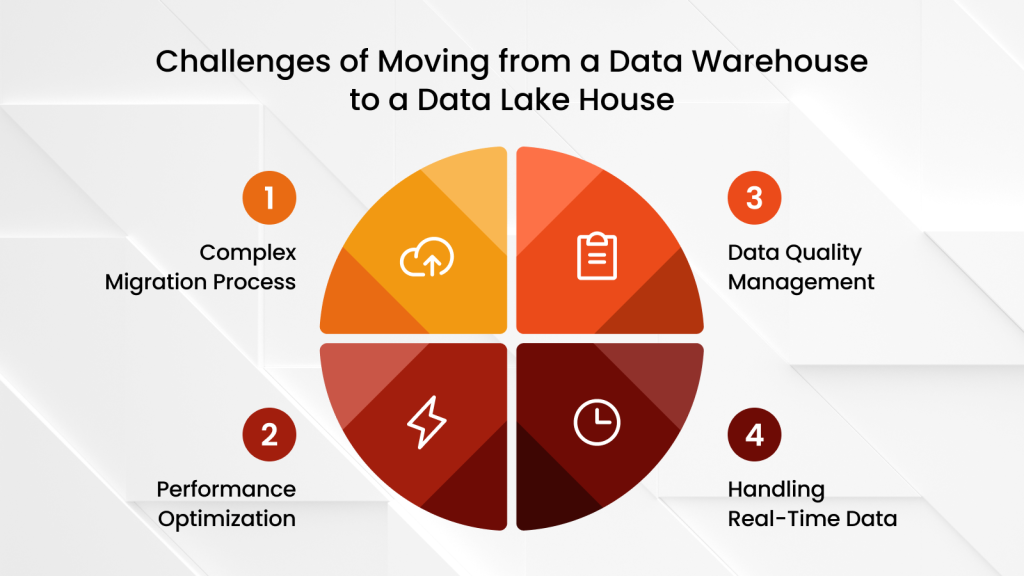

Challenges of Moving from a Data Warehouse to a Data Lake House

Despite the benefits, migrating to a data lake is challenging. Here are a few obstacles businesses might face:

Complex Migration Process

Migrating from a structured data warehouse to a flexible data lake can be complex. Data governance, security, and quality control are more complicated to manage in a more open environment.

Performance Optimization

With proper optimization strategies, ensuring optimal performance in a data lake, especially when handling large-scale queries, can be easy.

Data Quality Management

A significant challenge is maintaining data quality in a data lake, where raw and unstructured data can quickly become disorganized and difficult to manage.

Handling Real-Time Data

Migrating real-time data workloads can be tricky. A data lake must effectively handle streaming data sources, or organizations risk losing critical insights from real-time data analytics.

Tools and Technologies for Data Lake Migration

| Category | Purpose | Examples |

|---|---|---|

| ETL and Data Integration | Extract, transform, and load data into the Data Lake. | – Talend: Scalable ETL solutions with cloud integration. – Informatica: Robust data management. – Apache Nifi: Real-time data flow automation. |

| Cloud Storage Solutions | Scalable and cost-effective storage for Data Lakes. | – AWS Lake Formation: Simplifies setup and management of AWS Data Lakes. – Azure Data Lake Storage: High-performance big data storage. – Google Cloud Storage: Integrated with analytics and ML tools. |

| Big Data Frameworks | Handle large-scale data processing and analytics. | – Apache Hadoop: Distributed storage and processing. – Apache Spark: Real-time processing and ML support. |

| Data Governance and Security | Manage security, compliance, and data lineage. | – Apache Atlas: Metadata management and lineage tracking. – AWS IAM: User access management for AWS Data Lakes. – Azure Purview: Unified data governance. |

| Data Cataloging and Discovery | Organize and discover data efficiently. | – Collibra: Automated metadata management. – Alation: Collaborative data discovery and governance. |

| Workflow Automation | Automate migration and data pipeline workflows. | – Apache Airflow: Schedule and monitor pipelines. – Luigi: Build and manage complex workflows. |

| Monitoring and Observability | Track migration performance and maintain reliability. | – Datadog: Cloud environment monitoring. – Prometheus: Open-source service monitoring. |

| Testing and Validation | Ensure data integrity and accuracy post-migration. | – QuerySurge: Automated data testing. – Great Expectations: Open-source validation tests. |

Key Takeaways: Data Warehouse to Data Lake Migration

Migrating from a data warehouse to a data lakehouse enables organizations to handle larger volumes of structured, semi-structured, and unstructured data with greater scalability, flexibility, and cost efficiency. This guide highlights the benefits of migration, key considerations like architecture, data integration, and budget planning, as well as challenges such as data quality management and real-time processing. It also covers essential tools and technologies to ensure a smooth transition, helping businesses modernize their data strategy, optimize analytics, and gain actionable insights for competitive advantage.

FAQs

What steps are involved in the migration process?

The migration process generally involves:

- Assessing the existing Data Warehouse environment.

- Defining objectives and success metrics.

- Extracting and transforming data into a compatible format for the Data Lake.

- Loading data into the Data Lake.

- Validating the migration to ensure data integrity and usability.

Can I integrate a Data Lake with my existing Data Warehouse?

Yes, integration is possible and often recommended. A hybrid model allows organizations to:

- Leverage the strengths of both systems.

- Use the Data Lake for raw data ingestion and the Data Warehouse for refined analytics.

- Seamlessly transition analytics and reporting capabilities during migration.

Final Words – Choosing the Right Migration Strategy

Migrating from a data warehouse to a data lake offers flexibility, scalability, and cost advantages. However, it requires careful planning, a solid understanding of the organization’s data needs, and the proper infrastructure.

Considering the factors mentioned above and preparing for potential challenges, organizations can achieve a successful migration.

When looking for expert guidance and robust migration solutions, Folio3 Data Solutions offer comprehensive services tailored to your business needs. Our specialist team can guide you through each stage of the migration process, ensuring that your data is securely and efficiently transitioned to a data lake architecture.