Global data creation is growing at an astonishing pace. By 2025, the world is expected to generate more than 80 zettabytes of data, according to IDC’s latest forecast. While this explosion of information presents immense opportunities, it also brings significant challenges: data is often fragmented, inconsistent, and difficult to translate into meaningful action. For businesses trying to make sense of it all, ad-hoc analysis no longer cuts it.

That’s where a data analytics framework comes into play. Think of it as a structured, repeatable system that guides how data is collected, processed, interpreted, and acted upon. Whether you’re building a data analytics governance framework or aligning your data and analytics strategy framework with business goals, having a clear approach is essential to avoid wasted resources and flawed insights.

There’s no one-size-fits-all model. Some frameworks prioritize compliance and governance, while others focus on capability building or business value. Understanding the key components, steps, and strategy behind these frameworks helps organizations work smarter, not harder.

What is a Data Analytics Framework?

A data analytics framework is a structured approach that outlines how an organization collects, manages, analyzes, and applies data to make informed decisions. Instead of approaching analytics as a series of one-off reports or isolated tools, a framework provides a consistent method for turning raw data into usable insights.

At its core, this framework acts as a blueprint defining the steps, tools, and governance structures needed to handle data effectively. It typically includes components like data sources, integration methods, analytical models, visualization practices, and decision-making protocols.

There are various types of frameworks depending on your focus. A data analytics strategy framework, for example, aligns analytics efforts with business goals, while a data analytics governance framework ensures proper access control, data privacy, and regulatory compliance. Meanwhile, a data analytics capability framework assesses the organization’s readiness in terms of skills, infrastructure, and processes.

A well-defined framework not only improves consistency and quality across data projects, it also makes scaling and long-term planning more achievable. Whether you’re in finance, healthcare, or agriculture, adopting a tailored analytics framework helps drive clarity and accountability in data-driven initiatives.

5 Types of Data Analytics Frameworks

Different problems require different kinds of insight, and that’s why not all data analytics frameworks serve the same purpose. Below are five key types, each helping organizations answer different questions from “what happened?” to “what should we do next?”

1. Descriptive Analytics Framework

This framework focuses on summarizing historical data to understand what has already happened. It’s often the starting point in any analytics journey. Tools like dashboards, data visualizations, and KPI reports fall into this category. For example, a retail business might use this to track monthly sales trends or customer footfall.
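
As a rough illustration of the retail example, the sketch below totals sales by month and computes the month-over-month change. The `SALES` records and both function names are hypothetical, invented purely for this example.

```python
from collections import defaultdict

# Hypothetical transactions: (ISO date, sale amount). Illustrative data only.
SALES = [
    ("2024-01-05", 120.0),
    ("2024-01-20", 80.0),
    ("2024-02-03", 200.0),
    ("2024-02-17", 50.0),
    ("2024-03-09", 300.0),
]

def monthly_totals(rows):
    """Sum sale amounts per calendar month, keyed by 'YYYY-MM'."""
    totals = defaultdict(float)
    for date, amount in rows:
        totals[date[:7]] += amount
    return dict(sorted(totals.items()))

def month_over_month_change(totals):
    """Percentage change of each month relative to the previous month."""
    months = list(totals)
    return {
        cur: round((totals[cur] - totals[prev]) / totals[prev] * 100, 1)
        for prev, cur in zip(months, months[1:])
    }

totals = monthly_totals(SALES)
changes = month_over_month_change(totals)
print(totals)
print(changes)
```

A dashboard or KPI report is essentially a visual layer over aggregates like these.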

2. Diagnostic Analytics Framework

Once you know what happened, the next step is to ask why. This type digs deeper using techniques like root cause analysis, correlation analysis, or drill-down reporting. It’s helpful in identifying the drivers behind performance changes, such as declining engagement or revenue.
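
One simple way to start a diagnostic pass is to rank candidate drivers by how strongly they correlate with the metric that changed. The sketch below does this with a hand-written Pearson correlation; the metric names and values are made up for illustration.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical weekly metrics; the values are invented for this example.
engagement = [50, 48, 45, 40, 36, 30]
candidate_drivers = {
    "page_load_seconds": [1.0, 1.2, 1.5, 2.0, 2.4, 3.0],
    "emails_sent": [10, 12, 9, 11, 10, 12],
}

def rank_drivers(target, drivers):
    """Rank candidate drivers by absolute correlation with the target."""
    scored = {name: pearson(values, target) for name, values in drivers.items()}
    return sorted(scored.items(), key=lambda kv: abs(kv[1]), reverse=True)

ranking = rank_drivers(engagement, candidate_drivers)
for name, r in ranking:
    print(f"{name}: r = {r:.2f}")
```

A strong correlation only points to where to drill down next; it does not by itself establish the root cause.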

3. Predictive Analytics Framework

Built on statistical models and machine learning algorithms, this framework forecasts future outcomes based on historical patterns. In healthcare, big data predictive analytics may help flag patients at high risk of readmission by analyzing vast datasets from electronic health records. It requires clean, well-structured data and a strong understanding of the context behind the numbers.
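
To make the idea of forecasting from historical patterns concrete, here is a minimal sketch that fits a straight-line trend with ordinary least squares and projects it one period ahead. It is a deliberately simple stand-in for the statistical and machine learning models mentioned above, and the demand figures are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    return slope, mean_y - slope * mean_x

# Hypothetical monthly demand for six months (illustrative values).
months = [1, 2, 3, 4, 5, 6]
demand = [100, 108, 121, 129, 142, 150]

slope, intercept = fit_line(months, demand)
forecast_month_7 = slope * 7 + intercept
print(f"trend: +{slope:.2f} units/month, month 7 forecast: {forecast_month_7:.1f}")
```

Real predictive work, such as readmission risk scoring, would use richer features and a validated model, but the fit-then-project pattern is the same.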

4. Prescriptive Analytics Framework

Going a step beyond prediction, this framework suggests possible actions or decisions based on the data. It’s often used in supply chain optimization or dynamic pricing strategies. Prescriptive frameworks are more complex, involving simulations, decision trees, and sometimes real-time data inputs.
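
A prescriptive step can be sketched as "simulate each option, then recommend the best one." The example below does this for a pricing decision. The linear demand curve, its parameters, and the candidate prices are all assumptions made for illustration, not a real pricing model.

```python
def expected_demand(price, base_demand=1000.0, sensitivity=40.0):
    """Hypothetical linear demand curve: units sold fall as price rises."""
    return max(base_demand - sensitivity * price, 0.0)

def recommend_price(candidate_prices, unit_cost):
    """Return the candidate price with the highest expected profit."""
    def profit(price):
        return (price - unit_cost) * expected_demand(price)

    best = max(candidate_prices, key=profit)
    return best, profit(best)

candidates = [10, 12, 14, 16, 18, 20]
best_price, best_profit = recommend_price(candidates, unit_cost=6.0)
print(f"recommended price: {best_price}, expected profit: {best_profit}")
```

In practice the demand function would itself come from a predictive model, which is why prescriptive frameworks usually sit on top of predictive ones.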

5. Cognitive Analytics Framework

Inspired by human thought processes, this emerging framework integrates AI, natural language processing, and machine learning to interpret unstructured data, such as emails, medical notes, or social media content. Think virtual assistants or AI-based diagnostic tools in healthcare. As healthcare data analytics services continue to evolve, cognitive frameworks offer significant promise for extracting insights from the vast volumes of qualitative and unstructured clinical data.

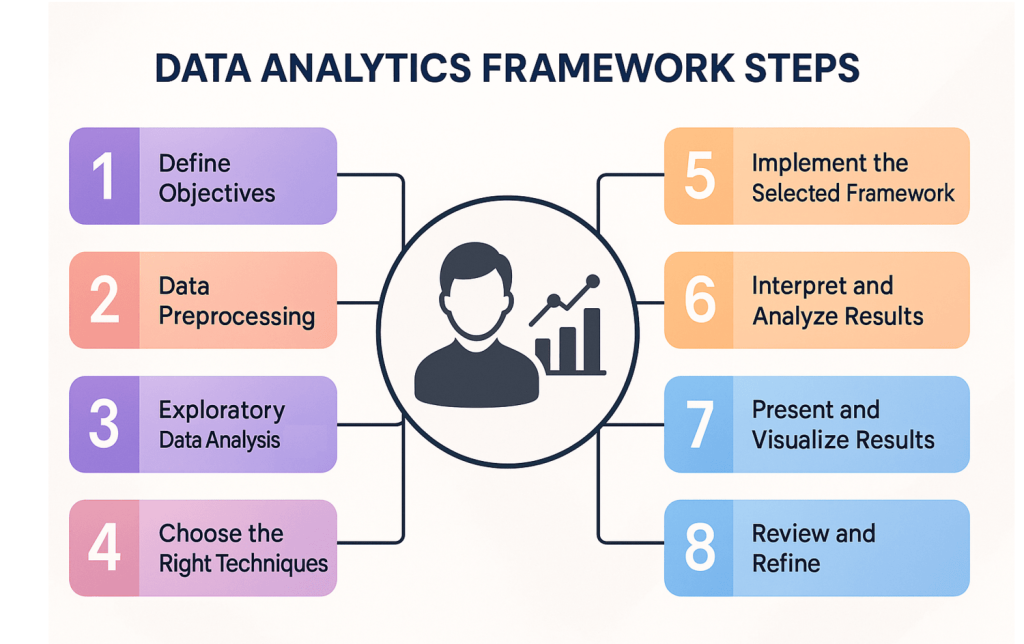

Steps in the Data Analytics Framework

A data analytics framework is only as effective as the process driving it. While tools and methods may vary across industries, the most impactful data analytics solutions follow a consistent set of foundational steps to ensure accuracy, clarity, and real business value.

1. Define Objectives

Before diving into data, it’s essential to clarify the business questions you want to answer. Whether it’s improving customer retention, forecasting demand, or identifying operational bottlenecks, clear goals will shape every step that follows.

2. Data Preprocessing

Raw data is rarely usable in its original form. This stage involves cleaning the data, removing duplicates, handling missing values, and ensuring that datasets from different sources are compatible. A strong data analytics governance framework can help maintain data integrity and compliance at this stage.
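
The cleaning tasks described here can be sketched in a few lines. The example below removes duplicate customers, normalizes a text field, and fills a missing value with the median. The `RAW` records and field names are hypothetical, and median imputation is just one possible choice.

```python
from statistics import median

# Hypothetical raw records merged from two sources (illustrative only).
RAW = [
    {"customer_id": "C1", "country": " us ", "spend": "120.5"},
    {"customer_id": "C2", "country": "US", "spend": ""},
    {"customer_id": "C1", "country": " us ", "spend": "120.5"},  # duplicate
    {"customer_id": "C3", "country": "de", "spend": "80"},
]

def preprocess(records):
    """Deduplicate by customer, normalize country codes, impute missing spend."""
    seen, cleaned = set(), []
    for rec in records:
        if rec["customer_id"] in seen:
            continue  # keep only the first occurrence of each customer
        seen.add(rec["customer_id"])
        cleaned.append({
            "customer_id": rec["customer_id"],
            "country": rec["country"].strip().upper(),
            "spend": float(rec["spend"]) if rec["spend"] else None,
        })
    # Fill missing spend values with the median of the known values.
    fill = median(r["spend"] for r in cleaned if r["spend"] is not None)
    for rec in cleaned:
        if rec["spend"] is None:
            rec["spend"] = fill
    return cleaned

clean = preprocess(RAW)
for row in clean:
    print(row)
```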

3. Exploratory Data Analysis (EDA)

EDA helps uncover patterns, spot anomalies, and test assumptions. Using summary statistics and visual tools, analysts get a feel for the data, often revealing insights or issues that weren’t obvious before.
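
A first EDA pass often amounts to summary statistics plus a quick outlier check. The sketch below uses Python's standard `statistics` module and the common 1.5 × IQR rule; the order values are invented for illustration.

```python
from statistics import mean, median, quantiles, stdev

# Hypothetical order values; one entry is suspiciously large.
order_values = [20, 22, 19, 24, 21, 23, 20, 95, 22, 21]

def summarize(values):
    """Return basic summary statistics and values outside 1.5 * IQR."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "mean": mean(values),
        "median": median(values),
        "stdev": stdev(values),
        "outliers": [v for v in values if v < low or v > high],
    }

summary = summarize(order_values)
print(summary)
```

The gap between the mean and the median is itself a useful signal here: a single extreme value pulls the mean well above what a typical order looks like.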

4. Choose the Right Techniques

Different problems require different analytical approaches. For example, trend analysis and clustering might be suitable for marketing data, while predictive analytics techniques such as regression models and classification algorithms work well for forecasting future outcomes. Your chosen data analytics strategy framework should guide this selection based on your business goals.

5. Implement the Selected Framework

With techniques selected, you now build out the analysis within your chosen framework—applying models, running calculations, and transforming raw data into structured insight.

6. Interpret and Analyze Results

The numbers alone don’t mean much without context. At this stage, it’s important to align results with your original objectives and understand the “so what?” behind the findings. This is often where domain expertise becomes invaluable.

7. Present and Visualize Results

Clear communication is key. Charts, dashboards, and reports should tell a compelling story without overwhelming your audience. This step bridges the gap between data teams and decision-makers.

8. Review and Refine

Analytics is rarely a one-and-done task. Whether you’re working within a data analytics capability framework or a more technical model, regularly reviewing and refining the process ensures your framework stays relevant and reliable as business needs evolve.

Best Practices for Using Data Analytics Frameworks

Implementing a data analytics framework isn’t just about following steps, but about making thoughtful decisions at every stage. Here are some practical, tried-and-tested best practices to help your analytics efforts deliver real business value and highlight the benefits of data analytics.

1. Establish Well-Defined Objectives

Don’t begin without a clear understanding of what you want to achieve. Vague goals often lead to confusing outcomes. Whether you’re aiming to reduce churn, optimize logistics, or improve forecasting accuracy, precise objectives guide the entire process and shape your data analytics strategy framework.

2. Pick the Best-Fit Framework

There’s no one-size-fits-all. A data analytics governance framework might be ideal for compliance-heavy industries, while a predictive model suits demand forecasting. Match the framework type to the business problem and your organization’s maturity with data.

3. Gather Meaningful and Trusted Data

Garbage in, garbage out. Ensure that the data sources feeding into your framework are timely, relevant, and validated. This improves accuracy and supports stronger insights across your data analytics framework components.

4. Validate, Learn, and Adjust

Even the best frameworks need calibration. Test your models against real outcomes and adjust based on what you learn. Validation helps avoid costly assumptions and ensures your data analytics capability framework continues to evolve with your business needs.
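
Testing a model against real outcomes can be as simple as holding back the most recent data and comparing forecast error against a naive baseline. The sketch below assumes a hypothetical demand history and a very simple "average step" model; both are illustrative only.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

# Hypothetical history; the last two points are held out as "real outcomes".
history = [100, 104, 109, 113, 118, 122, 127, 131]
train, holdout = history[:6], history[6:]

# Naive baseline: assume the last observed value simply repeats.
baseline_pred = [train[-1]] * len(holdout)

# Candidate model: extend the average step seen in the training data.
avg_step = (train[-1] - train[0]) / (len(train) - 1)
model_pred = [train[-1] + avg_step * (i + 1) for i in range(len(holdout))]

baseline_mae = mean_absolute_error(holdout, baseline_pred)
model_mae = mean_absolute_error(holdout, model_pred)
print(f"baseline MAE: {baseline_mae:.2f}, model MAE: {model_mae:.2f}")
```

If a model cannot beat a baseline this simple on held-out data, that is usually a sign to revisit the features or the technique before relying on its output.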

5. Integrate Feedback for Ongoing Improvement

Analytics should never operate in a vacuum. Regularly collect feedback from end users, whether analysts, marketers, or executives, to refine data access, improve visualizations, or remove bottlenecks.

6. Keep Up with the Latest

The landscape of data analytics and digital transformation is constantly evolving. Stay informed about emerging techniques, tools, and privacy regulations to ensure your analytics framework remains scalable, secure, and future-ready.

Popular Data Analytics Frameworks

Choosing the right data analytics framework depends on your goals, data environment, and the skills of your team. Each of these tools and frameworks plays a specific role within the broader data analytics framework ecosystem. Depending on your objectives, from strategy development to implementation, you may use one or a combination of these tools.

Many businesses also turn to data strategy consulting services to help evaluate which frameworks align best with their operational needs and long-term goals.

Below are some of the most widely used and respected frameworks and tools in data analytics, each with its own purpose, strengths, and use cases:

1. CRISP-DM (Cross-Industry Standard Process for Data Mining)

CRISP-DM is a process model that breaks down data projects into six well-defined phases: business understanding, data understanding, data preparation, modeling, evaluation, and deployment. It’s widely adopted across industries because of its structured yet flexible approach. It is ideal for teams looking to align analytics efforts with real-world business needs, including areas like customer experience analytics, where understanding user behavior and optimizing touchpoints is essential.

Best For: Traditional analytics projects that require a clear, repeatable structure.

2. Hadoop

Apache Hadoop is not a process model, but a big data framework designed to store and process massive datasets across distributed systems. Its ecosystem includes tools like HDFS (for storage) and MapReduce (for processing). Hadoop is a go-to option when you’re working with large-scale, unstructured data.

Best For: Large-scale data environments with a need for distributed storage and processing.
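
The MapReduce model itself is easy to illustrate without a cluster. The sketch below simulates the map, shuffle, and reduce phases in plain Python for a word count. It does not use Hadoop's actual APIs; it only mirrors the programming model on a single machine.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in a line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)

def word_count(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())

counts = word_count(["big data big insight", "data drives insight"])
print(counts)
```

On a real cluster, the map and reduce functions run in parallel on different nodes, and the shuffle step moves data between them over the network.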

3. SEMMA (Sample, Explore, Modify, Model, Assess)

Developed by SAS, SEMMA focuses heavily on the technical steps of the data mining process. While similar to CRISP-DM, it’s more model-centric, making it ideal for use within statistical and predictive modeling environments.

Best For: Teams with a strong modeling background, particularly in structured environments.

4. Pandas

Pandas is an open-source Python library for data manipulation and analysis. It provides intuitive data structures, DataFrames and Series, that make data cleaning, transformation, and analysis simple and efficient. It’s a core component of many modern analytics stacks and often plays a key role in shaping a company’s customer data strategy, especially when analyzing structured behavioral or transactional datasets.

Best For: Quick prototyping, data cleaning, and working with structured tabular data in Python.
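
A short sketch of a typical Pandas workflow is shown below: build a DataFrame, fill a missing value, and aggregate per customer. It assumes `pandas` is installed, and the `orders` data is hypothetical.

```python
import pandas as pd

# Hypothetical order data with one missing amount (illustrative only).
orders = pd.DataFrame({
    "customer": ["ann", "bob", "ann", "cara", "bob"],
    "amount": [30.0, None, 45.0, 20.0, 25.0],
})

# Fill the missing amount with the median of the known amounts.
orders["amount"] = orders["amount"].fillna(orders["amount"].median())

# Total spend and order count per customer, highest spenders first.
per_customer = (
    orders.groupby("customer")["amount"]
    .agg(total="sum", orders="count")
    .sort_values("total", ascending=False)
)
print(per_customer)
```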

5. TDSP (Team Data Science Process)

Microsoft’s TDSP is a modern lifecycle approach for collaborative data science projects. Its lifecycle covers business understanding, data acquisition and understanding, modeling, deployment, and customer acceptance. TDSP is especially valuable for enterprise teams that need consistent practices and a data analytics governance framework.

Best For: Cross-functional enterprise teams working on complex machine learning or AI projects.

6. Scikit-learn

Scikit-learn is a powerful Python library that offers a wide range of machine learning algorithms for classification, regression, clustering, and more. It’s user-friendly and integrates well with other tools like Pandas and NumPy, making it essential in many data analytics capability frameworks.

Best For: Beginners and professionals building machine learning models in Python.

7. Apache Spark

Like Hadoop, Spark is a distributed data processing framework, but it keeps intermediate data in memory where possible, which gives it significant speed advantages, especially for iterative algorithms and streaming data. Spark’s built-in libraries (like Spark SQL, MLlib, and GraphX) make it suitable for a wide range of data analytics use cases, from real-time analytics to advanced machine learning workloads.

Best For: Real-time analytics, machine learning at scale, and iterative data processing.

8. SciPy

SciPy is an open-source Python library for scientific and technical computing, built on NumPy. While it’s broader than just data analytics, its robust set of statistical and mathematical tools supports more advanced analysis tasks when precision is key.

Best For: Statistical modeling, signal processing, and data-heavy scientific research.

Future Trends of Data Analytics Frameworks

The role of data analytics frameworks is expanding well beyond structured dashboards and static reports. Organizations now demand context-aware, real-time decision support systems that bridge analytics with strategy. As data analytics trends evolve, next-generation frameworks are being shaped to meet these expectations.

1. Automation Accelerates Data Processes

Manual ETL pipelines are being replaced by orchestration tools like Apache Airflow and Prefect, which schedule and automate each stage from ingestion to reporting. For example, companies like Netflix use automated data workflows to personalize user experience in real time. Frameworks are embedding such capabilities to minimize human intervention and scale insights faster, with some studies reporting reductions in time-to-insight of up to 80%.
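
The core idea behind orchestration tools is running tasks in dependency order. The sketch below shows that idea using Python's standard `graphlib` module. It is not the Airflow or Prefect API, and the three task functions are hypothetical placeholders.

```python
from graphlib import TopologicalSorter

def run_pipeline(tasks, dependencies):
    """Run task callables in dependency order, sharing a context dict."""
    order = list(TopologicalSorter(dependencies).static_order())
    context = {}
    for name in order:
        tasks[name](context)
    return order, context

# Hypothetical pipeline stages; each reads and writes the shared context.
def ingest(ctx):
    ctx["raw"] = [3, 1, 2]

def transform(ctx):
    ctx["clean"] = sorted(ctx["raw"])

def report(ctx):
    ctx["report"] = f"max={ctx['clean'][-1]}"

TASKS = {"ingest": ingest, "transform": transform, "report": report}
# Each key lists the tasks that must finish before it can start.
DEPENDS_ON = {"transform": {"ingest"}, "report": {"transform"}}

order, context = run_pipeline(TASKS, DEPENDS_ON)
print(order, context["report"])
```

Production orchestrators add scheduling, retries, and monitoring on top of this dependency graph, which is where most of the time savings come from.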

2. Real-Time Analytics for Instant Insights

Streaming technologies like Apache Kafka (event streaming) and Apache Flink (stream processing) now support analytics pipelines that process data as it arrives. In healthcare, systems analyze EHR data in real time to detect anomalies such as potential strokes or sepsis onset, sometimes within seconds.

The benefits of real-time analytics include faster decision-making, early risk detection, and enhanced operational efficiency. Frameworks are adapting to handle time-series data, event streams, and alerts with low-latency response times.
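
As a small-scale illustration of low-latency alerting on an event stream, the sketch below flags readings that deviate sharply from a rolling window of recent values. The window size, threshold, and heart-rate readings are assumptions for this example. A production system would consume from a streaming platform, not a Python list.

```python
from collections import deque
from statistics import mean, stdev

def detect_anomalies(stream, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent rolling mean."""
    recent = deque(maxlen=window)
    for i, value in enumerate(stream):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield i, value
                continue  # keep the anomaly out of the baseline window
        recent.append(value)

# Hypothetical heart-rate readings arriving one at a time.
heart_rate = [72, 74, 73, 75, 74, 73, 140, 74, 72]
alerts = list(detect_anomalies(heart_rate))
print(alerts)
```

Because the function is a generator, each reading is evaluated as soon as it arrives; nothing waits for a batch to complete.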

3. Advanced Analytics Techniques in Frameworks

Tools such as Scikit-learn, TensorFlow, and XGBoost are being integrated directly into frameworks like TDSP (Team Data Science Process). These allow for embedded machine learning models and algorithm experimentation directly within analytics pipelines. Instead of just tracking what happened, teams now build classifiers and predictive models to forecast sales, customer churn, and system failures—driving both data analytics and optimization in a unified workflow.

4. Smart Dashboards for Actionable Storytelling

Beyond standard visuals, platforms like Tableau Pulse and Power BI Copilot are integrating AI summaries and anomaly explanations. These dashboards adapt to user roles and display only the most relevant KPIs, reducing noise. For instance, operations teams at Walmart use intelligent dashboards that auto-highlight supply chain bottlenecks with prescriptive suggestions, a practice in line with the rapid growth in analytics adoption reported in recent market statistics.

5. Democratized Access to Analytics

Frameworks now support low-code/no-code integrations, enabling non-technical staff to generate insights through guided workflows. Tools like Google’s Looker Studio and Microsoft’s Power Apps allow business teams to contribute to data analysis without needing to write SQL or Python.

This shift moves analytics from being a centralized function to an organization-wide capability—particularly valuable in B2B analytics, where various departments require access to customized insights for clients, partners, and internal performance tracking.

6. Hybrid Cloud Enables Scalable, Flexible Analytics

Platforms like Databricks and Snowflake support multi-cloud and hybrid environments, essential for organizations with complex data sovereignty and compliance needs. For instance, a pharmaceutical firm might analyze sensitive trial data on-premises while using public cloud for less sensitive marketing insights, all within the same pipeline.

7. Built-In Governance and Compliance Tools

As frameworks become more accessible, governance tools like Apache Ranger and AWS Lake Formation help control access, enforce policies, and audit data usage. These are crucial for complying with regulations like GDPR and HIPAA. Expect future frameworks to offer native support for data lineage, consent tracking, and auto-classification of sensitive fields.
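
The access control and auditing described here can be sketched as a role-based policy that masks fields and records every read. The `POLICY` mapping, role names, and record fields below are hypothetical. This is not how Apache Ranger or AWS Lake Formation are configured; it only illustrates the underlying idea.

```python
# Hypothetical policy: which fields each role is allowed to see.
POLICY = {
    "analyst": {"patient_id", "age", "diagnosis"},
    "marketing": {"age"},
}

def read_record(record, role, audit_log):
    """Return a copy of the record with disallowed fields masked, and log the access."""
    allowed = POLICY.get(role, set())
    audit_log.append((role, sorted(set(record) & allowed)))
    return {key: (value if key in allowed else "***") for key, value in record.items()}

audit = []
record = {"patient_id": "P-17", "age": 54, "diagnosis": "J45"}
marketing_view = read_record(record, "marketing", audit)
unknown_view = read_record(record, "intern", audit)
print(marketing_view)
print(audit)
```

Unknown roles fall back to an empty permission set, so the default is to mask everything and grant access only through an explicit policy entry.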

8. Integration with Diverse and Complex Sources

Frameworks now need to integrate with IoT devices, REST APIs, NoSQL databases, and edge devices. For example, agricultural analytics platforms pull weather, soil sensor, and satellite data together to optimize irrigation cycles. In many cases, businesses turn to data integration consulting services to architect solutions that handle this growing complexity. Future frameworks will natively support data fusion across varied formats such as JSON and Parquet, as well as time-series data, enabling deeper insights without preprocessing bottlenecks.
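
To illustrate combining sources in different formats, the sketch below merges JSON sensor readings with a CSV weather forecast and applies a simple irrigation rule. The field names, thresholds, and data are all assumptions made for this example.

```python
import csv
import io
import json

# Hypothetical inputs: soil sensors report JSON, the weather feed is CSV.
SENSOR_JSON = '[{"field": "A", "soil_moisture": 0.18}, {"field": "B", "soil_moisture": 0.35}]'
WEATHER_CSV = "field,rain_mm_forecast\nA,0.0\nB,12.5\n"

def load_sources(sensor_json, weather_csv):
    """Merge both sources into one record per field, keyed by field name."""
    merged = {row["field"]: dict(row) for row in json.loads(sensor_json)}
    for row in csv.DictReader(io.StringIO(weather_csv)):
        record = merged.setdefault(row["field"], {"field": row["field"]})
        record["rain_mm_forecast"] = float(row["rain_mm_forecast"])
    return merged

def needs_irrigation(record, moisture_floor=0.25, rain_cutoff=5.0):
    """Irrigate only if the soil is dry and little rain is expected."""
    return (
        record.get("soil_moisture", 1.0) < moisture_floor
        and record.get("rain_mm_forecast", 0.0) < rain_cutoff
    )

fields = load_sources(SENSOR_JSON, WEATHER_CSV)
to_irrigate = [name for name, rec in fields.items() if needs_irrigation(rec)]
print(to_irrigate)
```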

FAQs

What are the key components of a data analytics framework?

A data analytics framework typically includes objective setting, data collection, preprocessing, analytical techniques, interpretation, and result visualization.

Why is a data analytics framework essential?

It provides a structured approach to turning raw data into actionable insights, reducing complexity and ensuring consistent, reliable outcomes.

How does a data analytics framework benefit businesses?

It helps businesses make informed decisions faster, spot trends, reduce inefficiencies, and align analytics with overall strategy.

Can small businesses use a data analytics framework?

Absolutely. With scalable tools and cloud-based platforms, even small businesses can implement frameworks to improve performance and customer insights.

Conclusion

As data continues to grow in scale and complexity, adopting the right data analytics framework is essential for transforming information into intelligent action. Whether you’re optimizing operations, understanding customer behavior, or planning for long-term growth, a well-structured framework acts as the foundation for smarter, faster decisions.

If you’re looking to implement or upgrade your data analytics capabilities, Folio3 Data Services offers end-to-end support — from data strategy and engineering to visualization and advanced analytics. Our experts tailor frameworks to your business needs, ensuring scalable, secure, and insight-driven results.

Ready to take the next step? Connect with our data experts today.